Art from supercomputing

21 April 2017

Last Thursday marked the opening of the FEAT project (Future Emerging Art and Technology) exhibition in Dundee’s LifeSpace art research gallery. The FEAT project is a pilot that explores the synergy between art and science, and how art can benefit the scientific process. EPCC is involved through the INTERTWinE project.

For the past 12 months the artists Špela and Miha have been working with us on a piece related to supercomputing. They have spent time at a number of European supercomputing centres and attended additional FEAT workshops, such as the one in Vienna last summer, which I also attended and discussed in a previous blog article.

The artists are looking at the role of algorithms and data in our lives, and specifically wanted to explore whether feeding unintended data into certain algorithms would produce unexpected and interesting results. For instance, the weather forecast can have a major impact on decisions in unrelated areas, such as financial markets. But what happens if we set its initial conditions to something entirely unexpected, such as a microscope image of somebody’s cells? Will the forecast produce something intelligible, and if so, what does this mean for our confidence in the forecast in general?

A repository of data

Also involved on the technical side was Slavko Glamočanin, who has developed Naprava, an audio/visual platform that can be used as a building block for displaying and working with multimedia. In the run-up to last week, Slavko installed Naprava on a Raspberry Pi and wrote a number of specialist add-ons that interface between different inputs (such as a microscope, an endoscope camera, a microphone, and a common API for algorithms to post data to) and outputs (such as projectors and algorithms). The Raspberry Pi also acted as a central repository, holding all the data, which could then be sent to a number of destinations via a web UI.
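To make the routing idea a little more concrete, here is a minimal Python sketch of a central repository in the spirit described above. It is not Naprava’s code or API: the class `DataRepository` and its methods `post`, `register_output` and `send` are hypothetical names chosen for illustration, and in the real set-up the outputs were projectors and algorithms rather than Python callables.

```python
from typing import Callable, Dict, List

# Hypothetical type: an output is anything that consumes raw bytes
# (in the real set-up, a projector or an algorithm).
Output = Callable[[bytes], None]


class DataRepository:
    """Hypothetical central store (not Naprava's API): inputs post named
    data items, and any stored item can be forwarded to a registered output."""

    def __init__(self) -> None:
        self._items: Dict[str, bytes] = {}
        self._outputs: Dict[str, Output] = {}

    def post(self, name: str, data: bytes) -> None:
        # Inputs (microscope, camera, microphone, algorithms) store data by name.
        self._items[name] = data

    def register_output(self, name: str, handler: Output) -> None:
        # Outputs register a handler that will receive forwarded data.
        self._outputs[name] = handler

    def send(self, item: str, output: str) -> None:
        # Forward a stored item to a chosen output, as a web UI action might.
        self._outputs[output](self._items[item])

    def item_names(self) -> List[str]:
        # List everything currently held in the repository.
        return sorted(self._items)


if __name__ == "__main__":
    repo = DataRepository()
    repo.register_output("printer", lambda data: print(len(data), "bytes"))
    repo.post("microphone-001", b"\x01\x02\x03")
    repo.send("microphone-001", "printer")
```

Keeping inputs and outputs decoupled through a single store means any input can be routed to any output without either side knowing about the other.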

The algorithms

When I arrived in Dundee on the Monday, the first thing we did was finalise the specific algorithms to be used in the piece and exactly what we would do during the three days of development.

The first algorithm we chose was XMountains, a fractal terrain generator written by EPCC’s Stephen Booth. At the heart of this code is a random number generator used to determine the terrain, so Slavko swapped it for a number generator based on data held in the repository. We could then send the code different data, which it used to generate the landscape. What I found really interesting was that different types of data produced different types of landscape: audio data, for instance, tended to result in jerky and unpredictable landscapes, whereas images produced far smoother ones. Movies of these landscapes were then given to crowdsourced Mechanical Turk workers, who recorded 30-second responses describing the feelings or memories the landscapes evoked.

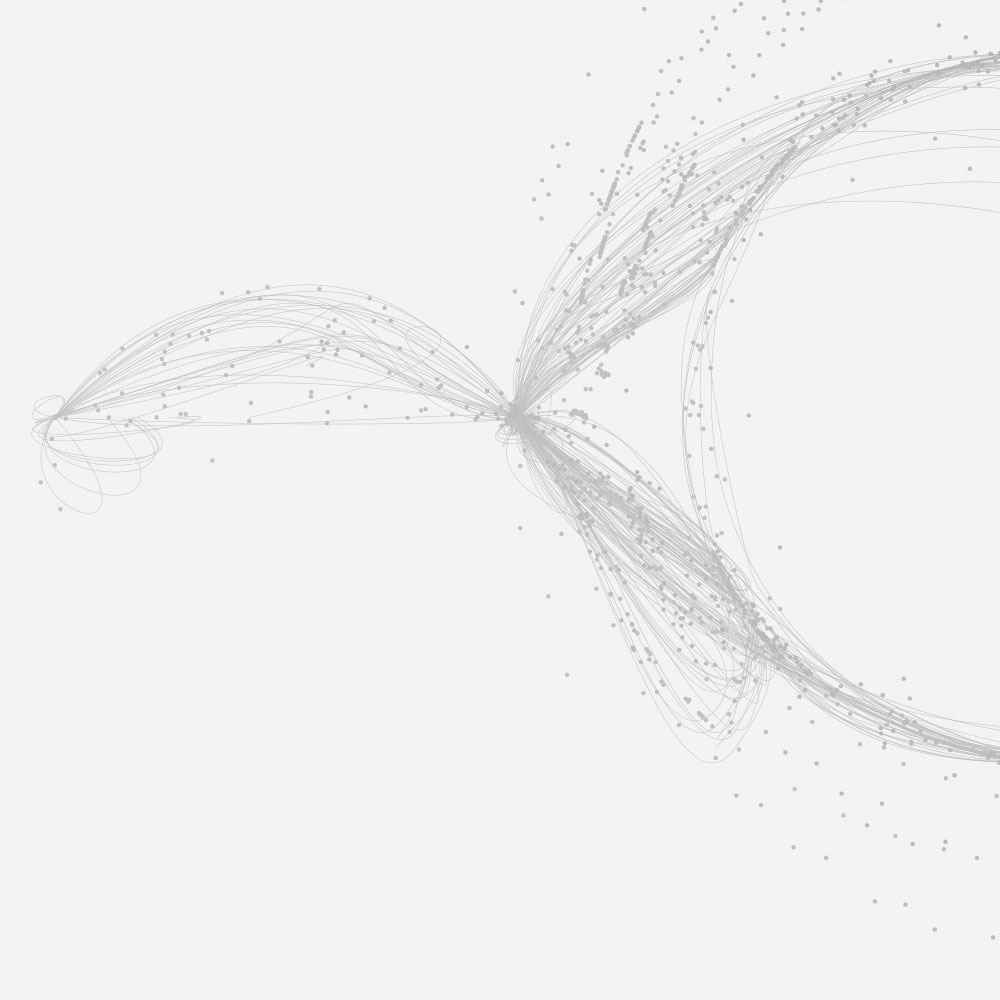
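Swapping a random number generator for a data-driven one can be sketched as follows. This is an illustration under assumptions, not XMountains’ actual code: the class `DataDrivenRandom` is hypothetical, and the terrain is built with simple 1D midpoint displacement, a standard fractal technique used here as a stand-in for whatever XMountains does internally. Each “random” value is just the next byte of the input data scaled into [0, 1).

```python
from typing import List


class DataDrivenRandom:
    """Hypothetical stand-in for a pseudo-random generator: each call returns
    the next byte of a data buffer scaled into [0, 1), wrapping at the end."""

    def __init__(self, data: bytes) -> None:
        if not data:
            raise ValueError("data must not be empty")
        self._data = data
        self._pos = 0

    def random(self) -> float:
        value = self._data[self._pos] / 256.0  # 0..255 -> [0, 1)
        self._pos = (self._pos + 1) % len(self._data)
        return value


def midpoint_displacement(rng: DataDrivenRandom, levels: int,
                          roughness: float = 0.5) -> List[float]:
    """1D midpoint-displacement height profile with 2**levels + 1 points.

    Endpoints start at 0; each pass sets every midpoint to the average of its
    neighbours plus a displacement in [-scale, scale), then shrinks scale."""
    size = 2 ** levels + 1
    heights = [0.0] * size
    step = size - 1
    scale = 1.0
    while step > 1:
        half = step // 2
        for start in range(0, size - 1, step):
            mid = start + half
            average = (heights[start] + heights[start + step]) / 2.0
            # Map the data-driven value from [0, 1) to [-scale, scale).
            heights[mid] = average + (2.0 * rng.random() - 1.0) * scale
        step = half
        scale *= roughness
    return heights


if __name__ == "__main__":
    profile = midpoint_displacement(DataDrivenRandom(b"any bytes at all"), levels=4)
    print([round(h, 2) for h in profile])
```

Because every value comes from the input bytes, the same data always produces the same landscape, while different data produces a different one.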

The second algorithm we identified was a star extractor code, used by astronomers to identify stars and galaxies in noisy telescope images. We found that the extractor would still work on any image we ran through it, extracting a (sometimes very large) number of stars. This output was then processed into a JPG image “mask” of the stars and galaxies against a transparent background. In the same manner, I ran over 10,000 map tiles from Google Earth and other texture images through the extractor and produced a star JPG mask for each of them (it took an entire night to process them all!). With this library in place, we could extract the stars and galaxies from a new input image and compare them against the pre-generated masks to find the closest fit. The end result was an image search in which the closest match was determined by comparing the identified stars and galaxies rather than the original images. It was really interesting to see which source images, potentially looking nothing like each other, were judged the closest match on this basis.
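A minimal sketch of the matching step, assuming each star mask is stored as a set of pixel coordinates and that the closest fit is the mask with the highest Jaccard (intersection-over-union) score. Both the representation and the score are assumptions for illustration, and `mask_from_grid` is a hypothetical, much cruder stand-in for the real star extractor: it simply keeps pixels at or above a brightness threshold.

```python
from typing import Dict, List, Set, Tuple

Pixel = Tuple[int, int]  # (x, y) coordinate of a "star" pixel


def mask_from_grid(grid: List[List[int]], threshold: int) -> Set[Pixel]:
    """Hypothetical crude extractor: keep pixels at or above a threshold."""
    return {(x, y)
            for y, row in enumerate(grid)
            for x, value in enumerate(row)
            if value >= threshold}


def jaccard(a: Set[Pixel], b: Set[Pixel]) -> float:
    """Intersection over union of two masks (1.0 means identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def closest_mask(query: Set[Pixel],
                 library: Dict[str, Set[Pixel]]) -> Tuple[str, float]:
    """Return the name and score of the library mask most similar to query."""
    if not library:
        raise ValueError("library must not be empty")
    best_name = max(library, key=lambda name: jaccard(query, library[name]))
    return best_name, jaccard(query, library[best_name])


if __name__ == "__main__":
    tiles = {"tile_a": {(0, 0), (1, 1)}, "tile_b": {(5, 5)}}
    query = mask_from_grid([[255, 0], [0, 200]], threshold=128)
    print(closest_mask(query, tiles))
```

Since only the extracted masks are compared, two source images can score as a close match even if they look nothing alike.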

We also took along our Raspberry Pi cluster, Wee Archie, and our weather demo, which runs on the cluster and is driven by the MONC atmospheric model that I have developed with the UK Met Office. Again, the idea was to feed in unpredictable inputs, which were used to set the land-surface conditions, determining the location and amount of land or water at different points in the model. As the model progressed, we could send it different binary data, which would modify the conditions dynamically and change its behaviour. It was interesting to see the different and unpredictable weather and cloud conditions generated from the different inputs, and how dramatically these could change.
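One way arbitrary binary input could be turned into land-surface conditions is sketched below. This does not show MONC’s real interface: `land_mask_from_bytes` and `land_fraction` are hypothetical helpers that assign one byte per grid cell and mark the cell as land if the byte is at or above a threshold, otherwise water.

```python
from typing import List


def land_mask_from_bytes(data: bytes, nx: int, ny: int,
                         threshold: int = 128) -> List[List[bool]]:
    """Hypothetical mapping from raw bytes to an ny-by-nx land/water grid.

    One byte per cell (row-major); True means land, False means water.
    Bytes are reused cyclically if data is shorter than nx * ny."""
    if not data:
        raise ValueError("data must not be empty")
    if nx <= 0 or ny <= 0:
        raise ValueError("grid dimensions must be positive")
    return [[data[(j * nx + i) % len(data)] >= threshold for i in range(nx)]
            for j in range(ny)]


def land_fraction(mask: List[List[bool]]) -> float:
    """Fraction of grid cells marked as land."""
    cells = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / cells


if __name__ == "__main__":
    mask = land_mask_from_bytes(b"any binary input", nx=8, ny=4)
    for row in mask:
        print("".join("#" if land else "~" for land in row))
    print(f"land fraction: {land_fraction(mask):.2f}")
```

Calling it again with new data part-way through a run gives a fresh mask, which is roughly the idea behind changing the conditions dynamically.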

So what's next?

It was a really busy few days working on these algorithms and getting them ready for the performance on the Thursday evening, but it seemed to go really well and, in my view, was a good first exhibit of the piece. If you are in Dundee, the exhibition runs until June 17, and all six pieces produced by the FEAT project are well worth seeing.

We are currently planning updates to this specific performance in the run-up to its next exhibition, which will be in Brussels in September.

The exhibition runs from April 13 to June 17.