Designing quantum algorithms at NQCC’s Second UK Quantum Hackathon

22 November 2023

Jakub Adamski, a PhD student at EPCC, writes about attending a hackathon organised by the National Quantum Computing Centre (NQCC) this summer.

Image shows Jakub's team, which won first prize in the Hackathon, and its mentors. (Jakub is third from left.)

Held at the University of Birmingham, the hackathon [1] was intended to spread awareness about the current capabilities and limitations of quantum computers. Participants were divided into ten teams, with each given a specific use case which we had approximately 30 hours to find a quantum solution to. The topics varied, from logistic planning to numerical simulations of systems such as nuclear fusion reactors or jet engines. The teams were also assigned target quantum hardware from multiple providers, on which the algorithms could be tested in real life. The hackathon had many partners interested in quantum computing, such as the NHS, the National Grid and Rolls-Royce.

I was in a team of five, among which there were two students from Bristol and one from London. Our use case was given by Rolls-Royce, who are interested in simulations of power systems such as jet engines. We were assigned three mentors – from Rolls-Royce, Classiq (which makes software for the development of quantum circuits), and IonQ (one of the hardware providers). Our team was lucky to earn the first prize in the hackathon.

In this blog post, I would like to describe what we were working on, and what the event was like in general. I am also going to use it as an opportunity to talk a bit more about quantum computing.

Simulating power systems on a quantum computer

Nowadays, there are two main algorithms used for large scale simulations of power systems – computational fluid dynamics (CFD) and finite element method (FEM). The core procedure in both is to solve a large number of differential equations, discretised into linear equations. In principle, computing them requires expensive matrix inversion – state-of-the-art methods can invert a 5-billion-by-5-billion matrix on hundreds to thousands of supercomputing CPU and GPU nodes.

There exist quantum approaches the CFD and FEM algorithms, but as exciting as they may sound, they still mostly rely on classical resources, since quantum computers can only run relatively simple tasks. However, there are a few routines that in theory can be exponentially accelerated in the quantum world – and solving linear systems of equations is one of them. In fact, multiple algorithms have been devised for this purpose.

The original motivation for moving this problem onto a quantum computer is the Harrow, Hassidim and Lloyd (HHL) algorithm, published in 2008 [2]. The way it works is that through quantum magic you can encode a vector of size \(2^n\) using just \(n\) qubits. Well, it is actually \(2n+1\) qubits, because for an equation \(A\vec{x}=\vec{b}\) you need to encode both \(\vec{x}\) and \(\vec{b}\) plus an additional ancillary qubit. The matrix \(A\) is encoded within the circuit itself. In an ideal case, both the time and space complexity can get exponentially better on a quantum computer. Unfortunately, this only works on paper – in reality, these magical encodings are not free, and often require an exponential number of quantum gates to be properly implemented. We found that even for simple 8x8 matrices, hundreds of thousands of gates became necessary, and most current quantum computers can only handle up to a few thousand gates in a row before the state is destroyed by noise. And, to be fair, this only scratches the surface of an ocean of problems this algorithm faces – for interested readers, I really recommend this article Read The Fine Print by Scott Aaronson.

My team became interested in two upgraded versions of the HHL algorithm – Variational Quantum Linear Solver (VQLS), and Quantum Singular Value Transformation (QSVT) [3, 4]. The VQLS is a variational algorithm and relies on treating matrix inversion as an optimisation problem. Its effectiveness is limited by the matrix structure and experimental constraints, often making it unreliable. The QSVT is a general algorithm for matrix arithmetic, and can be also used for matrix inversion. We split our team into two groups to explore both algorithms, and I chose to work on the latter – which I am going to describe in more detail below.

Quantum singular value transformation

Before jumping straight into quantum algorithmics, let me first remind you about the basics of how quantum computers work. Essentially, we have a quantum state, or a statevector, which for \(n\) qubits can be treated as a \(2^n\)-element vector. The statevector can be modified by applying gates (or operators), which are basically matrices of size \(2^n \times 2^n\). No wonder quantum computing seems perfect for linear algebra problems! There are also two constraints – the statevector needs to be normalised, i.e. its length must be 1; and the operators have to be unitary, such that \(U^\dagger U = I\) (this way the length of the statevector stays 1 at all times).

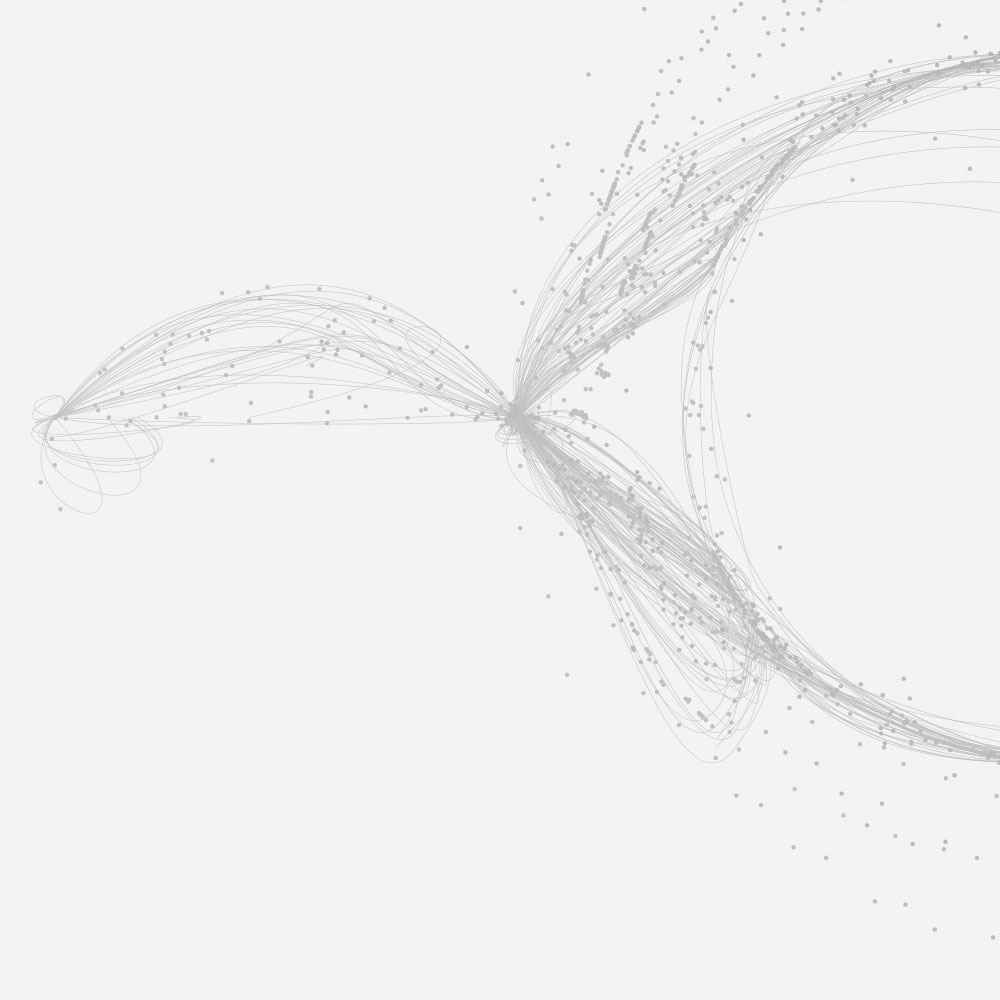

The QSVT algorithm was developed fairly recently in 2018. It quickly got famous due to its generality – it is used to apply any polynomial function to the quantum state. The way it works is best explained with matrices. The examples below are taken from a Pennylane tutorial.

Let’s introduce a very simple unitary matrix of the form: \[ U(a) = \begin{pmatrix} a & \sqrt{1 - a^2} \\ \sqrt{1 - a^2} & -a \\ \end{pmatrix} \]

In addition, we need a parametrised signal-processing matrix: \[ S(\phi) = \begin{pmatrix} e^{i\phi} & 0 \\ 0 & e^{-i\phi} \\ \end{pmatrix} \]

It depends on the parameter \(\phi\). Now, it turns out, that we can determine a series of values \(\phi_0, \phi_1, \ldots, \phi_k\), such that the following matrix multiplication yields: \[ S(\phi_0) U(a) S(\phi_1) U(a) \ldots S(\phi_{k-1}) U (a) S(\phi_k) = \begin{pmatrix} P(a) & * \\ * & * \\ \end{pmatrix} \]

The \(*\) symbols mean that we are not interested in the entries. The \(P(a)\) element means an arbitrary polynomial of \(a\). Hence, by choosing the values of \(\phi_i\), we can compute any polynomial of the first value of the \(U(a)\) matrix.

This demonstration only works for single numbers, however, it turns out that if we use larger matrices, we can treat the entire upper-left quarter of the matrix as an argument, regardless of its size. Such a larger matrix, can for example look as follows (in its block form):

\[ U(A) = \begin{pmatrix} A & \sqrt{I - A A^\dagger} \\ \sqrt{I - A^\dagger A} & -A^\dagger \\ \end{pmatrix} \]

After applying the same series of \(U\) and \(S\) matrices, we get: \[ \begin{pmatrix} P(A) & * \\ * & * \\ \end{pmatrix} \]

Thus, we calculated a polynomial of the matrix \(A\), and it is encoded in a unitary form, which means it can be implemented as a quantum circuit! By setting the initial statevector to \(\vec{x}\), we can use the circuit to compute any expression of the form \(\vec{y} = P(A) \vec{x}\). And since the maximum size of the statevector and the matrices acting on it grows exponentially with the number of qubits, we can quickly reach very large sizes with just a few dozen qubits.

Solving linear systems of equations

Now, you might be wondering how we are supposed to use polynomial expansions to solve a matrix inversion problem. Well, it turns out that inversion is just a reciprocal function \(f(A) = \frac{1}{A}\). Therefore, if we can find a polynomial expansion of the reciprocal function, it will make the problem trivial. Unfortunately, finding such an expansion is not so trivial itself.

The reciprocal function is naturally difficult to expand into a polynomial, for example one cannot just use the well-known Taylor expansion. This is because the function goes to infinity at \(x = 0\). There are more advanced analytical methods, but they all fail near zero. The coefficients can also be estimated numerically, but again, it gets challenging around zero. Therefore, the question becomes how close to zero we need to get.

The answer depends on the matrix and its condition number \(\kappa\). This number determines how sensitive a linear equation \(A \vec{x} = \vec{b}\) is to the change in \(\vec{x}\). The bigger \(\kappa\) is, the more sensitive the equation, and therefore, it is more difficult to solve. Unfortunately, larger matrices tend to have larger condition numbers. This significantly limits the QSVT method, which is supposed to be used for exponentially large matrices.

During the hackathon, I was able to successfully simulate a QSVT linear solver of a \(16 \times 16\) matrix, with condition numbers up to \(\kappa = 8\). Indeed, the accuracy decreased significantly with higher condition values, but the algorithm could still produce decent approximate results. I tried to export my circuit to run it on real quantum hardware, but the library I used was not fully implemented, and it proved impossible without modifying the source code. However, I estimated that such an algorithm should be implementable with about 32,000 gates. In comparison, a naïve HHL implementation was estimated to consist of around 2,000,000 gates, so the potential improvement could reach two orders of magnitude.

Of course, there are other limitations that should be mentioned. First, the number of gates to encode most matrices continues to grow exponentially, so the problem of the HHL algorithm persists, only to a smaller degree. And secondly, the output vector is still inherently quantum, which means we cannot really read it all out. Instead, we need to observe it, which only gives us an estimation of one of the elements, chosen at random – a typical quantum behaviour. To obtain the entire result, we need repeated evaluations of the circuit followed by observations, and in the worst case when all elements are non-zero, we have to do it at least \(2^n\) times. In practice, one can hope the output vector is sparse, and only a few elements are important. With all that in mind, it becomes clear that more improvements are needed if we want to use this method in practice.

Presentation and reception

My team presented our findings in front of the jury, alongside with the other teams. We had an extra upper hand, because my teammates working on the VQLS algorithms managed to run it on hardware from two different providers – IonQ and IBM. We determined that their approach is more suited for near term devices, while the QSVT algorithm will probably be more useful in the long term. In addition, a portion of our presentation was devoted to potential ethical implications of our work.

The presentation was well-received, and we were asked a lot of questions about the methods and hardware we used. In the end, we got the most votes from the jury and won the hackathon, which was a pleasant surprise. Our prizes included medals, bookshop coupons, and a single trophy, which was taken by the two students from Bristol (we decided it was the fairest solution).

Final thoughts

The hackathon was a wonderful experience, and I really recommend it to anyone interested in quantum computing. The atmosphere wasn’t too competitive, with most people genuinely interested in each other’s work. And most importantly, prior knowledge about quantum computing wasn’t essential! It was certainly useful to be familiar with some basic concepts, but the hackathon could be treated as a practical introduction to the field.

To me, experiencing how young quantum computing is in practice was eye-opening – most of the presented solutions were as constrained as ours. I find it exciting to be a part of this developing field, as it feels like I’m in a way rediscovering computer science. I am looking forward to seeing how everything evolves in the coming years. Thank you for reading!

References

- Article on the hackathon published in Physics World

- A. W. Harrow, A. Hassidim, S. Lloyd – Quantum algorithm for solving linear systems of equations

- C. Bravo-Prieto et al. – Variational Quantum Linear Solver

- A. Gilyén et al. – Quantum Singular Value Transformation and Beyond: Exponential Improvements for Quantum Matrix Arithmetics

Author

Jakub Adamski, PhD student at EPCC

Jakub.Adamski@ed.ac.uk