Linpack and BLAS on Wee Archie

7 June 2017

Wee Archie is our mini-supercomputer made from Raspberry Pis, used in outreach to teach members of the public the concepts of supercomputing. It has been so successful that a second one has been built to keep up with demand!

Our two Wee Archies are codenamed Wee Archie Green and Wee Archie Blue, after the colour of their LED displays. Wee Archie Green, the original, is made from Raspberry Pi Model 2Bs, whilst Wee Archie Blue is made from the newer Raspberry Pi Model 3Bs.

To quantify their performance, we decided to run Linpack on them. We followed the instructions found at https://www.howtoforge.com/tutorial/hpl-high-performance-linpack-benchmark-raspberry-pi/, which advise using the version of ATLAS available in the Raspbian repository as the BLAS library. The Raspberry Pi Model 2Bs use quad-core 900MHz ARMv7 processors, whilst the Raspberry Pi Model 3Bs use quad-core 1.2GHz ARMv8 processors. We therefore expected Wee Archie Blue to outperform Wee Archie Green.

Fine tuning parameters

In Linpack, obtaining the highest score for a given machine involves a lot of fine-tuning of parameters. In particular, the problem size (N), the block size (NB) and the parallel decomposition (P and Q) can have a large impact on the score.

Ideally we want N to be as large as possible (its maximum size is limited by the machine's memory), as this increases the computation-to-communication ratio, resulting in a higher FLOP rate. NB specifies the size of the blocks that the matrix is split into and distributed across the processes, for load-balancing purposes. A smaller NB gives better load balancing but more communication, whilst a larger NB can give worse load balancing but requires fewer messages to be sent. It is therefore best to choose an intermediate value of NB that strikes the best compromise between load balancing and communication. We found that NB=64 worked best on both Wee Archies.

A further constraint is that, for best performance, N should be a multiple of NB so that no computation is wasted. P and Q describe how the problem is decomposed in parallel over a P × Q grid of processes, so P × Q must equal the number of MPI processes you are using. The usual advice is to choose P and Q to be roughly equal, but with Q slightly larger than P. The website http://hpl-calculator.sourceforge.net helps with choosing good values for these parameters.
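The P × Q rule can be captured in a few lines. This is a minimal Python sketch of our own (the function name `process_grid` is hypothetical, not part of HPL or the HPL calculator): it picks the squarest factorisation of the process count with P ≤ Q.

```python
from math import isqrt


def process_grid(nprocs: int) -> tuple[int, int]:
    """Return (P, Q) with P * Q == nprocs, P <= Q, and P as close to Q as possible."""
    if nprocs < 1:
        raise ValueError("need at least one MPI process")
    # Walk down from sqrt(nprocs): the first divisor found gives the squarest grid.
    for p in range(isqrt(nprocs), 0, -1):
        if nprocs % p == 0:
            return p, nprocs // p
    raise AssertionError("unreachable: 1 divides every positive integer")


if __name__ == "__main__":
    # e.g. 16 nodes running 1, 2, 3 or 4 MPI processes each
    for n in (16, 32, 48, 64):
        print(n, process_grid(n))
```

For 48 processes this gives P = 6, Q = 8. When the process count is prime (say 7), the only possible grid is 1 × 7.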

Single-node performance

First of all, let's consider the single-node performance of the two Wee Archies. This shows how well Linpack scales over the cores of a single node, gives us a baseline for judging how well it scales across Wee Archie's interconnect, and also gives us an idea of the performance of a single Raspberry Pi board.

We investigated the Linpack score for 1, 2, 3 and 4 cores on a node. A single node of either Wee Archie contains 1GB of RAM. With NB=64, we chose N=9216, corresponding to 80% of the node's total memory. This leaves room for the operating system's memory, as well as any memory used by MPI etc. The results we obtained were surprising. Wee Archie Green outperformed Wee Archie Blue by a factor of about 1.6, with Wee Archie Green's top score being 1.03 GFLOPs, whilst Wee Archie Blue's top score was only 0.63 GFLOPs. This is unexpected, considering that Wee Archie Blue has newer processors with a higher clock rate, and so should perform better than Wee Archie Green. A second surprising result is that although Wee Archie Blue's score increases with core count (as expected), Wee Archie Green's score peaks at 2 cores and decreases for 3 and 4 cores.
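The usual rule of thumb for choosing N can be written down directly: the N × N double-precision matrix needs 8N² bytes, so N ≈ √(fraction × memory / 8), rounded down to a multiple of NB. The sketch below does this (function names are hypothetical). The result depends on which memory figure is fed in: with the nominal 1 GiB and a fraction of 0.8 it gives N = 10304, whereas the matrix for N = 9216 occupies about 63% of 1 GiB. So treat `mem_bytes` as an assumption, to be set from the memory your node actually makes available.

```python
from math import isqrt

BYTES_PER_DOUBLE = 8  # HPL stores the N x N matrix in double precision


def matrix_bytes(n: int) -> int:
    """Memory needed for the N x N double-precision matrix alone."""
    return BYTES_PER_DOUBLE * n * n


def problem_size(mem_bytes: int, fraction: float, nb: int) -> int:
    """Largest N that is a multiple of nb and whose matrix fits in fraction * mem_bytes."""
    n = isqrt(int(fraction * mem_bytes) // BYTES_PER_DOUBLE)
    return n - n % nb  # round down to a whole number of NB blocks


if __name__ == "__main__":
    GiB = 1024 ** 3
    print(problem_size(GiB, 0.8, 64))  # 10304 for the nominal 1 GiB
    print(matrix_bytes(9216) / GiB)    # 0.6328125 for the N used above
```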

Investigating the strange scaling of Wee Archie Green, we found that each MPI process was inexplicably using two threads. As Linpack itself is not threaded, the threading must have been coming from the BLAS library, ATLAS. With two threads per process, two processes already occupy all four cores of a node, so running three or four processes oversubscribes the cores, which would explain why Green's score falls beyond 2 cores. Strangely, although Wee Archie Green and Blue both use the same version of ATLAS from the Raspbian repository, only Green exhibits threading. Furthermore, Wee Archie Blue's poorer performance could be because the ATLAS installed on it was not optimised or compiled for its more modern processor. To test this, we compiled OpenBLAS (http://www.openblas.net) on both machines and investigated its performance.
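One way to spot unexpected library threading like this on Linux is to count the entries under `/proc/<pid>/task`, which holds one entry per thread (tools such as `ps` and `top` report the same information). The following is an illustrative Python sketch, not necessarily how the threads were found here; it assumes a Linux system and that the HPL executable is named `xhpl`.

```python
import os


def count_threads(pid: int) -> int:
    """Number of threads in process `pid` (Linux: one /proc/<pid>/task entry per thread)."""
    return len(os.listdir(f"/proc/{pid}/task"))


def thread_counts(name: str) -> dict[int, int]:
    """Map PID -> thread count for every process whose command name equals `name`."""
    counts = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/cpuinfo
        try:
            with open(f"/proc/{entry}/comm") as f:
                if f.read().strip() == name:
                    counts[int(entry)] = count_threads(int(entry))
        except OSError:
            continue  # the process exited (or is unreadable) while we were scanning
    return counts


if __name__ == "__main__":
    # Run on a node while the benchmark is running; "xhpl" is assumed to be the binary name.
    for pid, n in sorted(thread_counts("xhpl").items()):
        print(f"PID {pid}: {n} thread(s)")
```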

OpenBLAS

Compiling OpenBLAS was as simple as typing 'make' and waiting around 30 minutes whilst it built itself and ran tests to confirm that it was working. We then compiled Linpack against OpenBLAS instead of ATLAS and ran the single-node tests again. Firstly, we found that using all four cores on Wee Archie Blue caused it to overheat, resulting either in incorrect results or in the node crashing. We therefore underclocked the processors from 1.2GHz to 1GHz, after which we had no further problems. Secondly, OpenBLAS uses OpenMP threads, and we found that threads gave slightly better performance than MPI processes, so we used threads within each node.
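Running MPI between processes and BLAS threads within them is mostly a matter of environment variables. Below is a minimal Python sketch that builds the environment and command line for such a run. The helper name and the `./xhpl` path are hypothetical, `mpiexec -n` is the launcher syntax defined by the MPI standard, and placing ranks on particular nodes (hostfiles etc.) is launcher-specific and deliberately left out. OpenBLAS honours `OPENBLAS_NUM_THREADS`, while builds compiled with OpenMP follow `OMP_NUM_THREADS`, so the sketch sets both.

```python
import os
import shlex


def hybrid_launch(nodes: int, cores_per_node: int, ranks_per_node: int = 1,
                  binary: str = "./xhpl") -> tuple[dict[str, str], list[str]]:
    """Return (env, argv) for an MPI + threaded-BLAS HPL run.

    Each MPI rank gets cores_per_node // ranks_per_node BLAS threads, so the
    node's cores are filled without being oversubscribed.
    """
    if ranks_per_node < 1 or cores_per_node % ranks_per_node:
        raise ValueError("ranks_per_node must be a positive divisor of cores_per_node")
    threads = cores_per_node // ranks_per_node
    env = dict(os.environ,
               OMP_NUM_THREADS=str(threads),       # followed by OpenMP builds of OpenBLAS
               OPENBLAS_NUM_THREADS=str(threads))  # followed by non-OpenMP builds
    argv = ["mpiexec", "-n", str(nodes * ranks_per_node), binary]
    return env, argv


if __name__ == "__main__":
    env, argv = hybrid_launch(nodes=16, cores_per_node=4)
    print("OMP_NUM_THREADS=" + env["OMP_NUM_THREADS"], shlex.join(argv))
    # To actually launch: subprocess.run(argv, env=env, check=True)
```

For 16 nodes with four cores each this prints `OMP_NUM_THREADS=4 mpiexec -n 16 ./xhpl`; with one rank per node, HPL's P × Q would then have to equal 16.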

Looking at our results using OpenBLAS, we found the behaviour we had expected from the start: the Linpack score increases with core count, and Wee Archie Blue performs better than Wee Archie Green. A single node of Wee Archie Blue achieves 3.58 GFLOPs, whilst a single node of Wee Archie Green achieves 1.57 GFLOPs. It seems that our suspicion was correct: the ATLAS available in the Raspbian repository is not optimised for the Raspberry Pi 2B or 3B. Although we cannot claim that OpenBLAS is perfectly optimised for the Raspberry Pis, it performs much better than ATLAS.
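To put "much better" into numbers, the ratio of the best single-node scores quoted in this post is a one-liner; this small Python snippet just does that arithmetic.

```python
# Best single-node Linpack scores (GFLOPs) reported in this post.
ATLAS = {"Green": 1.03, "Blue": 0.63}
OPENBLAS = {"Green": 1.57, "Blue": 3.58}


def speedup(machine: str) -> float:
    """How many times faster the OpenBLAS build is than the ATLAS build on one node."""
    return OPENBLAS[machine] / ATLAS[machine]


for machine in ("Green", "Blue"):
    print(f"Wee Archie {machine}: {speedup(machine):.2f}x")
```

It prints 1.52x for Green and 5.68x for Blue, so switching BLAS libraries matters far more on Blue.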

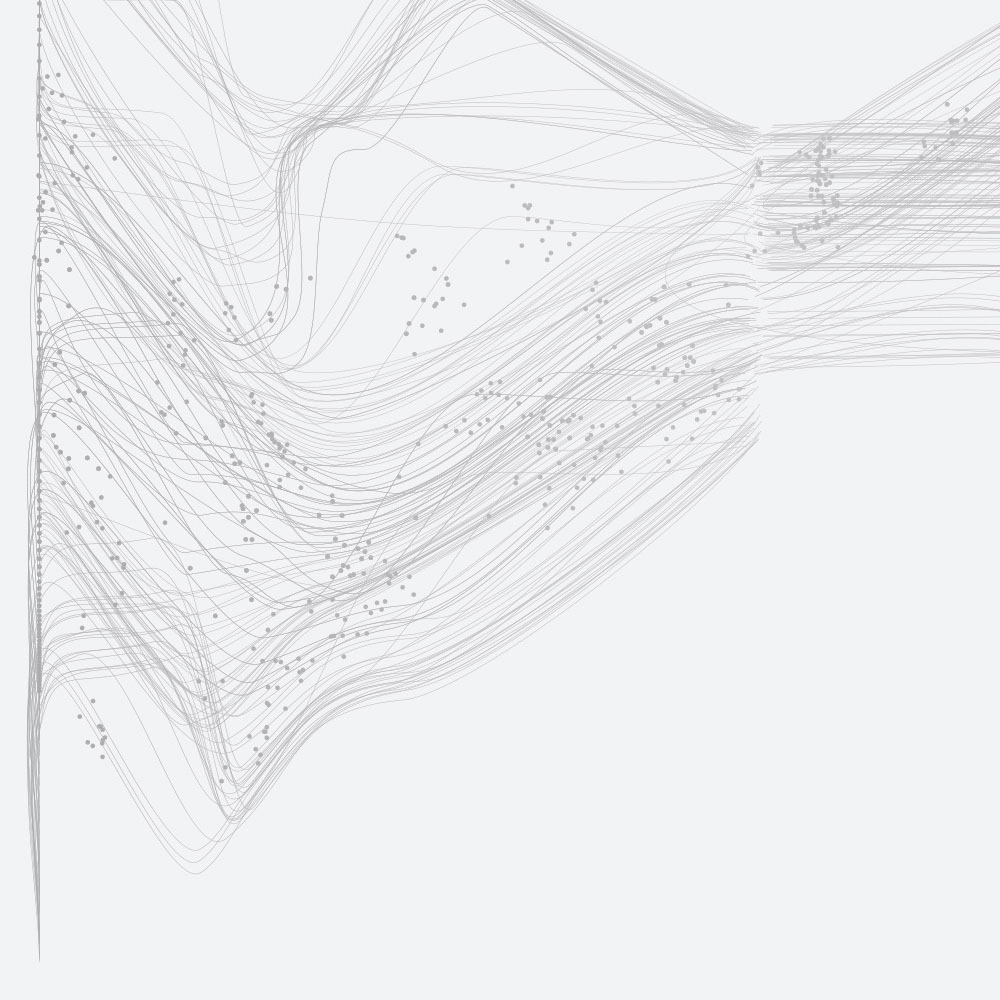

Now let's move on to evaluating both machines' full performance. For this, we used only the 16 compute nodes, not all 18 nodes on each machine. In addition to running Linpack with OpenBLAS, we also ran Linpack with ATLAS so that we could compare the results. As with the single-node runs, we investigated using 1, 2, 3 and 4 cores on each node.

The results show that, using OpenBLAS, Wee Archie Blue has a peak performance of 25.1 GFLOPs and Wee Archie Green has a peak performance of 12.79 GFLOPs. These are significantly better than the 7.1 GFLOPs and 9.1 GFLOPs achieved with ATLAS on Wee Archie Blue and Green respectively. Notably, the 16-node score is nowhere near 16 times the single-node score, as one might naïvely expect, but is rather around half of this value. This can be attributed to both Wee Archies' interconnects, which are 10 Mbit/s Ethernet connections. These add a significant communication bottleneck, which reduces the machines' performance.
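The "around half" figure is the parallel efficiency: the measured score divided by what perfect linear scaling from one node would give. A quick Python check using the OpenBLAS numbers in this post, assuming the single-node and 16-node peaks are directly comparable:

```python
def parallel_efficiency(multi_gflops: float, single_gflops: float, nodes: int) -> float:
    """Measured multi-node score as a fraction of perfect linear scaling."""
    return multi_gflops / (nodes * single_gflops)


# OpenBLAS scores (GFLOPs) from this post: 16-node peak and single-node peak.
for name, full, single in (("Blue", 25.1, 3.58), ("Green", 12.79, 1.57)):
    print(f"Wee Archie {name}: {parallel_efficiency(full, single, 16):.0%}")
```

This prints 44% for Blue and 51% for Green.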

Wee Archie v. the top500

For fun, let's look at when the Wee Archies would have placed highly in the top500, the list of the fastest supercomputers in the world, which is published roughly every 6 months. Going back to June 1993, the first ever list, we find that the top supercomputer then had a score of 59.7 GFLOPs, greater than either Wee Archie. On that list, Wee Archie Blue would have placed 5th and Wee Archie Green 21st. Using the top500 scores from the first 14 years of the list, we extrapolated backwards to see when Wee Archie Green and Blue would have topped hypothetical top500 lists, and found that they would have done so in Nov 1990 and June 1992 respectively.
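The backwards extrapolation can be sketched as a least-squares straight-line fit to the logarithm of the #1 score over time, which assumes exponential growth. The post does not say exactly which fit was used, so treat this as an assumption. Representing list dates as decimal years (1993.5 for June 1993) is another choice of ours, and the function name is hypothetical.

```python
import math


def time_reaching(times: list[float], rmax_gflops: list[float], target_gflops: float) -> float:
    """Fit log(Rmax) = a + b*t by least squares; return the t where the fit equals target_gflops."""
    if len(times) != len(rmax_gflops) or len(times) < 2:
        raise ValueError("need at least two matching (time, Rmax) pairs")
    ys = [math.log(r) for r in rmax_gflops]
    t_mean = sum(times) / len(times)
    y_mean = sum(ys) / len(ys)
    slope = (sum((t - t_mean) * (y - y_mean) for t, y in zip(times, ys))
             / sum((t - t_mean) ** 2 for t in times))
    intercept = y_mean - slope * t_mean
    # Invert the fitted line: log(target) = intercept + slope * t
    return (math.log(target_gflops) - intercept) / slope


if __name__ == "__main__":
    # SYNTHETIC data for illustration only (a #1 score doubling every year), NOT real top500 figures.
    times = [1993.5, 1994.5, 1995.5, 1996.5]
    rmax = [59.7 * 2 ** (t - 1993.5) for t in times]
    print(round(time_reaching(times, rmax, 59.7 / 4), 2))  # 1991.5
```

To reproduce an estimate like the one above, one would pass the #1 scores from the first 14 years of the list (not reproduced here) with targets of 12.8 and 25.1 GFLOPs.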

In conclusion, we measured the performance of Wee Archie Green and Blue with Linpack, finding peak performances of 12.8 GFLOPs and 25.1 GFLOPs respectively. Along the way, we found that the BLAS available in the Raspbian repository (ATLAS) gave very poor performance, so we compiled OpenBLAS and used it instead. This demonstrates the importance of using a good BLAS library to get optimum performance out of your supercomputer, and why it is advisable to use optimised libraries provided by vendors, such as cray-libsci on ARCHER or Intel MKL on Intel systems.