Spreading the love

Thread and process binding

Note, this post was updated on the 23rd March 2017 to include how to bind threads correctly on Cray systems (aprun -cc rather than taskset)

Making sure threads and processes are correctly placed, or bound, on cores or processors is essential to ensure good performance for a range of parallel applications.

This is not a new topic, and has been covered well by others before, e.g. http://www.glennklockwood.com/hpc-howtos/process-affinity.html. Generally this is handled for you: if you're running an MPI program then your mpirun/mpiexec/aprun job launcher will do sensible process binding to cores.

If you're using OpenMP then the operating system should distribute your threads sensibly to cores as well. If you're using hybrid parallelisation (for example MPI+OpenMP) then you have to be a bit more careful, because you generally want to ensure the threads that each MPI process spawns run on hardware close to where the MPI process itself is running. The MPI process binding is set up by the job launcher, the OpenMP thread binding is controlled by the operating system, and these two don't always align in the way you'd like.

Hybrid placement

However, it's generally possible to wrangle the job launcher and operating system to get reasonable process and thread distribution. For the Cray job launcher, aprun, you can specify a depth for each MPI process (using the -d flag), which sets how many cores to reserve for each MPI process. This gives space for threads to be spawned on cores close to the MPI process, thus maintaining data locality and re-using data in the same caches.

For other job launchers similar flags are available, for instance -bysocket -bind-to-socket for Open MPI, or setting export I_MPI_PIN_DOMAIN=omp with Intel MPI. This type of approach is important for good performance on modern HPC systems, because compute nodes tend to be multi-processor, and in such an environment we need to ensure processes and threads are using both processors rather than all ending up on a single processor.

For example, ARCHER has 2 processors per node, each with 12 cores. If I run a hybrid application using 12 MPI processes, each of which spawns 2 OpenMP threads, and I don't use the -d flag when running my job, the job launcher will start my 12 MPI processes on the 12 cores of the first processor, leaving the second processor free. Then when the MPI processes spawn threads they are likely to be bound to the cores the MPI process is using, meaning each core on the first processor will end up hosting 2 threads, and the second processor will still be empty.
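To make the ARCHER example concrete, here is a minimal Python sketch of what a depth argument does to placement. It is a simplified model, not aprun's actual algorithm: the function names are made up for illustration, and it assumes cores 0-11 sit on the first processor and cores 12-23 on the second. The real job would be launched with something like aprun -n 12 -d 2 ./my_executable, with OMP_NUM_THREADS=2 set.

```python
# Simplified model of depth-based placement; NOT aprun's real algorithm.
# Assumption: cores 0-11 are on processor 0, cores 12-23 on processor 1.
CORES_PER_PROCESSOR = 12   # ARCHER: 12 cores per processor
PROCESSORS_PER_NODE = 2    # ARCHER: 2 processors per node


def place_ranks(n_ranks, depth):
    """Give each MPI rank `depth` consecutive cores, starting at core 0."""
    total = CORES_PER_PROCESSOR * PROCESSORS_PER_NODE
    if n_ranks * depth > total:
        raise ValueError("more cores requested than the node has")
    return [list(range(r * depth, (r + 1) * depth)) for r in range(n_ranks)]


def processors_used(placement):
    """Return the set of processors touched by a placement."""
    return {core // CORES_PER_PROCESSOR for cores in placement for core in cores}


no_depth = place_ranks(12, depth=1)    # like omitting -d: ranks on cores 0..11
with_depth = place_ranks(12, depth=2)  # like -d 2: rank r gets cores 2r, 2r+1

print(processors_used(no_depth))    # only processor 0 is used
print(processors_used(with_depth))  # both processors are used
print(with_depth[6])                # rank 6 starts the second processor
```

With depth 1 every rank sits on the first processor, so its two threads have to share a core. With depth 2 each rank owns a pair of cores and the ranks spill over onto the second processor.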

Distributing processes

This damages performance both by sharing a core between multiple threads, and by leaving the resources (memory bandwidth, floating point capacity, etc.) of the second processor unused. Indeed, even if you aren't running a hybrid application and are just using MPI, if you underpopulate (put fewer MPI processes on the node than there are cores) you probably want to take a similar approach, forcing the MPI processes to be spread across the processors in the node rather than clustering on the first processor.

Often underpopulation is done to get more memory per MPI process than would be available if the node was full. Of course, whilst we want more memory per process, we also want to utilise all the memory bandwidth in the node, and that is a per-processor feature. Therefore, we need to distribute our MPI processes across processors. We don't actually have to distribute processes inside a processor: we can use (for instance) the first six cores of each processor, as inside a processor resources tend to be equally shared between cores.
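As a rough illustration of that layout, the sketch below works out which cores 12 MPI processes would occupy if they were split evenly over the two processors of an ARCHER node, filling the first cores of each. The function name and the core numbering (0-11 on the first processor, 12-23 on the second) are assumptions for illustration only. On Cray systems, aprun's -S option (processes per NUMA node) should request this kind of layout, though that is worth checking against the aprun man page on your system.

```python
import math


def spread_over_processors(n_ranks, n_processors=2, cores_per_processor=12):
    """Return one core id per rank, splitting ranks evenly over processors.

    Assumption: processor p owns cores p*cores_per_processor onwards.
    """
    per_processor = math.ceil(n_ranks / n_processors)
    if per_processor > cores_per_processor:
        raise ValueError("too many ranks for this node")
    cores = []
    for rank in range(n_ranks):
        processor, slot = divmod(rank, per_processor)
        cores.append(processor * cores_per_processor + slot)
    return cores


# 12 ranks on a 24-core node: six on each processor.
print(spread_over_processors(12))
```

Ranks 0-5 land on cores 0-5 and ranks 6-11 on cores 12-17, so both processors' memory channels are in use even though half the cores are idle.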

This isn't always the case though. AMD's Interlagos processor had floating point units shared between two cores (a pair of cores was called a module in this architecture), meaning floating point performance when underpopulating could be improved by ensuring processes were distributed to modules rather than cores.

Thread binding in new architectures

Sometimes we also encounter scenarios where the operating system or job launcher isn't quite configured properly and does something stupid with thread binding. We saw this in the early days of the Xeon Phi (Knights Corner), where hybrid thread binding was not sensibly handled in the default case (where you don't manually specify the binding), leading to real performance problems as shown in the graph in Figure 2.

Figure 2: CP2K threading performance on Xeon Phi Knights Corner: Picture courtesy of EPCC's Iain Bethune and Fiona Reid.

However, one place where it's not obvious process distribution should be required is the latest Xeon Phi (Knights Landing). It's a single processor so it would appear, at least superficially, that we shouldn't have to worry about process placement for non-threaded applications. Clearly we do care that threads and processes are placed on empty cores, rather than sharing cores, if empty cores are available, but – provided the default setup is sensible – this should happen.

Process binding on KNL

On closer inspection of the Knights Landing (KNL) architecture, though, it becomes evident that whilst it is a single processor, it does have some of the characteristics of multi-processor systems. The KNL is divided into quadrants, with associated memory channels, which means if we underpopulate MPI processes on a KNL processor and don't distribute them across the quadrants then we may see performance loss.

Indeed, as shown in Figure 3, when underpopulating with CP2K we can see somewhere close to a 20% performance difference between distributing processes across the quadrants equally and just putting them on the first N cores in the system. The benchmark data is from our ARCHER KNL system, with the difference between the two lines being whether a depth argument was specified for the job launcher or not (i.e. whether processes were spread out across cores or not).

Figure 3: CP2K thread performance with and without distributions of threads across the 64 cores of the KNL processor. Graph courtesy of Fiona Reid.

Thread binding on KNL

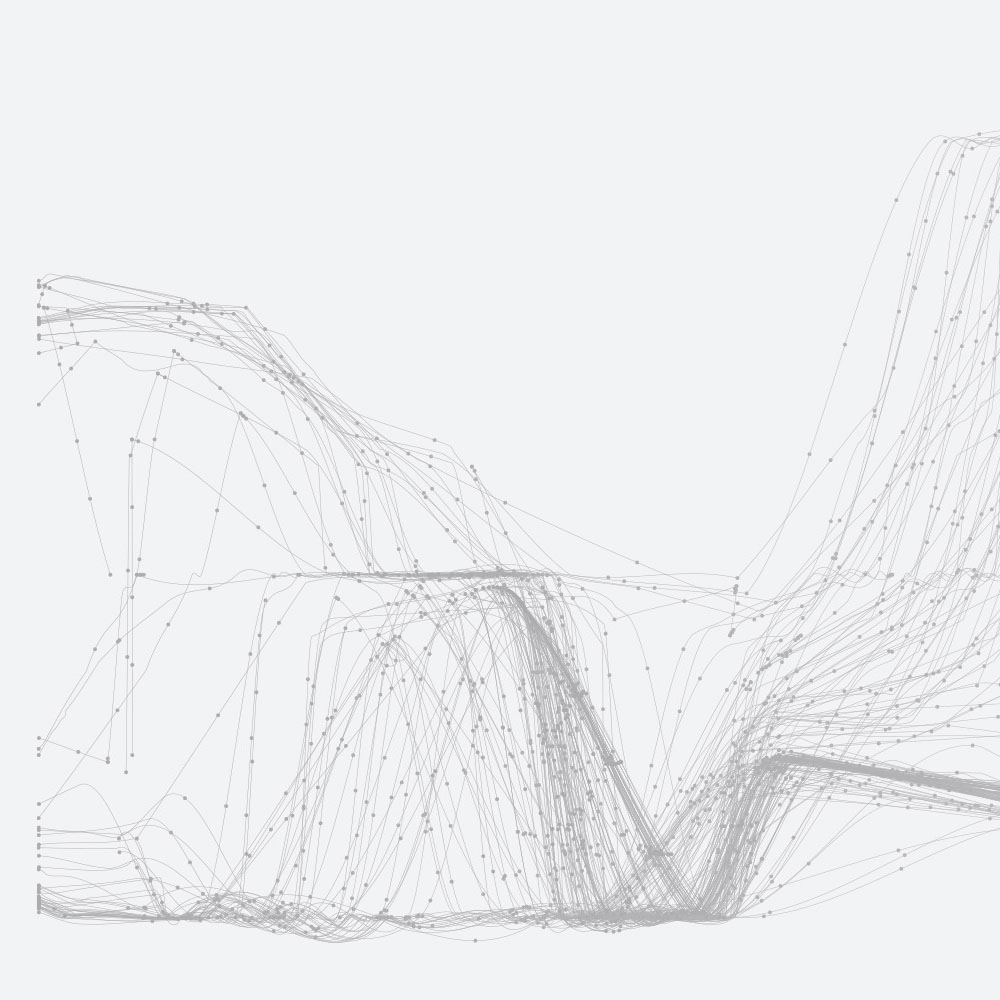

Likewise, we see the same type of performance behaviour if we investigate thread bindings, i.e. when running threaded applications (OpenMP or otherwise) on the KNL processor. Figure 4 shows the performance of R using foreach with %dopar% threaded parallelism on KNL. Running R with threads and the standard operating system binding performs ~10% slower than using taskset to distribute threads across quadrants evenly (i.e. taskset -c 0-63:4 R .... will distribute 16 threads across the 64 cores of the KNL processors we are using).
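If the stride notation in that CPU list is unfamiliar, the following Python sketch expands a taskset-style list so you can see exactly which cores are selected. The function name is hypothetical, and it only handles the simple forms used here (single CPUs, ranges, and ranges with a :stride suffix).

```python
def expand_cpu_list(spec):
    """Expand a taskset-style CPU list such as '0-63:4' or '0,2,8-11'."""
    cpus = []
    for part in spec.split(","):
        rng, _, stride = part.partition(":")
        first, _, last = rng.partition("-")
        start = int(first)
        stop = int(last) if last else start
        step = int(stride) if stride else 1
        cpus.extend(range(start, stop + 1, step))
    return cpus


cores = expand_cpu_list("0-63:4")
print(len(cores), cores[:4], cores[-1])  # 16 [0, 4, 8, 12] 60
```

So 0-63:4 selects every fourth core from 0 to 60, which is where the 16 evenly spaced threads come from.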

For our Cray system taskset is overridden by the aprun job launcher, so you need to use the aprun flag -cc to specify thread bindings. For instance, if I wanted to run 4 threads and spread them across an ARCHER KNL node I would use: aprun -n 1 -cc 0,16,32,48 ./my_executable
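Writing the core list by hand gets tedious for larger thread counts, so here is a small sketch that generates an evenly spaced list for a given number of threads and builds the corresponding aprun line. The helper names are made up for illustration, and it assumes a 64-core KNL with the thread count dividing the core count reasonably evenly.

```python
def spread_cores(n_threads, n_cores=64):
    """Return n_threads core ids evenly spaced over n_cores, starting at 0."""
    if not 0 < n_threads <= n_cores:
        raise ValueError("need between 1 and n_cores threads")
    stride = n_cores // n_threads
    return [i * stride for i in range(n_threads)]


def aprun_command(n_threads, executable="./my_executable"):
    """Build an aprun line with an explicit -cc core list (a string only)."""
    cc = ",".join(str(core) for core in spread_cores(n_threads))
    return f"aprun -n 1 -cc {cc} {executable}"


print(aprun_command(4))  # aprun -n 1 -cc 0,16,32,48 ./my_executable
```

This only constructs the command string; it does not launch anything, so it is safe to try on a login node before pasting the result into a job script.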

Obviously, this doesn't matter if you're filling up the cores on the processor: it will have no impact on a 64 thread run. Likewise, if we are only using a small number of threads (i.e. 4 or fewer) it also doesn't seem to improve performance for this benchmark, presumably because so few threads cannot saturate the memory bandwidth available in a single quadrant.

Figure 4: R threaded parallel performance on KNL when spreading the threads or keeping them compact.

Hyperthreads

To further complicate this, modern processors often have the capability to run multiple processes or threads on a single core simultaneously. Both the KNL (which can run up to 4 hyperthreads per core) and ARCHER (which can run up to 2 hyperthreads per core) offer this functionality, meaning you can run more processes, threads, or threads × processes on a node than you have physical cores.

Generally, these are numbered in such a way that threads and processes are given out to cores before they are given out to hyperthreads, and most MPI job launchers and operating system thread binding policies will deal sensibly with them. However, on a system like ARCHER, if you do want to exploit these you have to enable them in the job launcher. On ARCHER this is done by passing the -j flag to the job launcher, specifying how many hyperthreads you want to enable on each core.

For our standard ARCHER nodes you can specify either -j 1, which is the default, or enable hyperthreading with -j 2. For our ARCHER KNL system you can specify -j 1 to -j 4, although Intel advises against using 3 hyperthreads per core as it has performance impacts compared to 2 or 4 hyperthreads.
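The "cores before hyperthreads" numbering can be sketched as follows. This assumes the common scheme where logical CPUs 0 to N-1 are the first hyperthread of each of the N physical cores, N to 2N-1 the second, and so on. That is an assumption rather than something guaranteed on every system, so check with lscpu or /proc/cpuinfo before relying on it. The function name is hypothetical.

```python
def logical_cpus(core, n_cores, hyperthreads):
    """Logical CPU ids on one physical core, assuming cores-first numbering."""
    if not 0 <= core < n_cores:
        raise ValueError("core id out of range")
    return [core + h * n_cores for h in range(hyperthreads)]


# ARCHER node with -j 2: 24 cores, 2 hyperthreads each, 48 logical CPUs.
print(logical_cpus(0, n_cores=24, hyperthreads=2))   # [0, 24]

# KNL with -j 4: 64 cores, 4 hyperthreads each, 256 logical CPUs.
print(logical_cpus(5, n_cores=64, hyperthreads=4))   # [5, 69, 133, 197]
```

Under this numbering, binding two threads to logical CPUs 0 and 24 on an ARCHER node would put them on the same physical core, which is usually not what you want unless you are deliberately using hyperthreads.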

Blissful ignorance

Fortunately, for most applications you can ignore these issues, as you will be either filling up nodes completely, or the job launcher/operating system will be doing sensible binding for you. However, if you're ever underpopulating processors/nodes or are running hybrid applications it's always worth investigating how your threads and processes are being placed on the hardware, because simple changes can give good performance benefits.

Most MPI libraries and OpenMP implementations provide flags or environment variables that report, when you run your program, where threads and processes are being placed. If you don't have access to that you can also investigate through helper programs like Cray's xthi, a small program that prints out where it is being placed when run.
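If you have neither of those to hand, a few lines of Python can do a rough version of the same check. The sketch below is not xthi, just a hypothetical stand-in: it reports which logical CPUs the current process is allowed to run on, using os.sched_getaffinity, which is only available on Linux (elsewhere it falls back to listing every CPU). It shows the affinity mask of the Python process itself, not of the threads inside a compiled OpenMP program.

```python
import os


def allowed_cpus():
    """Return the sorted logical CPUs this process may run on."""
    if hasattr(os, "sched_getaffinity"):      # Linux only
        return sorted(os.sched_getaffinity(0))
    return list(range(os.cpu_count() or 1))   # fallback: no affinity info


def format_cpu_list(cpus):
    """Compress a list such as [0, 1, 2, 3, 8] into the string '0-3,8'."""
    ranges = []
    for cpu in sorted(cpus):
        if ranges and cpu == ranges[-1][1] + 1:
            ranges[-1][1] = cpu               # extend the current run
        else:
            ranges.append([cpu, cpu])         # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


print(f"pid {os.getpid()} may run on CPUs {format_cpu_list(allowed_cpus())}")
```

Running this under the launcher or taskset settings you intend to use for the real job (saved as, say, where_am_i.py) gives a quick sanity check that the binding is what you expected.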