The tyranny of 100x

Reporting Performance

Measuring performance is a key part of any code optimisation or parallelisation process. Without knowing the baseline performance, and what has been achieved after the work, it's impossible to judge how successful any intervention has been. However, it's something that we, as a community, get wrong all the time, at least when we present our results in papers, presentations, blog posts, etc. I'm not suggesting that people aren't measuring performance correctly, or are deliberately falsifying performance improvements, but the incentives to make your work look as impressive as possible cause people to present results in a way that really isn't justified.

Partly this is driven by hardware manufacturers' marketing departments. They love presenting performance improvements with the biggest numbers possible: 100x, 1000x, etc. There are periodic spats between Nvidia and Intel about the relative performance of their latest hardware, e.g. [1], [2]. Given the nature of marketing, these are always going to focus on results that present the hardware in the best light compared to other manufacturers' offerings, and this may mean selectively choosing applications that work well on your products, or comparing to slightly older versions of competitors' hardware if that makes your new release look better.

That's understandable for the marketing of products, but it's not behaviour that research scientists or software engineers should get involved in. Yet future funding, publications, and standing in the community can be improved by demonstrating amazing results. If you submit a paper to a conference and it can claim a 10x speedup of an application, it's likely to get preference over one presenting a 50% speedup. If you can make the case that you've taken funders' money and made codes run 100x faster, then they are going to be much happier than if you tell them you got a 25% performance improvement.

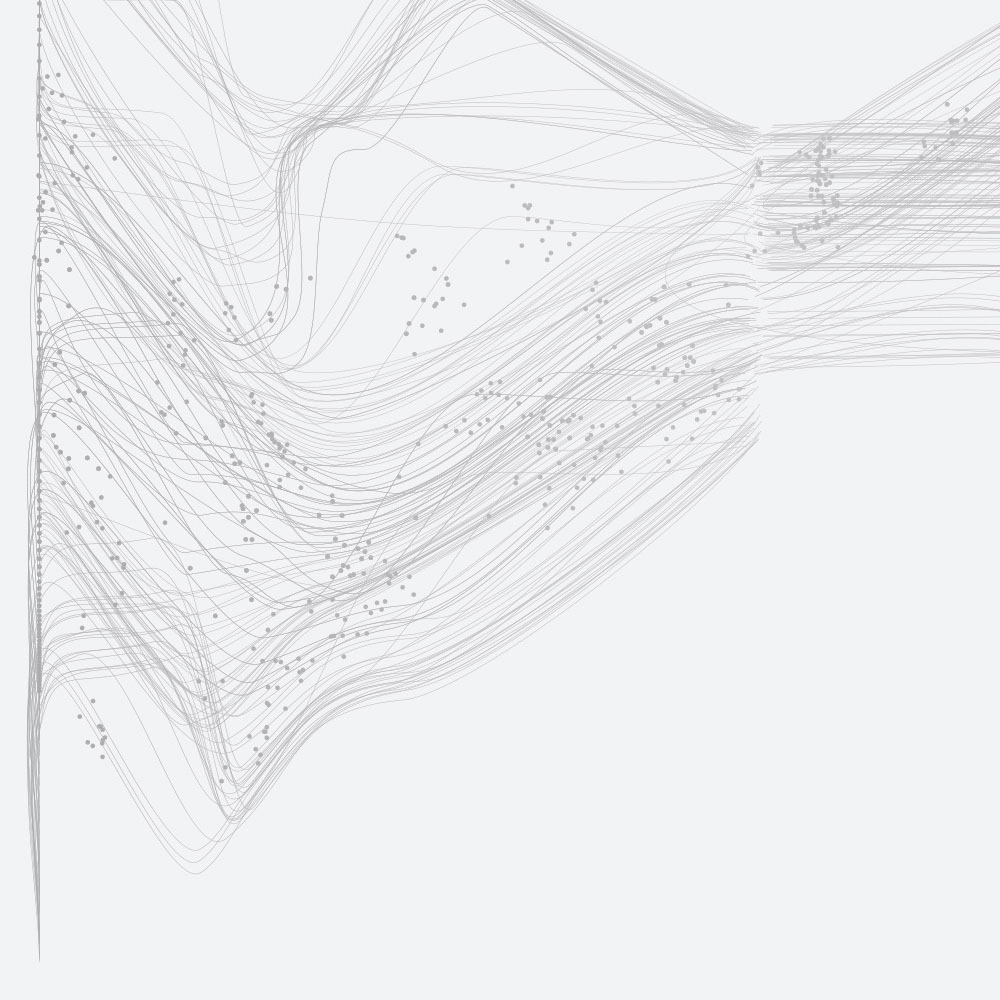

Such performance presentations are usually done by comparing parallel performance to serial performance, or comparing multiple nodes to a single process or thread. However, they can also be comparing different algorithms, or codes with full functionality to computational kernels without all the costly I/O, control logic, and other things that real codes need.

Overblown Reporting

One good example of such behaviour that springs to mind was when developers from Cambridge University and Intel reported a 100x speedup on their application going to the Xeon Phi processor; see this web page for an example of the media coverage it got. In fact, they claimed a 108x speedup on the Xeon Phi, but if you read the article you'll see that figure is arrived at by comparing a fully parallelised and optimised code on a Xeon Phi processor with the original (unoptimised) code on a single core of a standard processor.

Yes, the performance improvement is real, but the 108x figure does not reflect the optimisation work, the parallelisation work, or the performance of the Xeon Phi hardware individually. In fact, if you look at it, they actually get around a 3x speedup from parallelising the code, a 2x speedup on top of that from optimising the code, and then an 8x performance improvement from changing the algorithm they were using. If you then compare the standard processor to the Xeon Phi using the optimised code, you see around a 30% performance boost from using the Xeon Phi.
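To see how separate effects like these compound into a single headline number, here is a minimal Python sketch that multiplies the approximate factors quoted above. The stage names and the `combined_speedup` helper are mine, purely for illustration, and the factors are the rounded figures from this post rather than anything I have measured.

```python
from math import prod

# Approximate per-stage speedups as quoted in the text (rounded, not measured here).
stages = {
    "parallelisation": 3.0,
    "code optimisation": 2.0,
    "algorithm change": 8.0,
    "Xeon Phi vs standard processor": 1.3,
}


def combined_speedup(factors):
    """Speedups applied one after another compound multiplicatively."""
    return prod(factors)


# Everything together, and the software changes without the hardware switch.
total = combined_speedup(stages.values())
software_only = combined_speedup(
    factor for name, factor in stages.items() if not name.startswith("Xeon Phi")
)

for name, factor in stages.items():
    print(f"{name:32s} {factor:5.1f}x")
print(f"{'software changes combined':32s} {software_only:5.1f}x")
print(f"{'all stages combined':32s} {total:5.1f}x")
```

With these rounded factors the product comes out at roughly 62x rather than 108x, so the individual figures are evidently approximate, but the shape of the result is what matters: the hardware contributes by far the smallest factor in the headline number.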

So, there is a performance benefit from using the hardware, and they've also done a really good job in speeding up the code. Any one of those individual results, 2x, 3x, 8x, is something to be proud of. Something to shout about, work well done. Comparing a fully optimised parallel code on a high performance compute device to a serial, unoptimised, low performing algorithm isn't.

Plenty of Fish in the Sea

There are plenty of other examples of people doing this, be it Google and Columbia University claiming a 35x speed-up for their new programming language, but where they are comparing a serial program to a fully parallel one running on a GPU; or companies suggesting Cloud resources have higher performance than HPC systems, but where different generations of hardware are being compared, benchmarks are used that only really test the CPU hardware, and speedup is presented rather than runtime.

Fair enough, you might say, what harm is there in presenting results in the best way possible? Provided the results are real, surely it's up to me how I present them, and anyone reading them will understand the context, right? It's not affecting anyone, is it? Unfortunately, I think it is. Making such claims has the potential to devalue good work done by others that isn't presented in such a way.

Small Steps

Large performance claims make the 10%, 20%, 7% etc. improvements look poor. They make incremental improvements look like failures. However, if someone improves a large application, something like VASP, by 10% then that would save ~£170,000 per year on ARCHER alone (based on last month's usage taken from this page). A small optimisation in overall runtime, but a large impact on computational resource usage and costs for science done around the world.
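The arithmetic behind that kind of estimate is simple enough to sketch. The Python below assumes an annual VASP cost on ARCHER of about £1.7M, which is only the figure implied by "10% saves ~£170,000" and not a number taken from the usage page; the function names are hypothetical too. It also assumes cost scales directly with runtime.

```python
# Assumption: annual cost implied by "a 10% improvement saves ~GBP 170,000".
ASSUMED_ANNUAL_COST_GBP = 1_700_000


def annual_saving(annual_cost, runtime_reduction):
    """Money saved per year if every run takes `runtime_reduction` less time.

    `runtime_reduction` is a fraction, e.g. 0.10 for runs that finish 10% sooner.
    Assumes cost is proportional to runtime.
    """
    return annual_cost * runtime_reduction


def runtime_reduction_from_speedup(speedup):
    """Convert a speedup factor (old time / new time) to a fractional reduction."""
    return 1.0 - 1.0 / speedup


for reduction in (0.07, 0.10, 0.20):
    saving = annual_saving(ASSUMED_ANNUAL_COST_GBP, reduction)
    print(f"{reduction:4.0%} less runtime -> ~GBP {saving:,.0f} saved per year")
```

The conversion helper is there because "10% faster" can be read two ways: a 10% shorter runtime saves 10% of the cost, whereas a 1.1x speedup shortens runtime by only about 9%. Stating runtimes alongside percentages removes that ambiguity.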

Furthermore, people often don't recognise that most of the applications using our HPC system have been around for a while, and have been optimised and re-optimised many times over their lifetimes. Therefore, a 10% optimisation of VASP may be much more impressive than a 2x speedup of a newly written application. We need some estimation of the headroom for performance of applications, as well as the performance achieved from the optimisation, to really assess whether what is being reported is great work or straightforward development.

Therefore, I'd argue for always including sensible performance comparisons between hardware platforms, or software versions, ensuring the closest things are being compared. If you're comparing the performance of a GPU, don't compare it to a single core of one CPU. Likewise, if you're comparing the performance of 6 GPUs, don't compare it to a single node with only one or two processors in it. If you've optimised a parallel application, compare to the version you had before optimisation, not to a serial version or some other configuration. That's not to say you can't compare a GPU to a single core as well, but at least include the fair comparison if you can.
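As a rough illustration of how much the choice of baseline matters, here is a small Python sketch that reports the same result against two baselines, alongside the raw runtimes. All of the runtimes and configuration names are hypothetical, made up for this example, and not measurements from any real system.

```python
# Hypothetical runtimes in seconds (illustrative only, not real measurements).
runtimes = {
    "serial, unoptimised, 1 CPU core": 1000.0,
    "parallel, optimised, full CPU node": 25.0,
    "parallel, optimised, 1 GPU": 20.0,
}


def speedup(baseline_seconds, new_seconds):
    """Speedup factor of the new configuration relative to a baseline."""
    return baseline_seconds / new_seconds


new = runtimes["parallel, optimised, 1 GPU"]

# The eye-catching number: GPU result against a single unoptimised core.
headline = speedup(runtimes["serial, unoptimised, 1 CPU core"], new)
# The like-for-like number: same optimised code on a full CPU node.
fair = speedup(runtimes["parallel, optimised, full CPU node"], new)

# Report the runtimes as well as the ratios, so readers can check both.
for name, seconds in runtimes.items():
    print(f"{name:36s} {seconds:8.1f} s")
print(f"GPU vs single unoptimised core: {headline:.1f}x")
print(f"GPU vs optimised full CPU node: {fair:.2f}x")
```

In this made-up case the same GPU run can be described as either 50x or 1.25x faster. Both numbers are correct; only the second tells you what the GPU itself bought you.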