ARCHER2: a world-class advanced computing resource for UK researchers operated by EPCC

Providing crucial support for world-class research across UK science and industry.

A service for science

ARCHER2 supports and enables a wide range of sciences including physics, chemistry, engineering and the environmental sciences. The service has been used to simulate iceberg calving in 3D, to create cardiac digital twins, and to predict the spread of airborne pathogens indoors. See the ARCHER2 website for many more examples of the impressive range of science it supports.

A service for industry

EPCC provides on-demand access to ARCHER2 for sectors including life sciences, manufacturing, and materials science, with companies leveraging the ability to parallelise large-scale problems across ARCHER2’s 5,860 compute nodes.

Responsible computing

We are committed to reducing the ARCHER2 service’s carbon footprint, thereby contributing to the University of Edinburgh’s commitment to being zero carbon by 2040.

The ARCHER2 service has led on reducing emissions in several ways, including improving the energy efficiency of the service, improving the efficiency of the ACF data centre, and quantifying the embodied emissions associated with the ARCHER2 hardware.

Community building

The ARCHER2 service works to build a strong, supportive relationship with research communities to deliver an effective service that benefits UK high performance computing. Our active outreach programme demonstrates to people across the UK the relevance and benefits of high performance computing.

ARCHER2 training

To support ARCHER2 users, EPCC designs and delivers an active training programme of online and in-person courses.

Technology

ARCHER2 is an HPE Cray EX supercomputing system with a peak performance of 28 PFLOP/s. The machine has 5,860 compute nodes, each with two 64-core CPUs, giving 750,080 cores in total.
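The total core count follows directly from the node count and the dual-socket, 64-core configuration. A quick arithmetic check in Python (the constant names are illustrative, not taken from any ARCHER2 tool):

```python
# Figures taken from the ARCHER2 description above.
COMPUTE_NODES = 5_860
SOCKETS_PER_NODE = 2   # dual-socket compute nodes
CORES_PER_SOCKET = 64  # 64-core AMD EPYC 7742 processors

cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET
total_cores = COMPUTE_NODES * cores_per_node

print(f"{cores_per_node} cores per node, {total_cores:,} cores in total")
# prints "128 cores per node, 750,080 cores in total"
```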

Cooling

ARCHER2 compute nodes are housed in water-cooled HPE Cray EX “Mountain” cabinets.

Compute resource

5,860 CPU compute nodes, each with:

• 2 x 2.25 GHz AMD EPYC™ 7742 64-core processors

• 2 x 100 Gbps Slingshot interconnect interfaces

• 256 GB or 512 GB RAM (standard and high-memory nodes).

4 GPU test and development nodes, each with:

• 1 x 2.8 GHz AMD EPYC™ 7534P 32-core processor

• 4 x AMD Instinct™ MI210 GPU accelerators

• 2 x 100 Gbps Slingshot interconnect interfaces

• 512 GB RAM.
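For job planning, per-node figures such as memory per core and injection bandwidth are often more useful than machine totals. The sketch below derives them from the lists above. It assumes that "256/512 GB" describes two memory variants of the same 128-core CPU node, and the `NodeSpec` class and its method names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hypothetical per-node description built from the lists above."""
    cores: int      # CPU cores per node
    memory_gb: int  # RAM per node, in GB
    gpus: int = 0   # GPU accelerators per node
    nics: int = 2   # 100 Gbps Slingshot interfaces per node

    def memory_per_core_gb(self) -> float:
        return self.memory_gb / self.cores

    def injection_gbps(self) -> int:
        return self.nics * 100


# Assumption: the two CPU node variants differ only in memory.
cpu_standard = NodeSpec(cores=2 * 64, memory_gb=256)
cpu_highmem = NodeSpec(cores=2 * 64, memory_gb=512)
gpu_node = NodeSpec(cores=32, memory_gb=512, gpus=4)

GPU_NODE_COUNT = 4
total_gpus = GPU_NODE_COUNT * gpu_node.gpus

print(cpu_standard.memory_per_core_gb())  # 2.0
print(cpu_highmem.memory_per_core_gb())   # 4.0
print(gpu_node.injection_gbps())          # 200
print(total_gpus)                         # 16
```

Memory per core matters because a job that needs more than about 2 GB per process may not be able to use every core on a standard node.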

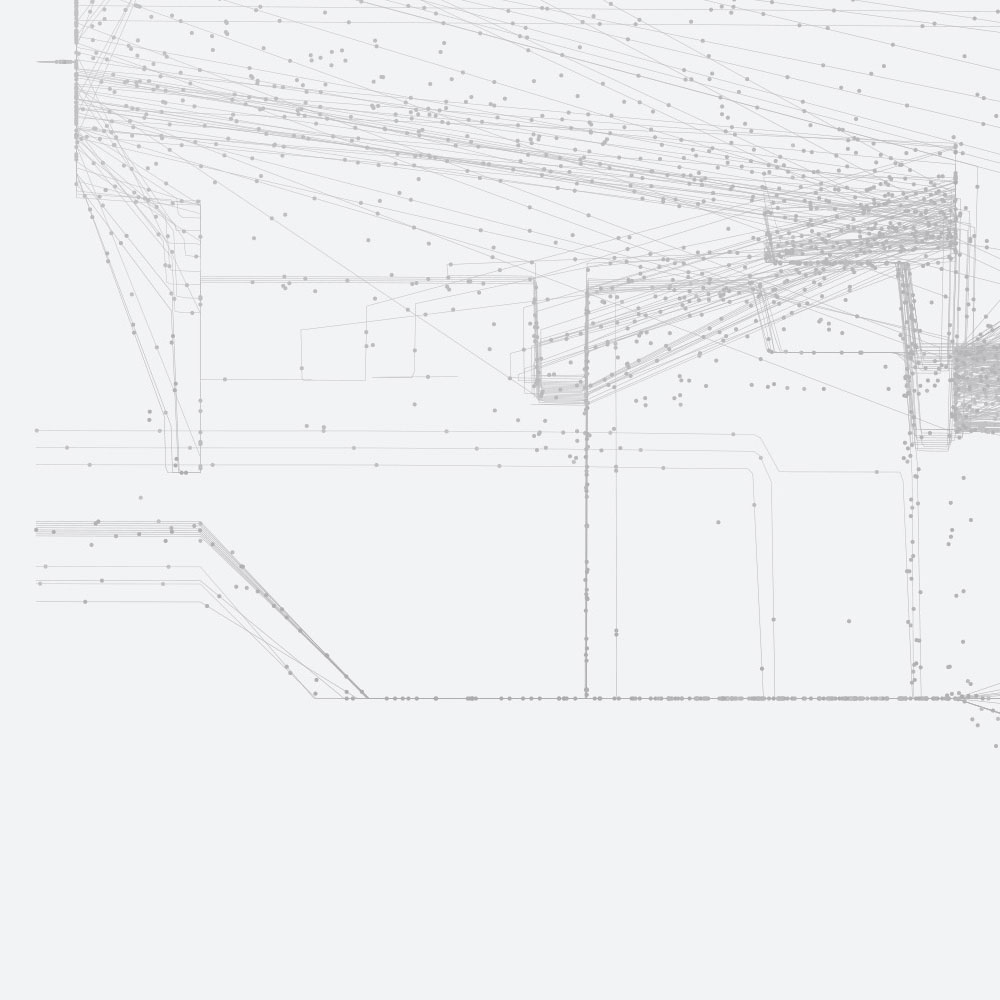

Interconnect

ARCHER2 has an HPE Slingshot interconnect with 200 Gb/s signalling per node, which uses a dragonfly topology:

• 128 nodes are organised into a group, with two groups per ARCHER2 compute cabinet.

• Within a group, 16 switches are connected all-to-all by electrical links, and each node connects to two of these switches, also by electrical links.

• All groups are connected to each other in an all-to-all pattern using optical links.
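The key property of this dragonfly layout, a small number of switch hops between any two switches, can be illustrated with a switch-level model. This is a sketch under stated assumptions, not a description of ARCHER2's actual cabling: it packs the 5,860 nodes into ceil(5860 / 128) = 46 groups (the real group count may differ, for example because of service or storage nodes), uses a single global link per pair of groups placed on a hypothetical switch, and leaves out the node-to-switch links. A breadth-first search then finds the largest switch-to-switch hop count.

```python
import math
from collections import deque
from itertools import combinations

NODES = 5_860
NODES_PER_GROUP = 128
SWITCHES_PER_GROUP = 16

Switch = tuple[int, int]  # (group index, switch index within the group)


def build_switch_graph(groups: int, switches: int) -> dict[Switch, set[Switch]]:
    """Simplified dragonfly: all-to-all inside each group, plus one global
    link per pair of groups (real systems typically use several)."""
    adj: dict[Switch, set[Switch]] = {
        (g, s): set() for g in range(groups) for s in range(switches)
    }
    # Local (electrical) links: every pair of switches within a group.
    for g in range(groups):
        for s1, s2 in combinations(range(switches), 2):
            adj[(g, s1)].add((g, s2))
            adj[(g, s2)].add((g, s1))
    # Global (optical) links: every pair of groups, on a hypothetical switch choice.
    for a, b in combinations(range(groups), 2):
        u, v = (a, b % switches), (b, a % switches)
        adj[u].add(v)
        adj[v].add(u)
    return adj


def eccentricity(adj: dict[Switch, set[Switch]], source: Switch) -> int:
    """Largest shortest-path hop count from `source`, via breadth-first search."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return max(dist.values())


groups = math.ceil(NODES / NODES_PER_GROUP)
adj = build_switch_graph(groups, SWITCHES_PER_GROUP)
local_links = groups * SWITCHES_PER_GROUP * (SWITCHES_PER_GROUP - 1) // 2
global_links = groups * (groups - 1) // 2
diameter = max(eccentricity(adj, sw) for sw in adj)

print(groups, local_links, global_links, diameter)
```

Under these assumptions the model reports 46 groups, 5,520 local links, 1,035 global links and a diameter of 3 switch hops (local, global, local); a node-to-node path adds one node-to-switch link at each end. Keeping the diameter this small without a deep switch hierarchy is the main appeal of the dragonfly design.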

Storage

• 4 high-performance HPE ClusterStor™ L300 Lustre file systems: 14.5 PB total

• 1 solid state (NVMe) HPE ClusterStor™ E1000 Lustre file system: 1 PB

• 4 backed-up NetApp FAS8200 NFS file systems: 1 PB total.

Access

Academic access

UK researchers can request access to ARCHER2 through dedicated allocation mechanisms run by the ARCHER2 partner research councils, EPSRC and NERC. For other enquiries, please contact the ARCHER2 User Support Team at support@archer2.ac.uk.

Or see the ARCHER2 website: www.archer2.ac.uk

Industry access

On-demand access to ARCHER2 is available for industry users. Further details can be found on the ARCHER2 website: www.archer2.ac.uk

Or please contact our Commercial Manager at:

commercial@epcc.ed.ac.uk

Download our ARCHER2 flyer

ARCHER2 flyer 2025 (355.6 KB)