DiRAC: Tesseract (CPU)

Tesseract was an Extreme Scaling CPU-based DiRAC system. It was decommissioned in March 2023.

EPCC hosts the Extreme Scaling component of the DiRAC facility. Tesseract, the Extreme Scaling CPU-based system, was a 1476-node HPE SGI 8600 HPC system housed at EPCC's Advanced Computing Facility. EPCC also provides service support for the entire DiRAC consortium.

Tesseract was one of two DiRAC systems at EPCC, the other being Tursa, a GPU-based system.

Technology

Number of nodes

1476 (1468 CPU nodes, 8 GPU nodes)

CPUs

2x Intel Xeon Silver 4116 (Skylake) per node, 2.1 GHz, 12 cores each

Total CPU cores

35,424

GPUs

4x NVIDIA Tesla V100-PCIE-16GB per GPU node, each GPU with 640 Tensor cores and 5,120 CUDA cores

Total GPU cores

20,480 Tensor cores, 163,840 CUDA cores
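The core totals above follow directly from the per-node figures. The short Python check below reproduces them, assuming (as the 35,424 figure implies) that all 1476 nodes, including the 8 GPU nodes, carry two 12-core CPUs, and that every GPU node holds four V100 cards.

```python
# Per-node figures taken from the Tesseract specification above.
TOTAL_NODES = 1476
GPU_NODES = 8
CPUS_PER_NODE = 2
CORES_PER_CPU = 12          # Intel Xeon Silver 4116
GPUS_PER_GPU_NODE = 4       # NVIDIA Tesla V100-PCIE-16GB
TENSOR_CORES_PER_GPU = 640
CUDA_CORES_PER_GPU = 5120

# Assumption: every node, GPU nodes included, has two CPUs.
total_cpu_cores = TOTAL_NODES * CPUS_PER_NODE * CORES_PER_CPU
total_gpus = GPU_NODES * GPUS_PER_GPU_NODE
total_tensor_cores = total_gpus * TENSOR_CORES_PER_GPU
total_cuda_cores = total_gpus * CUDA_CORES_PER_GPU

print(f"CPU cores:    {total_cpu_cores:,}")     # 35,424
print(f"GPUs:         {total_gpus}")            # 32
print(f"Tensor cores: {total_tensor_cores:,}")  # 20,480
print(f"CUDA cores:   {total_cuda_cores:,}")    # 163,840
```

The Tensor and CUDA core counts in the specification are therefore totals over 32 GPUs (8 nodes x 4 GPUs), not per-node figures.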

System Memory details

141 TB of RAM in total

Storage technologies and specs

3 PB of high-speed DDN Lustre storage

9 PB HPE DMF-managed Spectra tape library

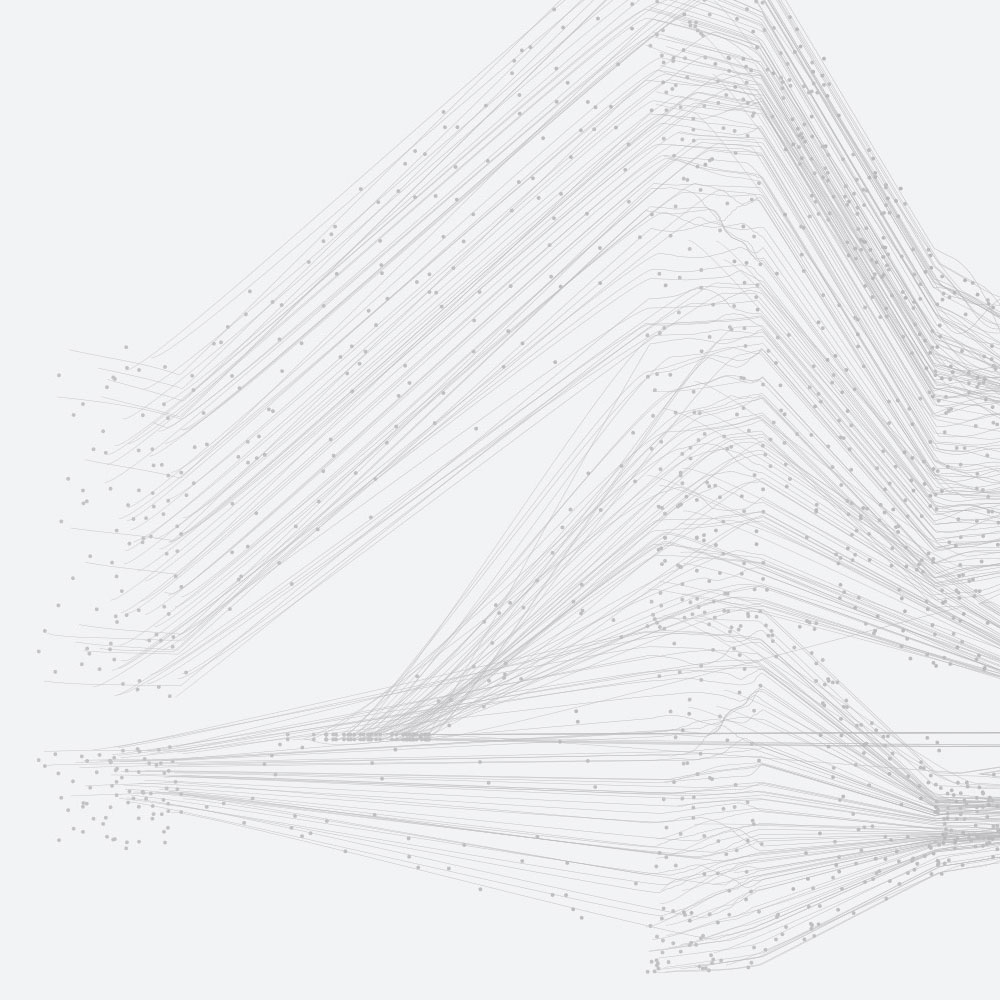

Interconnect technologies and specs

100 Gbit/s Intel Omni-Path

Other technical details

Tesseract differs from typical 8600 and Omni-Path deployments in that each leaf switch connects exactly a power-of-two number of nodes, in this case 16. This allows work running on the hypercube topology of the Omni-Path network to be placed and grouped extremely efficiently.
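To illustrate why a power-of-two leaf size helps, the sketch below assumes a hypothetical contiguous numbering (nodes 0 to 15 on leaf switch 0, 16 to 31 on leaf switch 1, and so on). Because 16 = 2^4, a node's position within its switch can be read as a 4-bit coordinate, i.e. a corner of a 4-dimensional binary hypercube, and any job whose node count is a power of two of at least 16 fills whole leaf switches with none left partially occupied. The function names and the numbering scheme are illustrative assumptions, not Tesseract's actual placement logic.

```python
NODES_PER_LEAF = 16                      # 2**4 nodes per leaf switch on Tesseract
DIMS = NODES_PER_LEAF.bit_length() - 1   # 4 hypercube dimensions for 16 nodes


def placement(node: int) -> tuple[int, tuple[int, ...]]:
    """Return (leaf switch, 4-bit hypercube coordinate) for a node index.

    Hypothetical contiguous numbering: nodes 0-15 on switch 0, 16-31 on switch 1, ...
    """
    leaf, local = divmod(node, NODES_PER_LEAF)
    # Each bit of the local index is one coordinate of the hypercube corner.
    coord = tuple((local >> d) & 1 for d in range(DIMS))
    return leaf, coord


def leaves_spanned(job_nodes: int) -> tuple[int, bool]:
    """Leaf switches used by a job placed from a switch boundary, and whether
    every switch it occupies is completely filled."""
    leaves = -(-job_nodes // NODES_PER_LEAF)  # ceiling division
    return leaves, job_nodes % NODES_PER_LEAF == 0


for n in (16, 64, 256, 24):
    print(n, leaves_spanned(n))
print(placement(37))
```

Under these assumptions, jobs of 16, 64 or 256 nodes occupy 1, 4 or 16 full switches, while a 24-node job spans 2 switches and leaves one half empty.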

Layout/Physical system scale

Tesseract comprised six HPE SGI 8600 cabinets, five support cabinets for system management and storage, and a Cooling Distribution Unit.

Cooling tech and specs

The Tesseract compute nodes were housed in water-cooled HPE SGI 8600 cabinets.

Scheduler details

PBS Pro

System OS Details

CentOS 8 (compute nodes) and Red Hat Enterprise Linux (login and management nodes)

Science and applications

DiRAC is recognised as the primary provider of HPC resources to the STFC Particle Physics, Astroparticle Physics, Astrophysics, Cosmology, Solar System and Planetary Science, and Nuclear Physics (PPAN: STFC Frontier Science) theory community. It provides the modelling, simulation, data analysis and data storage capability that underpins the STFC Science Challenges and our researchers’ world-leading science outcomes.

The STFC Science Challenges are three fundamental questions in frontier physics:

- How did the Universe begin and how is it evolving?

- How do stars and planetary systems develop and how do they support the existence of life?

- What are the basic constituents of matter and how do they interact?

Access

Academic access

Access to DiRAC is coordinated by STFC’s DiRAC Resource Allocation Committee, which issues an annual Call for Proposals for computing time, as well as a Director’s Discretionary Call.

Details of access to DiRAC can be found on the DiRAC website.

Commercial access

DiRAC has a long track record of collaborating with industry on bleeding-edge technology, and we are recognised as a global pioneer of scientific software and computing hardware co-design.

We specialise in the design, deployment, management and utilisation of HPC for simulation and large-scale data analytics. We work closely with our industrial partners on the challenges of data intensive science, machine learning and artificial intelligence that are increasingly important in the modern world.

Details of industrial access to DiRAC can be found on the DiRAC website.

Trial access

If you are a researcher who wants to try the DiRAC resources, get a feel for HPC, test codes, run benchmarks or see what DiRAC can do for you before making a full application, you can apply for seedcorn time.

Details of seedcorn access to DiRAC can be found on the DiRAC website.

People

The system was managed by DiRAC and maintained by EPCC and the hardware provider, HPE.

Support

The DiRAC helpdesk is the first point of contact for all questions relating to the DiRAC Extreme Scaling services. Support is available Monday to Friday from 08:00 until 18:00 UK time, excluding UK public holidays.