Data Assimilation for Smoothed Particle Hydrodynamics

Project Description

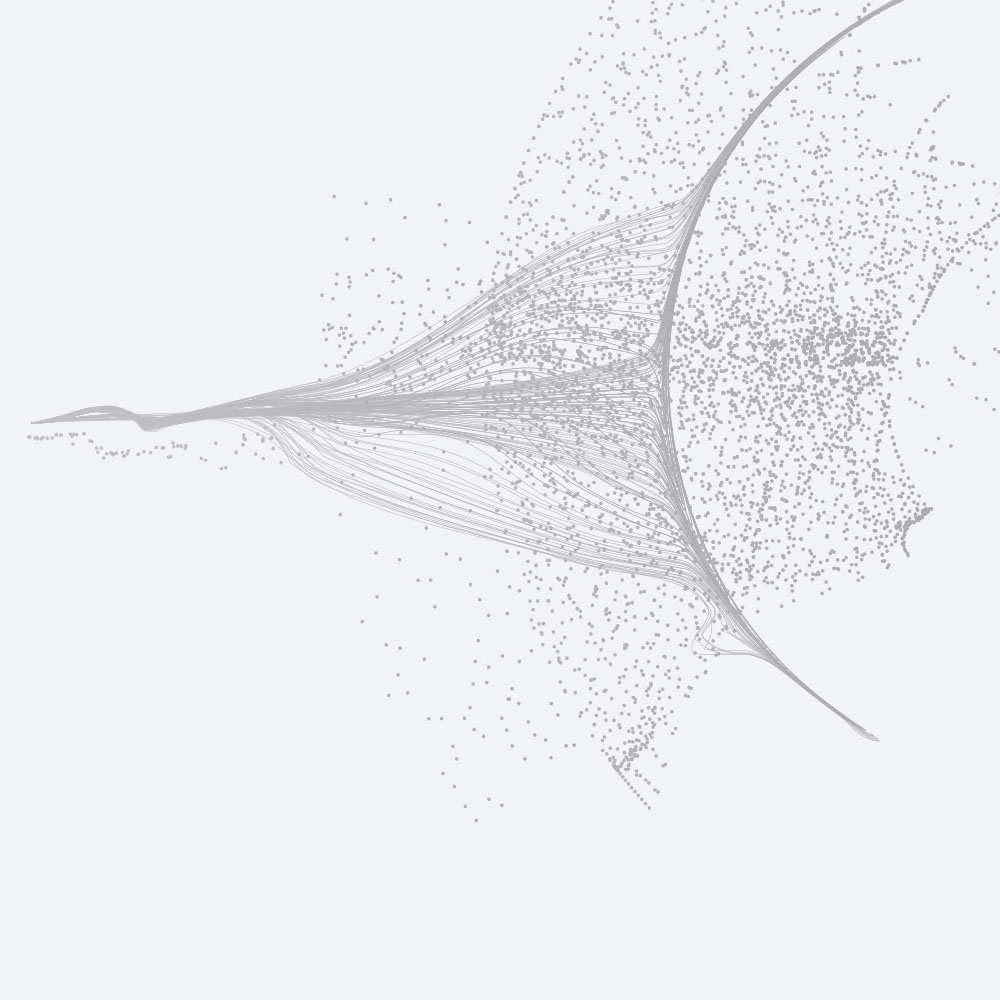

Smoothed particle hydrodynamics (SPH) is a powerful mesh-free method for simulating complex free-surface flows such as dam-break waves, tsunami impact and tank sloshing. Its Lagrangian, particle-based nature makes it ideal for problems involving large deformation, splashing and fluid-structure interaction. However, SPH simulations can drift from reality because of uncertain initial conditions, imperfect model parameters and numerical error. Data assimilation (DA) offers a principled way to combine simulations with observations so that the model is continually corrected, as is routine in numerical weather prediction. This project will bring SPH and DA together to create high-fidelity “digital twins” of extreme free-surface flows.

Primary Supervisor: Dr Joseph O’Connor

Project Overview

The aim of this research will be to enable SPH simulations to ingest data from sensors, gauges or cameras in real time or retrospectively, and to provide quantified uncertainty in key outputs such as free-surface elevation and impact loads. Method development may begin on simpler research codes but will explicitly look towards implementation in real open-source SPH codes (e.g. DualSPHysics). Successful methods could therefore have immediate impact for the wider SPH community.

The core of the PhD is the design and testing of DA schemes tailored to SPH, with flexibility in the methodological emphasis. One possible direction is ensemble-based DA (e.g. Ensemble Kalman Filters and related methods), which represents uncertainty using an ensemble of simulations that is updated when new data arrive. An equally valid direction is variational / adjoint-based DA, using emerging differentiable SPH frameworks (built on automatic differentiation) to optimise initial conditions and model parameters over a time window in a 4D-Var style setting. Both approaches are novel in the SPH context and potentially transformative; the project can evolve towards an ensemble-centred, a variational-centred, or a balanced study depending on interests and results.
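
As a rough illustration of the ensemble-based direction, the analysis step of a stochastic (perturbed-observation) Ensemble Kalman Filter can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the method the project will adopt: the state is treated as a plain vector (for instance, free-surface elevations sampled at fixed locations), the observation operator `H` is linear, observation errors are uncorrelated with a single variance, and the function name `enkf_analysis` and all of its parameters are hypothetical.

```python
import numpy as np


def enkf_analysis(ensemble, obs, H, obs_var, rng):
    """Stochastic EnKF analysis step (illustrative sketch only).

    ensemble : (n_state, n_members) array of forecast states
    obs      : (n_obs,) observation vector
    H        : (n_obs, n_state) linear observation operator (assumed)
    obs_var  : scalar observation-error variance (assumed uncorrelated)
    rng      : numpy.random.Generator used to perturb the observations
    """
    n_obs, n_members = H.shape[0], ensemble.shape[1]

    # Ensemble anomalies (deviations from the ensemble mean).
    anomalies = ensemble - ensemble.mean(axis=1, keepdims=True)
    obs_anomalies = H @ anomalies

    # Sample estimates of P H^T and H P H^T from the ensemble.
    PHt = anomalies @ obs_anomalies.T / (n_members - 1)
    HPHt = obs_anomalies @ obs_anomalies.T / (n_members - 1)
    S = HPHt + obs_var * np.eye(n_obs)

    # Kalman gain K = P H^T S^{-1}; S is symmetric, so solve instead of invert.
    K = np.linalg.solve(S, PHt.T).T

    # Each member assimilates its own noisy copy of the observations.
    perturbed_obs = obs[:, None] + rng.normal(
        0.0, np.sqrt(obs_var), size=(n_obs, n_members)
    )
    return ensemble + K @ (perturbed_obs - H @ ensemble)
```

Calling `enkf_analysis(prior, obs, H, 0.01, np.random.default_rng(0))` with a `(3, 50)` prior ensemble and an `H` that observes only the first state component pulls that component towards the observation and reduces its spread. Because the ensemble is usually far smaller than the state dimension, practical schemes typically also need covariance localisation and inflation, which this sketch omits.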

A strong high-performance computing (HPC) component runs throughout this project. Ensemble DA requires multiple realisations in parallel, while variational DA with differentiable solvers involves substantial gradient computation; both demand efficient use of multi-core CPUs and GPUs. The project will design and evaluate parallel strategies and workflows on EPCC systems, and will validate the methods on representative benchmark problems (such as dam-breaks and wave impacts) using synthetic and, where possible, experimental data.

Overview of the research area

The project sits at the intersection of three active research areas: SPH, data assimilation and HPC. SPH represents fluids by moving particles and naturally captures complex interfaces, splashing and fluid-structure interaction. It is widely used in marine, hydraulic and coastal engineering, yet model calibration is still largely manual and uncertainty is rarely quantified. Data assimilation provides a statistical framework for combining model forecasts with observations to improve state estimates, infer hidden parameters and characterise uncertainty. While DA is mature in geosciences, its application to high-fidelity CFD is still emerging and it is essentially unexplored for SPH, particularly in violent, highly nonlinear regimes. At the same time, modern SPH codes already exploit HPC via GPUs and large clusters, and differentiable physics frameworks now make gradient-based optimisation feasible. This project links these strands by asking how ensemble-based and variational DA can be realised efficiently for particle-based solvers on contemporary HPC architectures, opening up a new area at the interface of advanced CFD, statistics and large-scale computing.

Potential research questions

- How can data assimilation methods developed for Eulerian, mesh-based CFD be reformulated for the Lagrangian, mesh-free SPH setting, and what is the most effective way to represent the SPH state (particle space, mapped grid, or a hybrid)?

- To what extent can assimilating sparse measurements (wave gauges, wall pressures, camera-derived free-surface shapes) reduce errors in unobserved quantities, such as the full free-surface geometry and velocity field, in violent flows?

- In hindcasting mode, can SPH with DA recover initial conditions or key parameters of extreme events (e.g. inflow conditions) more reliably than manual calibration?

- How do design choices in the DA system (ensemble size, observation type and coverage, measurement noise) affect accuracy, stability and uncertainty quantification?

- How can the chosen DA approach be implemented to scale efficiently on modern HPC systems (multi-GPU, multi-node), while keeping communication and I/O costs manageable?
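
To make the "mapped grid" option in the first question more concrete, the sketch below interpolates a scalar particle quantity onto fixed points using Shepard-normalised kernel weights. It is only one hypothetical way of building a fixed-size state vector from a moving particle set. The Wendland C2 kernel shape, the function names `wendland_c2` and `particles_to_grid`, and the dense all-pairs distance computation are assumptions made for brevity; a real SPH code would use its own kernel and a neighbour search.

```python
import numpy as np


def wendland_c2(q):
    """Unnormalised Wendland C2 kernel shape with compact support q = r/h < 2."""
    return np.where(q < 2.0, (1.0 - 0.5 * q) ** 4 * (2.0 * q + 1.0), 0.0)


def particles_to_grid(positions, values, grid_points, h):
    """Shepard-normalised interpolation of particle values onto fixed points.

    positions   : (n_particles, dim) particle coordinates
    values      : (n_particles,) scalar carried by each particle
    grid_points : (n_grid, dim) fixed locations defining the mapped state
    h           : smoothing length (kernel support radius is 2h)

    Returns an (n_grid,) array, with NaN where no particle is within support.
    """
    # Dense pairwise distances: O(n_grid * n_particles), fine for a sketch only.
    diff = grid_points[:, None, :] - positions[None, :, :]
    q = np.linalg.norm(diff, axis=-1) / h
    weights = wendland_c2(q)
    weight_sum = weights.sum(axis=1)

    mapped = np.full(grid_points.shape[0], np.nan)
    covered = weight_sum > 0.0
    # Dividing by the weight sum makes constant fields reproduce exactly.
    mapped[covered] = (weights[covered] @ values) / weight_sum[covered]
    return mapped
```

The NaN entries mark grid points with no fluid nearby, which is one of the difficulties a mapped representation has to handle in free-surface flows: the set of "wet" grid points changes in time and can differ between ensemble members.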

Student Requirements

Minimum requirement of a UK 2:1 honours degree, or its international equivalent, in a relevant subject such as computer science and informatics, physics, mathematics, or engineering.

You must be a competent programmer in at least one of C, C++, Fortran, or Python and should be familiar with mathematical concepts such as algebra, linear algebra, probability and statistics. You must be willing to learn new techniques and technologies quickly, and have an interest in developing new skills and expertise.

- Solid prior exposure to CFD or SPH, plus basic fluid mechanics (e.g. Navier-Stokes, free-surface flows).

- Strong programming skills and willingness to work with and extend large, existing simulation codebases.

- Willingness to work on HPC systems, including Linux-based clusters, and to learn parallel and GPU programming where needed.

- Comfort with data, statistics and uncertainty, sufficient to engage with probabilistic / data-driven methods (the specifics of data assimilation can be learnt during the PhD).

- Problem-solving mindset and adaptability, with enjoyment of creative thinking across coding, maths and HPC.

English language requirements as set by the University of Edinburgh

Recommended/Desirable Skills

- Hands-on experience with SPH codes (e.g. DualSPHysics) or advanced CFD.

- Prior use of HPC or clusters (Linux, job submission, MPI/OpenMP and/or CUDA).

- Exposure to data assimilation, Bayesian inference, control or related topics (e.g. Kalman filters, ensemble methods).

- Good numerical linear algebra (matrix computations, least-squares) and comfort analysing and visualising simulation results.

- Strong analytical and communication skills, with some experience interpreting results and presenting or writing up scientific work.

- Background or interest in coastal, hydraulic or other free-surface flows.

How to apply

Applications should be made via the University application form, available via the degree finder. Please note the proposed supervisor and project title from this page and include these in your application. You may also find that this page is a useful starting point for a research proposal, and we strongly recommend discussing this further with the potential supervisor.

Further Information

- DualSPHysics official website. Available at: https://dual.sphysics.org

- Crespo, A. J. C., Domínguez, J. M., Rogers, B. D., Gómez-Gesteira, M., Longshaw, S., Canelas, R., Vacondio, R., Barreiro, A. and García-Feal, O. (2015) “DualSPHysics: Open-source parallel CFD solver based on Smoothed Particle Hydrodynamics (SPH)”, Computer Physics Communications, 187, pp. 204-216. doi:10.1016/j.cpc.2014.10.004.

- Evensen, G. (2009) Data Assimilation: The Ensemble Kalman Filter. 2nd edn. Berlin: Springer.