ASiMoV-CCS: a new solver for scalable and extensible CFD and combustion simulations

21 October 2025

ASiMoV-CCS is a new solver for CFD and combustion simulations in complex geometries. It was developed at EPCC with collaborators as part of the ASiMoV EPSRC Strategic Prosperity Partnership.

As a greenfield development, the decision was taken early in the design of ASiMoV-CCS to embrace modern Fortran features, so that both high-level, user-facing “science” code and low-level, performance-sensitive backend code could be written in a single language. To achieve this, we have made extensive use of the submodule feature introduced in Fortran 2008 to hide implementation details behind generic interfaces, which helps users to focus on the problem they are trying to solve.

For example, PETSc is currently a de facto hard dependency of the code, as it is used as the basis of parallel linear algebra in ASiMoV-CCS, yet the user code is entirely independent of PETSc. Users simply create matrices, vectors, etc. without requiring familiarity with PETSc. In addition to simplifying user code, this interface abstraction aids the portability of ASiMoV-CCS: the code is not truly dependent on PETSc, and an alternative linear algebra backend could be implemented to satisfy the interface if required.
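The separation between user code and backend can be illustrated with a minimal sketch. ASiMoV-CCS itself achieves this with Fortran submodules; the Python below is only an analogue of the idea, and every name in it (`LinearAlgebraBackend`, `NestedListBackend`, `apply_1d_laplacian`) is hypothetical rather than part of the ASiMoV-CCS or PETSc APIs.

```python
from abc import ABC, abstractmethod


class LinearAlgebraBackend(ABC):
    """Hypothetical interface that the user-facing code programs against."""

    @abstractmethod
    def create_vector(self, n): ...

    @abstractmethod
    def create_matrix(self, n): ...

    @abstractmethod
    def set_entry(self, mat, i, j, value): ...

    @abstractmethod
    def matvec(self, mat, vec): ...


class NestedListBackend(LinearAlgebraBackend):
    """Stand-in backend built on plain lists (an assumption for illustration).

    A PETSc-based backend would implement the same four methods.
    """

    def create_vector(self, n):
        return [0.0] * n

    def create_matrix(self, n):
        return [[0.0] * n for _ in range(n)]

    def set_entry(self, mat, i, j, value):
        mat[i][j] = value

    def matvec(self, mat, vec):
        return [sum(a * x for a, x in zip(row, vec)) for row in mat]


def apply_1d_laplacian(backend, values):
    """'Science' code: it only uses the interface, never backend internals."""
    n = len(values)
    mat = backend.create_matrix(n)
    for i in range(n):
        backend.set_entry(mat, i, i, 2.0)
        if i > 0:
            backend.set_entry(mat, i, i - 1, -1.0)
        if i < n - 1:
            backend.set_entry(mat, i, i + 1, -1.0)
    return backend.matvec(mat, values)


result = apply_1d_laplacian(NestedListBackend(), [1.0, 1.0, 1.0])
```

Swapping the backend means passing a different object to `apply_1d_laplacian`; the function body does not change, which is the portability property described above.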

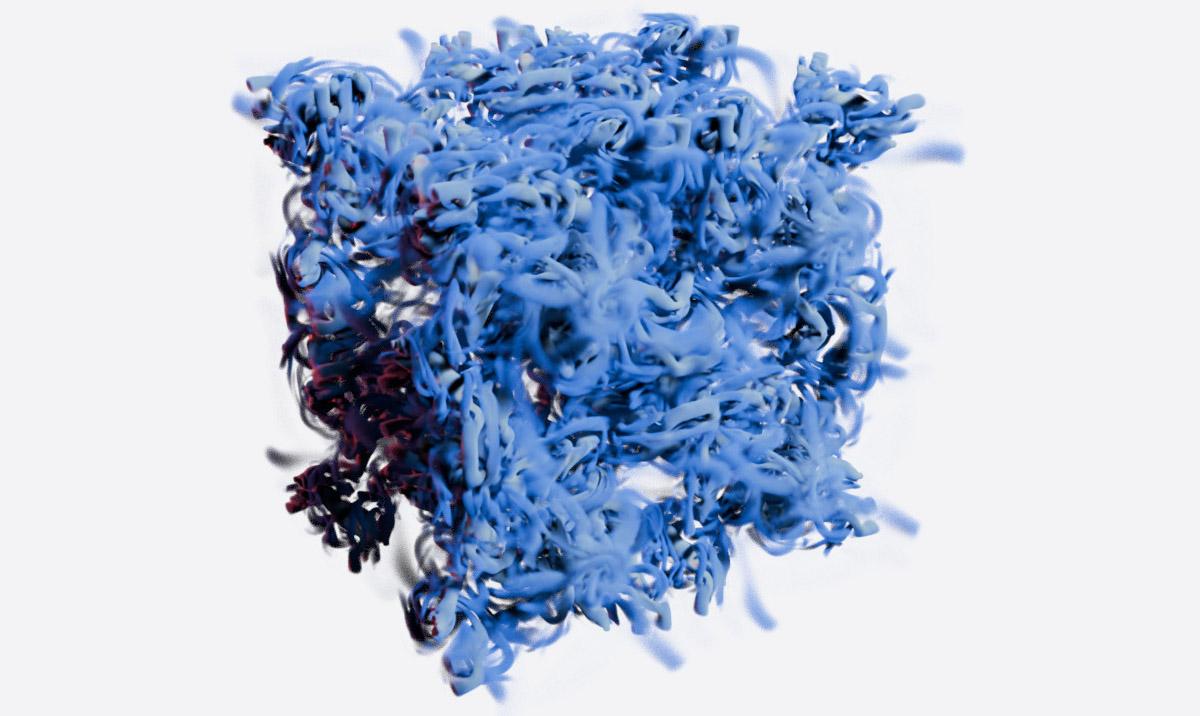

The Lid-Driven Cavity (LDC) and Taylor-Green Vortex (TGV) benchmark problems were used to validate the solver. Comparison with benchmark data from the literature showed good agreement between ASiMoV-CCS and the reference results.

ARCHER2 scaling tests

As the TGV problem can be easily scaled up to a desired size, it was used as the basis for strong and weak scaling tests on ARCHER2. In strong scaling tests we found that ASiMoV-CCS scales well until the local workload is approximately 10k cells per core, in line with expectations. Using this limiting workload to perform weak scaling tests, it was found that the overall solver retained over 50% parallel efficiency up to 64 nodes on ARCHER2. Based on the breakdown of timings reported by ASiMoV-CCS, the limiting component was the pressure solver, which tends to be the bottleneck in incompressible flow solvers.
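For readers unfamiliar with the terminology, the sketch below shows one common way to compute strong and weak scaling efficiency from measured runtimes. The function names and the timings in the usage lines are illustrative assumptions, not measurements from ASiMoV-CCS; the only external fact used is that an ARCHER2 compute node has 128 cores.

```python
def strong_scaling_efficiency(t_ref, n_ref, t, n):
    """Fixed total problem size: ideal runtime falls in proportion to 1/n."""
    return (t_ref * n_ref) / (t * n)


def weak_scaling_efficiency(t_ref, t):
    """Fixed work per core: ideal runtime stays constant as cores are added."""
    return t_ref / t


def cells_per_core(total_cells, cores):
    """Local workload, the quantity that limits strong scaling."""
    return total_cells / cores


# Illustrative numbers only (not results from the paper).
cores_on_64_nodes = 64 * 128  # assuming fully populated ARCHER2 nodes
workload = cells_per_core(81_920_000, cores_on_64_nodes)  # 10k cells per core
strong = strong_scaling_efficiency(t_ref=100.0, n_ref=1, t=30.0, n=4)
weak = weak_scaling_efficiency(t_ref=10.0, t=20.0)  # 0.5, i.e. 50% efficiency
```

Under the weak scaling definition used here, retaining over 50% efficiency means the runtime at the largest scale is less than twice the runtime of the reference run with the same work per core.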

We also tested the performance of parallel I/O using the ADIOS2/BP4 backend. Considering only the bandwidth of the write operations, ASiMoV-CCS was able to scale up to the theoretical I/O bandwidth limit of ARCHER2. However, reordering the data layout is an important performance optimisation in PDE solvers, so before data can be written it must first be rearranged to match the original order on disk. When this preparation is included, the “effective bandwidth” of the entire I/O operation was significantly lower than the write bandwidth. Nevertheless, the total cost of the I/O step was equivalent to less than 1% of a timestep in these tests and was therefore acceptable.
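The distinction between write bandwidth and effective bandwidth can be made concrete with a small sketch. It assumes the preparation step is a permutation from the solver's internal ordering back to the original ordering; the function names, the permutation and the timings are hypothetical and do not reflect the ASiMoV-CCS or ADIOS2 APIs.

```python
def restore_original_order(values, new_to_original):
    """Undo a reordering before writing.

    values[i] is the datum at solver index i, and new_to_original[i]
    is the position of that datum in the original (on-disk) ordering.
    """
    out = [None] * len(values)
    for new_idx, orig_idx in enumerate(new_to_original):
        out[orig_idx] = values[new_idx]
    return out


def write_bandwidth(nbytes, write_seconds):
    """Bandwidth of the write operation alone."""
    return nbytes / write_seconds


def effective_bandwidth(nbytes, prepare_seconds, write_seconds):
    """Bandwidth of the whole I/O step, including data preparation."""
    return nbytes / (prepare_seconds + write_seconds)


# Illustrative values only.
ordered = restore_original_order(["c", "a", "b"], [2, 0, 1])
raw = write_bandwidth(8e9, write_seconds=1.0)
effective = effective_bandwidth(8e9, prepare_seconds=3.0, write_seconds=1.0)
```

In this made-up case the write alone reaches 8 GB/s, while the effective figure is 2 GB/s because the preparation time is charged to the I/O step as well.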

Throughout this initial development of ASiMoV-CCS we have focused on the CPU-based ARCHER2 system. It is clear that future supercomputers will be accelerator-based, and porting and optimising ASiMoV-CCS for such systems will be one of the major efforts at EPCC for the VECTA EPSRC Strategic Prosperity Partnership that follows the ASiMoV project.

Links

The paper, ASiMoV-CCS - A New Solver for Scalable and Extensible CFD & Combustion Simulations, presented at PASC’25 in Brugg-Windisch, Switzerland, describes the design of ASiMoV-CCS, the solver validation and the performance tests on ARCHER2. Authors: Paul Bartholomew (EPCC), Alexei Borissov (EPCC), Christopher Goddard (Rolls-Royce Plc), Shrutee Laksminarasimha (Infosys), Sébastien Lemaire (EPCC), Justs Zarins (EPCC), Michèle Weiland (EPCC).

ARCHER2 is the UK national supercomputing service. It is operated by EPCC and hosted at the Advanced Computing Facility.

Below: Visualisation by Sébastien Lemaire of a Taylor-Green vortex simulation run with ASiMoV-CCS.