Exploring Nekbone on FPGAs at SC20

16 November 2020

This Friday I am presenting work on accelerating Nekbone on FPGAs at the SC20 H2RC workshop. This work is driven by our involvement in the EXCELLERAT Centre of Excellence (CoE), which aims to port specific engineering codes to future exascale machines.

One technology of interest is FPGAs, and a question for us was whether porting the most computationally intensive kernel to an FPGA, and streaming data through it continuously, could provide performance and/or energy benefits over a modern CPU and GPU. We focus on the AX kernel, which applies the Poisson operator and accounts for approximately 75% of the overall code runtime.
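To make the structure of the AX kernel more concrete, here is a minimal, simplified Python sketch of the per-element operation, modelled on the structure of Nekbone's reference `ax_e` routine: take local derivatives of the field in each of the three reference directions, combine them pointwise with the six independent entries of a symmetric geometric tensor, then apply the transposed derivative operators. The flat `r`-fastest indexing, the helper names and the 3-point differentiation matrix `D_GLL3` are illustrative assumptions, not Nekbone's actual data layout or the HLS code from our paper.

```python
# Simplified, illustrative sketch of a Nekbone-style per-element AX operation.
# Assumption: each element has n points per direction, stored as a flat list
# indexed i + n*j + n*n*k (r fastest). This is not the paper's HLS implementation.


def idx(i, j, k, n):
    return i + n * j + n * n * k


def local_grad3(u, d, n):
    """Derivatives of u along r, s and t using the 1D differentiation matrix d."""
    ur, us, ut = [0.0] * n**3, [0.0] * n**3, [0.0] * n**3
    for k in range(n):
        for j in range(n):
            for i in range(n):
                p = idx(i, j, k, n)
                for m in range(n):
                    ur[p] += d[i][m] * u[idx(m, j, k, n)]
                    us[p] += d[j][m] * u[idx(i, m, k, n)]
                    ut[p] += d[k][m] * u[idx(i, j, m, n)]
    return ur, us, ut


def local_grad3_t(wr, ws, wt, d, n):
    """Transposed derivatives: w = Dr^T wr + Ds^T ws + Dt^T wt."""
    w = [0.0] * n**3
    for k in range(n):
        for j in range(n):
            for i in range(n):
                p = idx(i, j, k, n)
                for m in range(n):
                    w[p] += d[m][i] * wr[idx(m, j, k, n)]
                    w[p] += d[m][j] * ws[idx(i, m, k, n)]
                    w[p] += d[m][k] * wt[idx(i, j, m, n)]
    return w


def ax_e(u, g, d, n):
    """w = A_e u: gradient, pointwise geometric factors, transposed gradient.

    g[p] = (g1, ..., g6) holds the independent entries of the symmetric 3x3
    geometric tensor at point p.
    """
    ur, us, ut = local_grad3(u, d, n)
    wr, ws, wt = [], [], []
    for p in range(n**3):
        g1, g2, g3, g4, g5, g6 = g[p]
        wr.append(g1 * ur[p] + g2 * us[p] + g3 * ut[p])
        ws.append(g2 * ur[p] + g4 * us[p] + g5 * ut[p])
        wt.append(g3 * ur[p] + g5 * us[p] + g6 * ut[p])
    return local_grad3_t(wr, ws, wt, d, n)


# Differentiation matrix for the 3 Gauss-Lobatto-Legendre points -1, 0, 1.
D_GLL3 = [[-1.5, 2.0, -0.5], [-0.5, 0.0, 0.5], [0.5, -2.0, 1.5]]

if __name__ == "__main__":
    n = 3
    identity = [(1.0, 0.0, 0.0, 1.0, 0.0, 1.0)] * n**3
    w = ax_e([1.0] * n**3, identity, D_GLL3, n)
    print(max(abs(x) for x in w))  # prints 0.0: constants are in the null space
```

Because the operator has the form "transposed gradient, symmetric tensor, gradient", it is symmetric, and a constant field maps to zero; these are handy sanity checks when restructuring the computation for hardware.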

For this work we used a Xilinx Alveo U280 and High Level Synthesis (HLS), which enables us to write FPGA code in C++ and then synthesise it down to the Hardware Description Language (HDL) level. We initially focused on a single kernel, optimising it as much as possible before scaling up. Running on a 24-core Xeon Platinum (Cascade Lake) CPU, the code delivered 65 GFLOPS for our problem size, whereas our first HLS kernel was over 3000 times slower! Not a great start, but equally plenty of opportunity for optimisation.

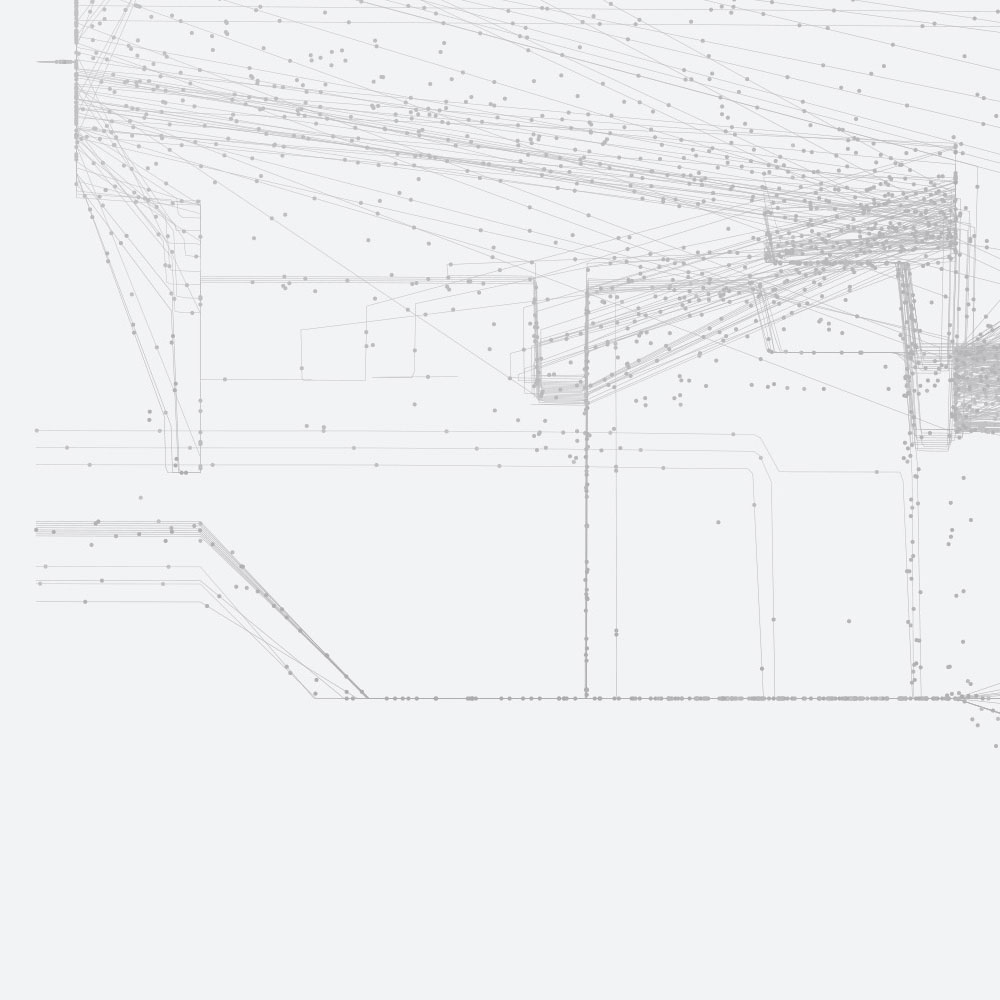

The problem was that our algorithm was still von Neumann-based. Over a number of optimisation steps, which we describe in the paper and will discuss in the presentation, we improved the performance of our HLS code by over 4000 times compared to the initial version. The resulting design is illustrated in the diagram above, which shows our application-specific dataflow machine for Nekbone: different stages work on different elements of data at the same time (keeping them all running concurrently), with some data buffering for reordering.
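As a rough illustration of what data buffering for reordering involves, the Python generator below buffers one element's worth of values arriving in one loop order and re-emits them in another. This is the kind of step needed when a downstream stage (for example, one working along the `t` direction) consumes data in a different order from the one in which the upstream stage produces it. The function name, the `r`-fastest input order and the one-element buffer size are assumptions for illustration only; the paper describes the actual buffering scheme.

```python
def reorder_element(stream, n):
    """Buffer a full n*n*n element arriving with i fastest (index i + n*j + n*n*k)
    and re-emit it with k fastest. Values from an incomplete element are held back."""
    buf = []
    for value in stream:
        buf.append(value)
        if len(buf) == n**3:
            # Emit the buffered element in (j, i, k) loop order, k fastest.
            for j in range(n):
                for i in range(n):
                    for k in range(n):
                        yield buf[i + n * j + n * n * k]
            buf = []


print(list(reorder_element(range(8), 2)))  # prints [0, 4, 1, 5, 2, 6, 3, 7]
```

On an FPGA such a buffer would typically sit in on-chip memory, so that the stages on either side can keep streaming without a round trip to external memory.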

The performance difference demonstrates how important it is to move from the CPU's von Neumann-based algorithm to a dataflow approach, designing our code as a bespoke, application-specific dataflow machine. Perhaps unsurprisingly, we found that the key is keeping all parts concurrently busy while streaming data through, and by doing so we ended up with a single kernel delivering around 77 GFLOPS.
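The contrast between the two styles can be sketched in software. Below, the von Neumann-style function runs each phase over all of the data before starting the next, whereas the dataflow version chains stages as Python generators, so each value passes through read, compute and write before the next value is read. This is only an analogy under our own simplifying assumptions (the stage names and the `2x + 1` arithmetic are placeholders). Python executes the generators one step at a time, but on the FPGA the equivalent stages are separate pieces of hardware connected by streams (in Vitis HLS, typically `hls::stream` objects inside a region marked with `#pragma HLS DATAFLOW`), so they genuinely run concurrently on different data.

```python
# Software analogy only: generators model hardware stages connected by streams.


def von_neumann_style(data):
    # Each phase runs to completion over all data before the next one starts.
    loaded = [x for x in data]
    computed = [2.0 * x + 1.0 for x in loaded]  # placeholder arithmetic
    return computed


def read_stage(data, trace):
    for x in data:
        trace.append(("read", x))
        yield x


def compute_stage(stream, trace):
    for x in stream:
        trace.append(("compute", x))
        yield 2.0 * x + 1.0  # placeholder arithmetic


def write_stage(stream, out, trace):
    for y in stream:
        trace.append(("write", y))
        out.append(y)


def dataflow_style(data):
    trace, out = [], []
    write_stage(compute_stage(read_stage(data, trace), trace), out, trace)
    return out, trace


if __name__ == "__main__":
    out, trace = dataflow_style([1.0, 2.0])
    print(out == von_neumann_style([1.0, 2.0]))  # prints True
    print(trace[:3])  # prints [('read', 1.0), ('compute', 1.0), ('write', 3.0)]
```

Both versions produce the same results; the difference is that the trace of the dataflow version interleaves the stages per value, which is the property a hardware pipeline exploits to keep every stage busy at once.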

Where things become really interesting, however, is scaling up the number of FPGA kernels, and using this to compare against the GPU and explore power efficiency. Our kernel is fairly complex, and scaling it required a number of changes. These included selecting appropriate on-chip memory spaces for different data regions in the kernel, and some trial and error in the place-and-route stage of the tooling to avoid dynamic downclocking or routing errors.

The headline is that we managed to fit four of our FPGA kernels onto the U280, which together delivered around 290 GFLOPS. This is well ahead of the 24-core CPU (65 GFLOPS), but the NVIDIA V100 GPU achieved 407 GFLOPS. Whilst it might seem a little disappointing that our FPGA approach achieved only 71% of the GPU's performance, this was a tough test, because the code has been optimised for GPUs over many years.
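The relative figures follow directly from the reported throughputs. The short calculation below reproduces them and, from the same rounded numbers, gives a crude scaling measure: four kernels deliver roughly 94% of four times the single-kernel throughput. That last ratio is our own derived figure and ignores factors such as differences in achieved clock frequency between the one- and four-kernel builds.

```python
cpu_gflops = 65.0          # 24-core Xeon Platinum (Cascade Lake)
gpu_gflops = 407.0         # NVIDIA V100
fpga_one_kernel = 77.0     # single HLS kernel on the U280
fpga_four_kernels = 290.0  # four kernels on the U280

print(f"FPGA vs GPU: {fpga_four_kernels / gpu_gflops:.0%}")  # prints FPGA vs GPU: 71%
print(f"FPGA vs CPU: {fpga_four_kernels / cpu_gflops:.1f}x")  # prints FPGA vs CPU: 4.5x
# Crude scaling measure: achieved four-kernel throughput vs. 4x one kernel.
print(f"Scaling: {fpga_four_kernels / (4 * fpga_one_kernel):.0%}")  # prints Scaling: 94%
```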

Furthermore, we found the power efficiency results far more compelling. Both the GPU and CPU drew around 170 Watts; in comparison, our four FPGA kernels drew 72 Watts, around 2.4 times less than the other technologies! In terms of power efficiency, our four FPGA kernels deliver 4 GFLOPS/Watt, compared to 2.34 GFLOPS/Watt on the GPU and 0.37 GFLOPS/Watt on the CPU.
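GFLOPS/Watt is simply sustained throughput divided by average power draw. The sketch below reproduces the FPGA figure and, working backwards from the reported GPU and CPU efficiencies, recovers the power draw they imply, which is consistent with the approximate 170 Watt figure. The helper name `gflops_per_watt` is ours, for illustration.

```python
def gflops_per_watt(gflops, watts):
    """Power efficiency: sustained throughput divided by average power draw."""
    return gflops / watts


fpga_eff = gflops_per_watt(290.0, 72.0)
print(f"FPGA: {fpga_eff:.2f} GFLOPS/Watt")  # prints FPGA: 4.03 GFLOPS/Watt

# Working backwards from the reported efficiencies gives the implied power draw.
gpu_watts = 407.0 / 2.34
cpu_watts = 65.0 / 0.37
print(f"Implied GPU power: {gpu_watts:.0f} W, CPU power: {cpu_watts:.0f} W")
# prints Implied GPU power: 174 W, CPU power: 176 W
print(f"Power vs FPGA: {gpu_watts / 72.0:.1f}x (GPU), {cpu_watts / 72.0:.1f}x (CPU)")
# prints Power vs FPGA: 2.4x (GPU), 2.4x (CPU)
```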

Therefore, we consider that this work shows FPGAs in a rather positive light for these sorts of workloads. Of course, there are other things to explore in the future, such as moving from double-precision floating point to reduced precision or even fixed point, as well as the new FPGA technologies that Xilinx has promised for the next 12 months.
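To give a flavour of the fixed-point option, the sketch below quantises a value to a fixed-point representation with F fractional bits and measures the round-trip error, which is at most half of one least-significant bit, that is 2^-(F+1). The helper names and bit widths are hypothetical, and the sketch deliberately ignores the limited integer range (overflow and saturation) that real hardware formats, such as the arbitrary-precision `ap_fixed` types available in HLS, must also handle.

```python
def to_fixed(x, frac_bits):
    """Quantise x to the nearest multiple of 2**-frac_bits, stored as an integer."""
    return round(x * (1 << frac_bits))


def from_fixed(q, frac_bits):
    """Convert the integer representation back to a float."""
    return q / (1 << frac_bits)


def round_trip_error(x, frac_bits):
    """Absolute error introduced by quantising x and converting back."""
    return abs(from_fixed(to_fixed(x, frac_bits), frac_bits) - x)


# Error shrinks as fractional bits are added, always within half an LSB.
for bits in (8, 16, 32):
    err = round_trip_error(0.1, bits)
    print(bits, err, err <= 2.0 ** -(bits + 1))
```

The trade-off for hardware is that narrower fixed-point operators use far fewer FPGA resources than double-precision floating point, allowing more kernels to fit, at the cost of this bounded loss of accuracy.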

In the talk at the H2RC workshop I will discuss the optimisation strategies and the other steps we took in detail. The talk is on Friday the 13th, between 11:05 am and 11:35 am ET. The pre-print of the paper is available on arXiv.

Sixth International Workshop on Heterogeneous High-performance Reconfigurable Computing (H2RC'20)