Mapping change: bringing Scotland’s 19th-Century gazetteers to life

9 September 2025

The Mapping Change research project, funded by the Royal Society of Edinburgh, set out to transform the Historical Gazetteers of Scotland (1803–1901).

In the Mapping Change project [1] we built an AI-driven pipeline, released as an open resource [2,3], that converts this collection from noisy page-level Optical Character Recognition (OCR) text into over 50,000 article-level place descriptions that can be queried, linked, and visualised on maps.

Gazetteers were the reference books of the 19th Century: short entries describing towns, rivers, castles, parishes, and industries. They captured Scotland’s rapid change during industrialisation, urbanisation, and infrastructure growth.

The National Library of Scotland digitised these volumes as 13,000+ OCR-derived XML pages [4]. But OCR encodes words, not articles. A single page might contain half an entry, or three different ones. Place names repeat (for example “Abbey” appears in multiple contexts). Typography shifts between editions. Headers, footnotes, and page numbers are mixed with content.

In short: the Gazetteers were digitised, but not easily usable for comparing or analysing how places (eg Dundee or Leith) evolved over time. That is the challenge the Mapping Change project set out to solve.

From messy OCR to structured knowledge

Our AI pipeline tackles the core obstacle: article segmentation. Each Gazetteer edition uses different conventions, from uppercase inline headers (1803) to bold page-edge headings (1884). Traditional rule-based parsers cannot handle this variation without brittle, edition-specific scripts.
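Whatever detects the headings, entries that run across a page break still have to be joined back together. The sketch below is hypothetical and is not the project's code. It assumes each page has already been split into segments, and that the segmenter flags a segment with `continues_previous` when it is the tail of an entry begun on an earlier page. The `Segment` and `Article` classes and the `stitch` function are illustrative names, and the sample data are placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Segment:
    """A text fragment found on one OCR page (hypothetical schema)."""
    edition: int
    volume: int
    page: int
    headword: Optional[str]  # None when the fragment has no heading of its own
    text: str
    continues_previous: bool = False  # assumed to be flagged by the segmenter


@dataclass
class Article:
    """A whole gazetteer entry, which may span several pages."""
    edition: int
    volume: int
    headword: str
    text: str
    pages: List[int] = field(default_factory=list)


def stitch(segments: List[Segment]) -> List[Article]:
    """Merge page-level segments, given in reading order, into articles."""
    articles: List[Article] = []
    for seg in segments:
        if seg.continues_previous and articles:
            # Tail of an entry that began on an earlier page: extend it.
            current = articles[-1]
            current.text = f"{current.text} {seg.text}"
            if seg.page not in current.pages:
                current.pages.append(seg.page)
        else:
            # Anything else opens a new article with its own metadata.
            articles.append(
                Article(seg.edition, seg.volume, seg.headword or "", seg.text, [seg.page])
            )
    return articles


# Placeholder example: one entry split across pages 12-13, then a new entry.
segments = [
    Segment(1803, 1, 12, "DUNDEE", "start of the Dundee entry"),
    Segment(1803, 1, 13, None, "end of the Dundee entry", continues_previous=True),
    Segment(1803, 1, 13, "LEITH", "the whole Leith entry"),
]
for art in stitch(segments):
    print(art.headword, art.pages)
```

Keeping edition, volume, and page numbers on every stitched article is what allows each entry to be traced back to its source pages later.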

Instead we used ELM [5] (the University of Edinburgh’s AI platform) together with GPT-4o to design edition-specific prompts for detecting and extracting place articles across pages. These prompts let us extract coherent articles across different layouts and store them in new knowledge graphs structured with the Heritage Textual Ontology (HTO) [6]. Each place article includes its text plus metadata such as edition, volume, and page numbers. We then enriched the knowledge graphs with:

- Concept evolution: clustering equivalent places across editions into a single “concept” (eg all descriptions of Leith).

- Geospatial information: extracting coordinates and place types using Named Entity Recognition (NER) techniques.
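This post does not spell out how equivalent places are matched across editions. One plausible approach, sketched here purely as an assumption, is to require that headwords agree after normalisation and that the texts are sufficiently similar, so that two unrelated entries both headed “Abbey” stay apart. Character-level `difflib` similarity stands in for whatever representation the project actually uses, and the 0.5 threshold and all function names are hypothetical.

```python
import re
from difflib import SequenceMatcher
from typing import List, Tuple

ArticleRec = Tuple[int, str, str]  # (edition year, headword, text)


def normalise(headword: str) -> str:
    """Lower-case and drop punctuation so 'LEITH' and 'Leith.' compare equal."""
    return re.sub(r"[^a-z ]", "", headword.lower()).strip()


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for embedding similarity."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def cluster_concepts(articles: List[ArticleRec], threshold: float = 0.5) -> List[List[ArticleRec]]:
    """Greedily group articles from different editions into concepts.

    An article joins an existing concept only if its headword matches the
    concept's first member after normalisation AND the texts are similar
    enough (hypothetical threshold); otherwise it starts a new concept.
    """
    concepts: List[List[ArticleRec]] = []
    for art in sorted(articles):  # earliest edition first
        _, headword, text = art
        for concept in concepts:
            _, head0, text0 = concept[0]
            if normalise(head0) == normalise(headword) and similarity(text0, text) >= threshold:
                concept.append(art)
                break
        else:
            concepts.append([art])  # no match: a new concept
    return concepts


# Placeholder texts, not real Gazetteer entries.
sample = [
    (1803, "LEITH", "seaport of edinburgh on the firth of forth"),
    (1884, "Leith", "seaport of edinburgh on the firth of forth with docks"),
    (1803, "Abbey", "ruins of a medieval monastery by the river"),
    (1884, "ABBEY", "a parish"),
]
for concept in cluster_concepts(sample):
    print([(year, head) for year, head, _ in concept])
```

A greedy pass like this is easy to follow but order-dependent; comparing each new article against only the first member of a concept keeps it simple at the cost of missing gradual drift in wording.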

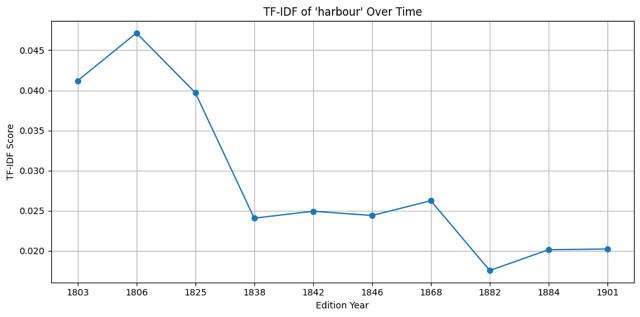

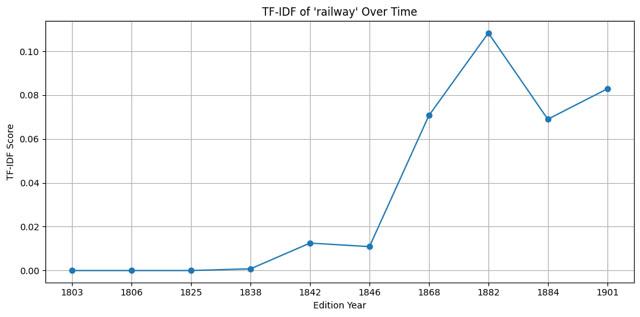

Together, these components form a temporal and semantic knowledge base: a way to track, link, and map Scotland’s 19th-Century geography. This resource makes possible new kinds of analysis [7] that were previously infeasible, for example obtaining a diachronic perspective on concepts as they rise and fall across a century (see Figure 1).

Figure 1: In the early 19th Century, “harbour” dominates, showing Scotland’s dependence on maritime trade. By the late century, “railway” takes over, charting the country’s transition toward industrial infrastructure.
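A trend like the one in Figure 1 comes down to counting a term in each edition and normalising by that edition's size, so that larger editions do not dominate simply by having more text. Here is a minimal sketch, assuming articles are available as (edition year, text) pairs. The function name and the per-10,000-words scale are our own choices rather than the method used in [7], and only exact word matches are counted, so “harbours” is not counted as “harbour”.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def term_trends(
    articles: Iterable[Tuple[int, str]], terms: List[str], per: int = 10_000
) -> Dict[int, Dict[str, float]]:
    """Occurrences of each term per `per` words, for every edition year."""
    counts: Dict[int, Counter] = defaultdict(Counter)  # edition -> term counts
    totals: Counter = Counter()                         # edition -> total words
    wanted = {t.lower() for t in terms}
    for year, text in articles:
        words = re.findall(r"[a-z]+", text.lower())
        totals[year] += len(words)
        counts[year].update(w for w in words if w in wanted)
    return {
        year: {t: per * counts[year][t.lower()] / totals[year] for t in terms}
        for year in sorted(totals)
        if totals[year] > 0
    }


# Placeholder texts, not real Gazetteer entries.
sample = [
    (1803, "the harbour and the harbour quay"),
    (1884, "a railway station near the harbour"),
]
print(term_trends(sample, ["harbour", "railway"]))
```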

Importantly, the pipeline is reusable. The same approach can be applied to other historical text collections, from encyclopedias to newspapers, to extract and segment information (eg newspaper articles).

Frances: making it explorable

For researchers and the public, we integrated everything into Frances [8], our semantic web platform. Frances makes it possible to:

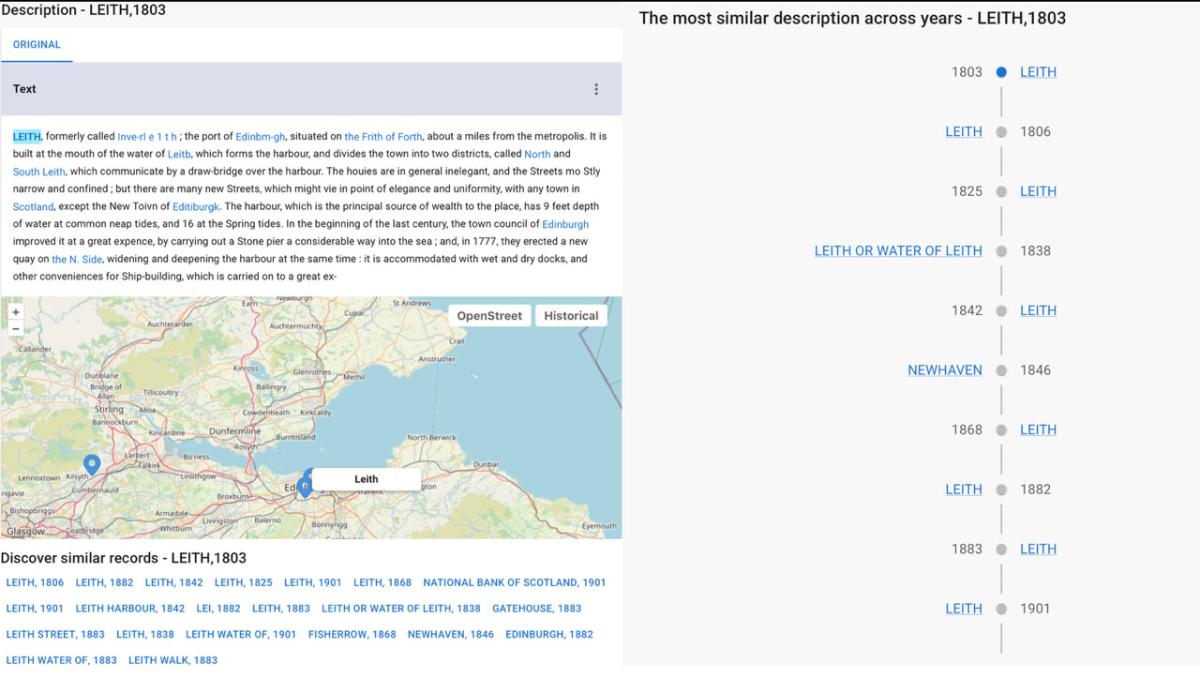

- Search for a place or keyword, choosing which collection to query (eg Gazetteers of Scotland)

- Read articles with highlighted location entities (see Figure 2)

- Explore historical maps side by side with modern ones (see Figure 2)

- Discover similar descriptions of places across editions

- Trace provenance back to the digitisation source

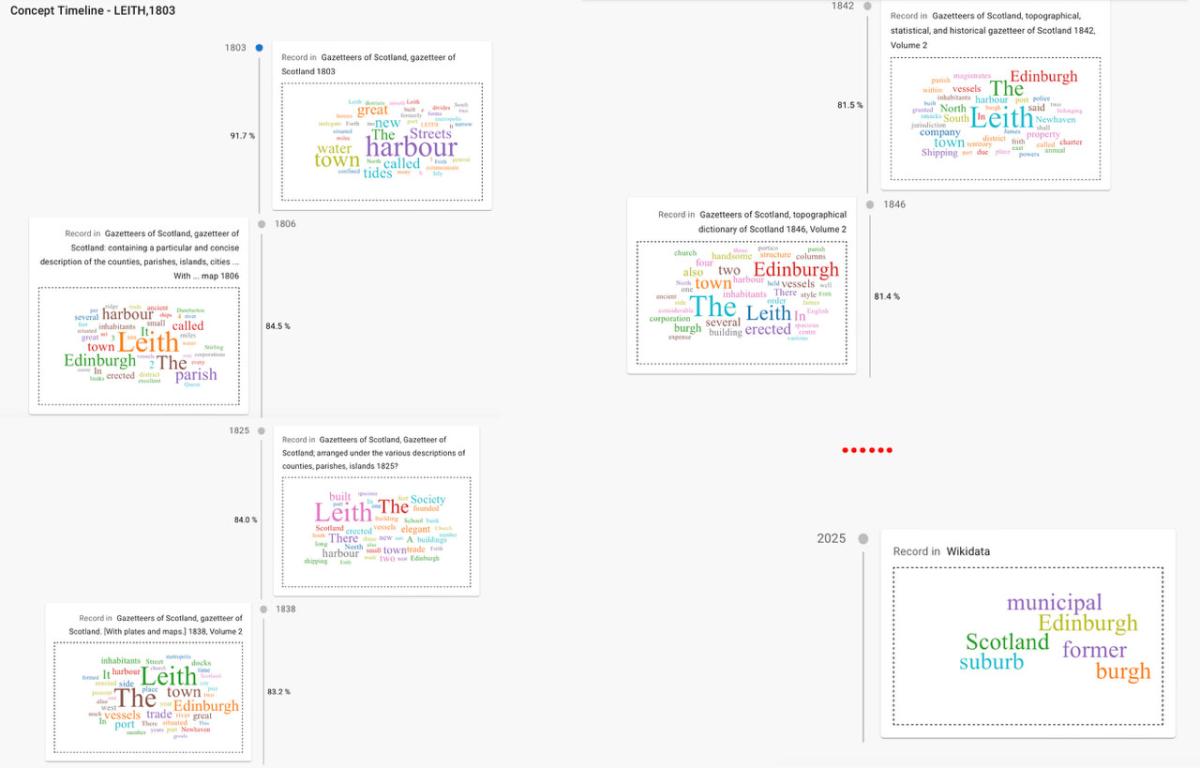

- Browse timelines showing how a place was described over the years, linked to modern resources such as Wikipedia or DBpedia (see Figure 3)
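The post does not say how Frances scores “similar descriptions”. As a stand-in, the sketch below ranks articles by cosine similarity of TF-IDF vectors, a classic lexical technique that is likely simpler than the semantic representations a platform like Frances would use. All function names, the smoothed IDF formula, and the sample texts are our own assumptions.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokens(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    """Sparse TF-IDF vector per document, using a smoothed IDF."""
    tokenised = [tokens(d) for d in docs]
    n = len(docs)
    df = Counter(w for toks in tokenised for w in set(toks))  # document frequency
    idf = {w: math.log((1 + n) / (1 + c)) + 1 for w, c in df.items()}
    return [{w: tf * idf[w] for w, tf in Counter(toks).items()} for toks in tokenised]


def cosine(u: Dict[str, float], v: Dict[str, float]) -> float:
    dot = sum(weight * v.get(w, 0.0) for w, weight in u.items())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def most_similar(query_index: int, docs: List[str], k: int = 3) -> List[Tuple[float, int]]:
    """Top-k (score, index) pairs for the document at `query_index`."""
    vecs = tfidf_vectors(docs)
    scores = [(cosine(vecs[query_index], vec), i) for i, vec in enumerate(vecs) if i != query_index]
    return sorted(scores, reverse=True)[:k]


# Placeholder texts standing in for articles from different editions.
docs = [
    "leith seaport harbour docks",
    "leith seaport with harbour and docks",
    "inland parish with farms and moors",
]
print(most_similar(0, docs, k=2))
```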

By combining semantic search, interactive maps, data linkage, and provenance tracking, Frances bridges the gap between digitised scans and interactive exploration, making 19th-Century Scotland’s geographical record accessible like never before.

Figure 2: Frances interface for Leith, 1803, showing the extracted article text with highlighted locations, a map view, and semantically similar entries over time.

Figure 3: Frances concept timeline for Leith, from its 1803 article onwards, visualised with word clouds to show its semantic shifts across editions and its final alignment with Wikidata.

Why it matters

Mapping Change is the first project to semantically structure the Historical Gazetteers of Scotland (1803–1901) at article level. It demonstrates how combining AI and Semantic Web technologies can turn unstructured OCR from cultural heritage collections into reusable, semantically explorable resources.

- For historians and social scientists, it opens new questions about Scotland’s changing landscape.

- For the public, it makes archival collections engaging and explorable.

- For EPCC, it showcases how data science, AI, Semantic Web, and HPC expertise can serve the humanities.

And the journey does not stop here. As part of the project, Frances is now being migrated to the Edinburgh International Data Facility (EIDF) [9], providing sustainable cloud infrastructure to support large-scale analysis.

References

[2] https://github.com/francesNLP/MappingChange

[3] https://zenodo.org/records/15397756

[4] https://data.nls.uk/data/digitised-collections/gazetteers-of-scotland/

[5] https://information-services.ed.ac.uk/computing/comms-and-collab/elm

[7] https://github.com/francesNLP/MappingChange/blob/main/Notebooks/Exploring_AggregatedDF.ipynb

[9] https://edinburgh-international-data-facility.ed.ac.uk/

Authors

Lilin Yu (EPCC PhD candidate) and Rosa Filgueira (EPCC).