NESS (Next Generation Sound Synthesis project) bows out

12 April 2017

The Next Generation Sound Synthesis project (NESS) has concluded its five-year journey. With true inter-disciplinary focus, genuine user-engagement and over 75 publications overall, the project has been a great success for the University of Edinburgh, and for EPCC in particular.

In 2010, the School of Informatics funded a series of seed projects under the iDEA Lab initiative. One of these supported a collaboration between EPCC and Dr Stefan Bilbao of the University of Edinburgh's School of Music (now in the Acoustics and Audio Group). The aim was to investigate whether Graphics Processing Units (GPUs) could be used to accelerate sound synthesis codes.

Physical modelling synthesis

Digital sound synthesis dates back some 60 years. The early algorithms were simple and computationally efficient, but they could not produce naturalistic sound. Of particular interest to Stefan Bilbao is physical modelling synthesis, and especially Finite Difference Time Domain (FDTD) methods, in which the acoustic space is represented as a grid and the computation evolves step by step in time. These methods can generate sound of great quality, allow the development of models of real or imaginary instruments, and can also model accurately how sound travels through 3-D spaces.
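To make the idea of "a grid that evolves in time" concrete, here is a minimal sketch of an FDTD scheme for the simplest case, the 1-D wave equation (an idealised string with fixed ends). It is not NESS code: the function name, grid size, pluck shape and pickup position are illustrative assumptions. Each time step updates every grid point from its neighbours at the two previous steps, and this explicit scheme is only stable when the Courant number λ = ck/h (wave speed times time step, divided by grid spacing) is at most 1.

```python
import math


def simulate_string(num_points: int = 100, num_steps: int = 441,
                    courant: float = 1.0, pickup: int = 30) -> list[float]:
    """Hypothetical FDTD sketch of a plucked string (1-D wave equation).

    u[l] approximates the string displacement at grid point l. Fixed ends
    (u = 0 at both boundaries) are imposed by never updating the end points.
    """
    if not 0.0 < courant <= 1.0:
        raise ValueError("explicit scheme is unstable for courant > 1")
    lam2 = courant * courant

    # Initial displacement: a raised-cosine "pluck" centred on the string.
    centre, width = num_points // 2, 10
    u_prev = [0.0] * num_points
    for l in range(num_points):
        if abs(l - centre) < width:
            u_prev[l] = 0.5 * (1.0 + math.cos(math.pi * (l - centre) / width))

    # Second-order start for zero initial velocity:
    # u^1 = u^0 + (lambda^2 / 2) * (discrete Laplacian of u^0).
    u = [0.0] * num_points
    for l in range(1, num_points - 1):
        u[l] = u_prev[l] + 0.5 * lam2 * (u_prev[l + 1] - 2.0 * u_prev[l] + u_prev[l - 1])

    output = []
    for _ in range(num_steps):
        u_next = [0.0] * num_points  # end points stay at zero
        for l in range(1, num_points - 1):
            # Leapfrog update: u^{n+1} = 2u^n - u^{n-1} + lambda^2 * Laplacian(u^n)
            u_next[l] = (2.0 * u[l] - u_prev[l]
                         + lam2 * (u[l + 1] - 2.0 * u[l] + u[l - 1]))
        u_prev, u = u, u_next
        output.append(u[pickup])  # read the "sound" at a single grid point
    return output


samples = simulate_string()
```

If each step is taken to be one audio sample at 44.1 kHz, the 441 steps above give 10 ms of output. Every output sample requires a full sweep over the grid, which is where the computational cost of these methods comes from.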

The main drawback of these methods is their computational cost. This limits the usefulness of some instrument models, where real-time execution is highly desirable, and it also makes 3-D modelling expensive.
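A rough way to see why the cost grows so quickly, assuming the simplest explicit scheme on a regular grid in d dimensions (the actual NESS schemes may differ): stability ties the grid spacing h to the time step k = 1/f_s, so raising the sample rate f_s both refines the grid in every direction and adds time steps. For a space of side L:

```latex
h \ge \sqrt{d}\, c\, k = \frac{\sqrt{d}\, c}{f_s}, \qquad
N_{\text{points}} \approx \left(\frac{L}{h}\right)^{d} \propto f_s^{\,d}, \qquad
\text{work per second of audio} \approx f_s \, N_{\text{points}} \propto f_s^{\,d+1}
```

Under these assumptions, for a 3-D room (d = 3) doubling the sample rate multiplies memory by about 8 and computation by about 16.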

NESS is born

With the iDEA Lab pilot suggesting that the codes could be successfully ported to parallel architectures, Stefan Bilbao secured NESS, a prestigious five-year European Research Council fellowship. The project was set up with three teams. The algorithm team at the Acoustics and Audio Group (AAG) developed models for instrument families such as brass, percussion and strings, and for 3-D spaces. Its emphasis was on creating portfolios of models for sound synthesis and room modelling, with some work also on more computationally efficient algorithms. The acceleration team at EPCC ported the Matlab models to C++, optimised and accelerated them using a range of techniques and types of hardware, and made them available through a simple user interface. Finally, NESS had funding for visiting composers, who used these accelerated models, and the studio that Edinburgh College of Art made available to the project, to develop new pieces of music.

Code acceleration

NESS was a very interesting project for EPCC. One of its findings was that some instrument families, eg brass, need little acceleration and can run faster than real time even on our older, now-superseded server. Others, eg the snare-drum code, proved notoriously resistant to acceleration, and the algorithm team carried out research to replace some of the algorithms used.

Instruments

The true showcase of EPCC's contribution to the acceleration effort was the multi-plate code. As published in 2015¹, James Perry of EPCC accelerated the code using a combination of GPU computing, multicore parallelism, vectorisation, banded matrices, merging of operations and mixed precision. Depending on the problem size, the optimised code is still around 240 times slower than real time, but it is between 60 and 80 times faster than the original, unoptimised Matlab code.
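Of the techniques listed, banded matrices and mixed precision are the easiest to illustrate in isolation. The sketch below stores a banded (here tridiagonal) matrix by its diagonals, so only the non-zero band is kept and traversed, and holds the coefficients in single precision while accumulating the product in double precision. It is a hypothetical Python illustration of the general idea only: the function names are invented for this example, and the multi-plate code itself is C++ using AVX on the CPU and the GPU for the airbox.

```python
from array import array


def tridiagonal(n: int, lower: float, main: float, upper: float) -> dict:
    """Hypothetical helper: store an n x n tridiagonal matrix by diagonals.

    The key is the offset d of each diagonal (entries A[i][i + d]).
    array('f') holds 32-bit floats, so coefficients are kept in single precision.
    """
    return {
        -1: array("f", [lower] * (n - 1)),
        0: array("f", [main] * n),
        1: array("f", [upper] * (n - 1)),
    }


def banded_matvec(diagonals: dict, x: list) -> list:
    """Compute y = A x, touching only the stored diagonals.

    Each single-precision coefficient is promoted to a 64-bit Python float
    when read, so the sums are accumulated in double precision.
    """
    n = len(x)
    y = [0.0] * n
    for offset, diag in diagonals.items():
        for j in range(n - abs(offset)):
            row = j if offset >= 0 else j - offset
            y[row] += diag[j] * x[row + offset]
    return y


# 1-D second-difference (Laplacian) stencil, as used in simple FDTD schemes.
A = tridiagonal(4, 1.0, -2.0, 1.0)
print(banded_matvec(A, [1.0, 2.0, 3.0, 4.0]))  # [0.0, 0.0, 0.0, -5.0]
```

Storing a matrix with bandwidth b costs O(nb) memory and work instead of O(n²), and single-precision storage halves the memory traffic, which is often the bottleneck in stencil-like codes.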

Accelerating the NESS multi-plate code: the plates use AVX vectorisation and multi-CPU techniques, while the airbox runs on the GPU.

3-D rooms

EPCC's contributions to the 3-D rooms code were of a slightly different nature. Here, EPCC's Paul Graham ported the code to MPI and ran various demonstration examples of sounds in large rooms on ARCHER, the UK national supercomputer. The code runs more slowly than on the project's GPU server, but much larger rooms are possible because of the sheer amount of memory available. The amount of computation required is significant: a 16 × 16 × 16 m room model, sampled at 44.1 kHz, requires 28 GB of memory, and producing one second of audio output takes of the order of 80 minutes of wall-clock time on 240 processor cores.
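The quoted figures can be sanity-checked with a back-of-the-envelope estimate. The sketch below rests on several assumptions that are not a description of the NESS room code: the simplest scheme on a cubic grid (one of the grid types, alongside FCC and BCC, named in the image credit below), a speed of sound of 343 m/s, grid spacing at the stability limit h = √3·c/f_s, double-precision values and two stored time levels.

```python
import math


def room_estimate(side_m: float = 16.0, fs: float = 44100.0, c: float = 343.0,
                  bytes_per_value: int = 8, time_levels: int = 2) -> dict:
    """Hypothetical estimate of grid size and memory for a cubic room.

    Assumes the standard 7-point explicit scheme on a cubic grid, whose
    stability limit c*k/h <= 1/sqrt(3) gives the smallest spacing h = sqrt(3)*c/fs.
    """
    h = math.sqrt(3.0) * c / fs            # grid spacing in metres
    n_side = math.floor(side_m / h) + 1     # grid points along one edge
    points = n_side ** 3
    memory_gb = points * bytes_per_value * time_levels / 1e9
    return {"h_m": h, "n_side": n_side, "points": points, "memory_gb": memory_gb}


est = room_estimate()
print(f"h = {est['h_m'] * 1000:.1f} mm, {est['n_side']} points per side")
print(f"{est['points']:.2e} grid points, about {est['memory_gb']:.0f} GB")

# Compute cost quoted in the text: 240 cores for about 80 minutes per second of audio.
core_hours = 240 * 80 / 60
print(f"about {core_hours:.0f} core-hours per second of audio")
```

Under these assumptions the grid has about 1.7 billion points and needs roughly 27 GB, the same order as the 28 GB quoted; the compute side works out at about 320 core-hours per second of simulated sound.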

Our website includes some of the sounds generated, but these stereo renditions do not do justice to the excitement the mosquito model generates on an eight- or 16-channel set-up.

Mosquito motion and sound modelling

Original mosquito sound, followed by modelled sound with visual representation of mosquito motion.

Project legacy

NESS was very successful and allowed us to enhance EPCC's GPU and numerical modelling skill set. Collaboration between the teams was excellent, with models iterating between the algorithm and acceleration teams before being made available to the composers. EPCC's Alan Gray was second supervisor for two of the six PhD students associated with NESS, and we are happy that seven of the 77 NESS publications to date involved EPCC.

My personal highlight was realising the value that NESS has added to the day-to-day work of our composers. I will always remember listening to the first NESS-based piece, Gordon Delap's "Ashes to Ashes", at its first public performance in the NESS studio.

It is easy to get carried away by the endless possibilities for sound synthesis, but we see real room for growth in the 3-D room models. These models will need to be improved, refined and validated, but they could make a tremendous impact in various strands of civil engineering. This is where the University of Edinburgh excels: building highly skilled, cross-disciplinary research teams that can influence people's everyday lives. I look forward to future opportunities to build on the success of NESS.

1. J. Perry, A. Gray and S. Bilbao. Optimising a Physical Modelling Synthesis Code Using Hybrid Techniques. Proceedings of the International Conference on Parallel Computing, Edinburgh, September 1-4, 2015.

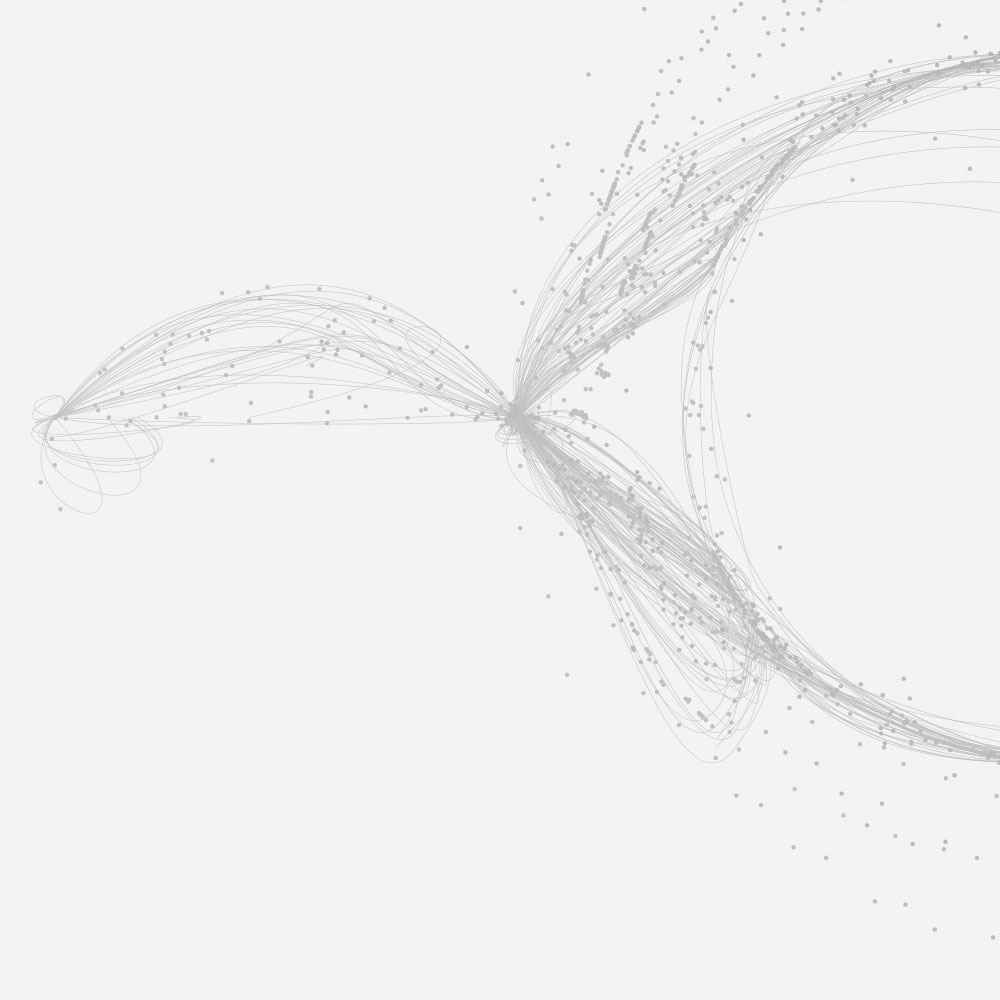

Image at top of page shows part of the illustration "Example of directionally-dependent dispersion error on cubic, FCC, BCC grids", which appears on the NESS website: http://www.ness.music.ed.ac.uk/archives/systems/virtual-room-acoustics.

Links

- NESS website.

- Stereo versions of all the NESS composer pieces.

- Interviews with Gordon Delap and with James, Stefan and all our PhD students are available on our 12-video NESS Project YouTube channel.