Research paper: Better Architecture, Better Software, Better Research

25 September 2025

Prof. Neil Chue Hong writes about the outcomes of a workshop designed to bridge the gap between Research Software Engineering and Software Engineering Research.

This paper had its beginnings in a workshop [1] held at Schloss Dagstuhl, a unique venue in Germany run by the Leibniz Centre for Informatics. It offers a remote residential setting in a castle in the countryside, which is perfect for exploring new ideas and generating new research.

Research Software Engineering (RSEng) is the practice of applying knowledge, methods and tools from software engineering in research. Software Engineering Research (SER) develops methods to support software engineering work in different domains. The practitioners of Research Software Engineering working in academia – Research Software Engineers (RSEs) – are often not trained software engineers. Nevertheless, RSEs are the software experts in academic research. They translate research into software, enable new and improved research, and create software as an important output of research [2].

In principle, the RSEng community and the SER community could benefit from each other. RSEs could leverage software engineering research knowledge to adopt state-of-the-art methods and tools, thereby improving RSEng practice and producing better research software. Conversely, Software Engineering Research could adopt RSEng more comprehensively as a research object, investigating the methods and tools required to apply state-of-the-art software engineering in research contexts [3].

To bridge the current gap between the two areas, the workshop brought together practitioners from both the SER and the RSEng communities for five days of direct collaboration.

One key outcome was the identification of software architecture as an important but often overlooked area of Research Software Engineering. A group of participants from the Dagstuhl workshop took this forward, working with additional collaborators from the development teams of the research software projects whose architectures are studied in the paper.

Accidental software architectures

Research software is often long-lived, becoming more complex as it is reused and extended. Due to the nature of research culture and funding, development teams change frequently. Each new generation of researchers (often PhD students) and Research Software Engineers (RSEs) working on the software brings their own culture, experience, and domain background with them. This may benefit the software but can also lead to loss of knowledge and accrual of technical debt; lack of effort or documentation may result in “accidental” architectures that hinder, rather than support, the maintenance, extensibility, and evolution of the software.

The negative effect of accidental architectures may be amplified by the research context, where value is placed on new features that enable novel research rather than on maintainability and refactoring. Similarly, research funding rewards novelty and provides few resources for maintenance, refactoring, and improving architecture. Yet reusability is not a static property of a “final” version; it is the ability to adapt the software to changing needs with as few resources as possible, including when adapting to new hardware architectures and programming models, such as those for GPUs.

Evaluating software quality

Software engineering research has developed metrics and tools to evaluate different aspects of software quality. Metrics, often derived using static code analysis, are generally helpful in gauging overall software quality. This is especially relevant for developers joining an existing or legacy software project. These insights can serve as a starting point for an in-depth investigation of the software architecture that also draws on the design documentation and consults the original developers. Metrics can highlight potential architectural issues whose resolution should be prioritised to increase software maintainability.
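As a rough illustration of how metrics can help decide what to look at first, the sketch below ranks files by a simple "hotspot" heuristic: how often a file changes multiplied by how complex it is. This heuristic is an assumption made for illustration, similar in spirit to hotspot analyses offered by some tools, but it is not the algorithm of SonarQube, CodeScene, or the method used in the paper. The class and function names, file paths, and numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileMetrics:
    path: str
    commits: int     # how often the file changed, e.g. counted from version-control history
    complexity: int  # any size/complexity measure, e.g. summed cyclomatic complexity


def hotspot_score(m: FileMetrics) -> int:
    """Illustrative heuristic: complex files that also change often score highest."""
    return m.commits * m.complexity


def rank_hotspots(files: list[FileMetrics], top: int = 3) -> list[FileMetrics]:
    """Return the `top` files with the highest hotspot score, highest first."""
    return sorted(files, key=hotspot_score, reverse=True)[:top]


if __name__ == "__main__":
    # Hypothetical metrics for a legacy project.
    project = [
        FileMetrics("solver/assembly.py", commits=120, complexity=85),
        FileMetrics("io/readers.py", commits=200, complexity=12),
        FileMetrics("gui/main_window.py", commits=15, complexity=140),
        FileMetrics("utils/units.py", commits=4, complexity=6),
    ]
    for f in rank_hotspots(project):
        print(f"{f.path:22} score={hotspot_score(f)}")
```

Here the rarely touched but complex GUI module ranks below a simpler file that changes constantly, which is one reason such rankings are best treated as a starting point for investigation rather than a verdict.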

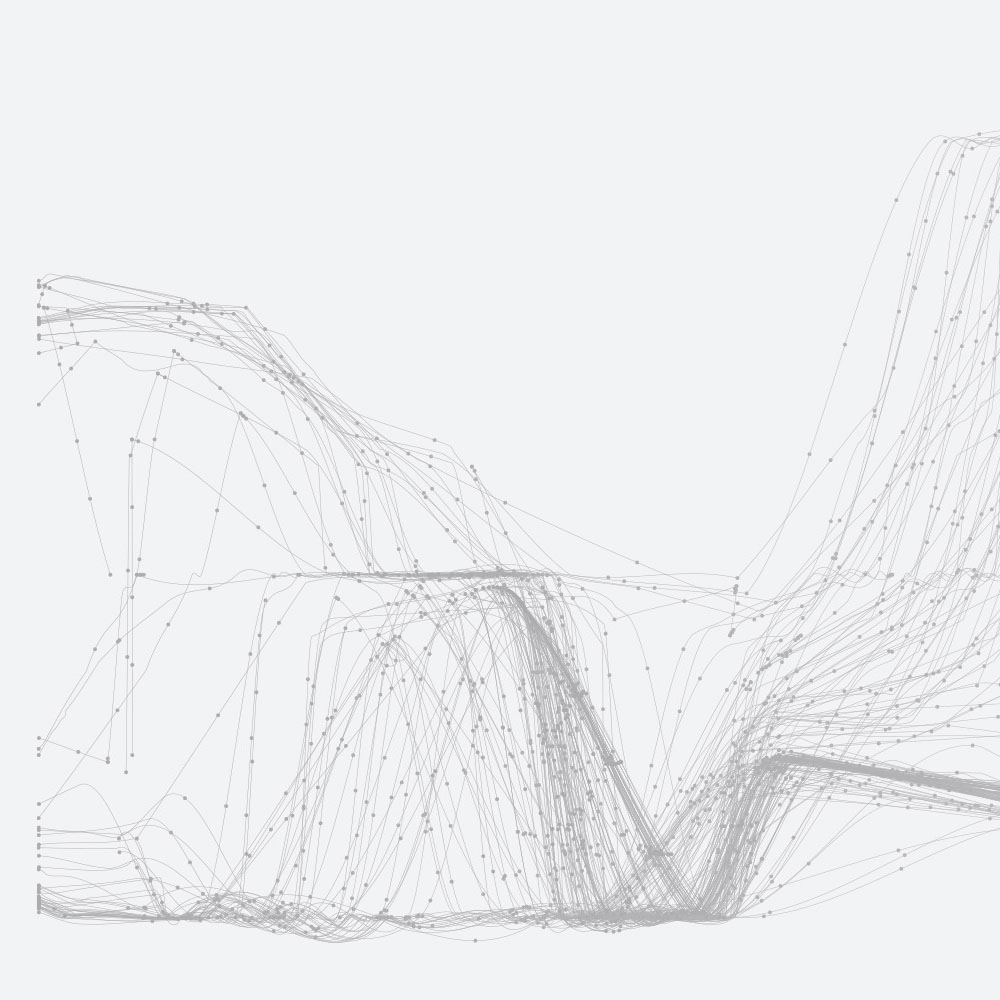

In the paper Better Architecture, Better Software, Better Research, we explored the feasibility of evaluating software maintainability by applying different architecture-related metrics to two research software projects: Hexatomic, a graphical platform for manual and semi-automated multi-layer annotation of linguistic corpus data [4]; and Fluctuating Finite Element Analysis (FFEA), a biomolecular modelling program designed to perform continuum mechanics simulations of globular and fibrous proteins [5]. We did this using two popular static code analysis tools: SonarQube and CodeScene.

Limitations of metrics

Static code analysis tools are helpful in analysing specific aspects of software architecture. Metrics such as cyclomatic and cognitive complexity, along with certain issue types such as code smells, relate to the understandability and maintainability of a codebase. However, established static code analysis tools do not provide all relevant metrics. More complex architecture-related metrics are often context-dependent or hard to quantify, or they require additional tools that offer more in-depth analysis for particular architectural measurements or programming languages. Metrics for more easily measurable attributes are readily available, but improving them does not necessarily improve architecturally relevant attributes.
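To make the idea of a code-level metric concrete, here is a minimal sketch of a McCabe-style cyclomatic complexity count for Python functions, using only the standard-library `ast` module: each function starts at 1 and gains one for every decision point. It is a simplification assumed for illustration (for example, it counts nested functions into their enclosing function and ignores `match` statements). It is not the algorithm implemented by SonarQube or CodeScene, and it does not compute cognitive complexity. The function names and the sample code are hypothetical.

```python
import ast

# Node types treated as one decision point each in this simplified count.
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + number of decision points in `func`."""
    complexity = 1
    for node in ast.walk(func):  # note: also walks into nested functions
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra short-circuit branches
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # each `if` filter in a comprehension is a branch
            complexity += len(node.ifs)
    return complexity


def complexity_report(source: str) -> dict[str, int]:
    """Map each function name in `source` to its approximate cyclomatic complexity."""
    tree = ast.parse(source)
    return {
        node.name: cyclomatic_complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


SAMPLE = '''
def classify(x):
    if x < 0:
        return "negative"
    elif x == 0:
        return "zero"
    return "positive"

def total(values):
    return sum(v for v in values if v is not None)
'''

if __name__ == "__main__":
    print(complexity_report(SAMPLE))  # {'classify': 3, 'total': 2}
```

Such a number describes individual functions: splitting a complex function into several simpler ones lowers it without necessarily improving the modular structure of the codebase as a whole.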

Recommendations

So what does this mean in practice? To achieve better software through better architecture, and better research through better software, we recommend that new research software projects spend time considering suitable designs and design principles for the software they will implement. These should take into account maintainability, extensibility, adaptability, and reusability; in particular, projects should implement modularisation and document architectural design decisions. RSEs need not worry about getting everything “right” at the beginning of development, but should be aware of the balance between the short-term needs of the research and the longer-term implications for the software. Architecture and software design can be iterated alongside development, as new insights into the problem domain may trigger a design iteration.
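One common way to implement modularisation is to place an explicit interface between the scientific model and interchangeable implementation details, so that, for instance, a numerical method or a hardware-specific backend can be replaced later without touching the rest of the code. The minimal sketch below assumes a toy model with constant rates; the names `Integrator`, `ExplicitEuler` and `run` are hypothetical, and the design decision is recorded in a docstring only as an example of lightweight documentation kept next to the code.

```python
from typing import Protocol, Sequence


class Integrator(Protocol):
    """Interface between the model and the numerical method.

    Design decision (example of recording one next to the code): `run` only
    depends on this interface, so a new integrator or a hardware-specific
    implementation can be added without changing `run`.
    """

    def step(self, state: list[float], rates: Sequence[float], dt: float) -> list[float]:
        ...


class ExplicitEuler:
    """One interchangeable implementation: x_{n+1} = x_n + dt * r."""

    def step(self, state: list[float], rates: Sequence[float], dt: float) -> list[float]:
        return [x + dt * r for x, r in zip(state, rates)]


def run(integrator: Integrator, state: list[float], rates: Sequence[float],
        dt: float, n_steps: int) -> list[float]:
    """Advance `state` for `n_steps`; knows nothing about how a step is computed."""
    for _ in range(n_steps):
        state = integrator.step(state, rates, dt)
    return state


if __name__ == "__main__":
    print(run(ExplicitEuler(), state=[0.0, 1.0], rates=[1.0, -0.5], dt=0.1, n_steps=10))
```

Because `Protocol` uses structural typing, any class with a matching `step` method satisfies the interface for a static type checker; no inheritance is required, which keeps new implementations loosely coupled to the existing code.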

For developers joining legacy research software projects, we recommend that RSEs use static code analysis metrics as an entry point to an architectural analysis of the software they will work on. Higher-level architectural views, such as those presented in the paper, can support a basic understanding of the modularity of unfamiliar software.
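As a hedged example of a higher-level view, the sketch below derives a module dependency graph from Python import statements and computes Robert C. Martin's instability metric, I = Ce / (Ca + Ce), where Ce counts the internal modules a module depends on and Ca counts the internal modules that depend on it. This is not the method used in the paper. The module names and sources are hypothetical, and the import handling is deliberately simplified: relative imports are ignored, and dotted names must match the module keys exactly.

```python
import ast
from collections import defaultdict


def import_graph(modules: dict[str, str]) -> dict[str, set[str]]:
    """Map each module name to the set of *internal* modules it imports."""
    graph = {}
    for name, source in modules.items():
        deps = set()
        for node in ast.walk(ast.parse(source)):
            if isinstance(node, ast.Import):
                deps.update(alias.name for alias in node.names)
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                deps.add(node.module)  # absolute `from x import y` only
        # keep only dependencies on modules of this project (drops e.g. `math`)
        graph[name] = {d for d in deps if d in modules and d != name}
    return graph


def instability(graph: dict[str, set[str]]) -> dict[str, float]:
    """Martin's instability I = Ce / (Ca + Ce) per module (0 = stable, 1 = unstable)."""
    afferent = defaultdict(int)  # Ca: number of modules depending on each module
    for deps in graph.values():
        for dep in deps:
            afferent[dep] += 1
    result = {}
    for name, deps in graph.items():
        ce, ca = len(deps), afferent[name]
        result[name] = ce / (ca + ce) if ca + ce else 0.0
    return result


# Hypothetical three-module project.
MODULES = {
    "core": "import math\n",
    "io_utils": "import core\n",
    "cli": "import core\nfrom io_utils import read\n",
}

if __name__ == "__main__":
    print(instability(import_graph(MODULES)))  # {'core': 0.0, 'io_utils': 0.5, 'cli': 1.0}
```

In Martin's interpretation, modules with I close to 0 (here `core`) are depended upon by others and are costly to change, while modules close to 1 (here `cli`) depend on others but nothing depends on them; locating the stable core is one way to start finding one's way around unfamiliar code.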

We also hope that this work encourages Software Engineering Researchers to work more closely with RSEs, to understand their unique needs, and to develop suitable metrics and usable open source tools to support them. In any case, considering the design and architecture of research software, learning and following basic good practices, and leveraging existing tools that support this effort is time well spent. It may save RSEs time and effort over the software lifecycle and helps make research software more maintainable, extensible, adaptable, and reusable, ultimately improving the quality of research outcomes.

Read the paper: Better Architecture, Better Software, Better Research

References

[1] “Research Software Engineering: Bridging Knowledge Gaps” workshop: https://www.dagstuhl.de/24161

[2] J. Cohen, D. S. Katz, M. Barker, N. Chue Hong, R. Haines, and C. Jay, “The Four Pillars of Research Software Engineering,” IEEE Software, vol. 38, no. 1, pp. 97–105, Jan.–Feb. 2021, doi: 10.1109/MS.2020.2973362.

[3] M. Felderer, M. Goedicke, L. Grunske, W. Hasselbring, A.-L. Lamprecht, and B. Rumpe, “Toward Research Software Engineering Research,” Zenodo, Jun. 2023, doi: 10.5281/zenodo.8020525.

[4] S. Druskat, T. Krause, C. Lachenmaier, and B. Bunzeck, “Hexatomic: An extensible, OS-independent platform for deep multi-layer linguistic annotation of corpora,” J. Open Source Softw., vol. 8, no. 86, Art. no. 4825, Jun. 2023, doi: 10.21105/joss.04825.

[5] A. Solernou et al., “Fluctuating finite element analysis (FFEA): A continuum mechanics software tool for mesoscale simulation of biomolecules,” PLoS Comput. Biol., vol. 14, no. 3, Mar. 2018, Art. no. e1005897, doi: 10.1371/journal.pcbi.1005897.