Analysing historical newspapers and books using Apache Spark and Cray Urika-GX

In our October 2018 blog post on Analysing humanities data using Cray Urika-GX, we described how we had been collaborating with Melissa Terras of the College of Arts, Humanities and Social Sciences (CAHSS) at the University of Edinburgh to explore historical newspapers and books using the Alan Turing Institute's deployment of a Cray Urika-GX system ("Urika"). In this blog post we describe additional work we have done to look at the origins of the term "stranger danger", to find reports of the Krakatoa volcanic eruption of 1883, and to explore the concept of "female emigration".

The historical books and newspapers analysed range from the 16th to the early 20th centuries. They had been scanned and converted, using optical character recognition (OCR), into XML documents. We looked at four datasets:

- British Library Books (BLB) from 1510-1899, a collection of 63700 ZIP files (~220GB), one per book. Each ZIP file contains one metadata document per book and one document per page.

- British Library Newspapers (BLN) from 1714-1950, a collection of 179699 XML documents (~424GB), one per issue.

- Times Digital Archive (TDA) newspapers from 1785-2009, a collection of 69699 XML documents (~362GB), one per issue.

- Papers Past New Zealand and Pacific newspapers (NZPP) from 1839-1863, a collection of 13411 XML documents (~4GB), each with up to 22 articles.

The copies of the datasets used are hosted at the University of Edinburgh within the University's DataStore, provided by Information Services' Research Data Services. The authors discovered that some of the datasets were incomplete: parts 4 to 6 of BLN were missing, and the TDA files only spanned 1785-1848. Melissa arranged access to the full datasets, which were delivered on hard drive and then copied into the DataStore using rsync. The DataStore directories were then mounted onto Urika using SSHFS, and their contents were copied, again using rsync, into a connected Lustre high-performance file system. This is necessary because Urika's compute nodes have no network access and so cannot access the DataStore via its network mount points. Equally importantly, data movement and network transfers need to be minimised to ensure efficient processing.

"defoe" for analysis of historical books and newspapers

In our original work, we extended two Python text analysis packages originally developed at University College London (UCL): i_newspaper_rods, for analysing newspapers, and cluster-code, for analysing books. These packages run queries across the datasets via Apache Spark. Each package provides its own object model: i_newspaper_rods represents newspapers in terms of issues and articles, and cluster-code represents books in terms of ZIP file archives, books and pages. The object models reflect how the data is physically stored.
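To make the idea of a "physical" object model concrete, the sketch below mirrors the two hierarchies just described using Python dataclasses. All class, field and function names are hypothetical illustrations, not the actual classes in i_newspaper_rods or cluster-code. Note how each model needs its own word-counting function because the hierarchies differ.

```python
from dataclasses import dataclass, field
from typing import List


# Newspaper object model (hypothetical names): one XML document per issue.
@dataclass
class Article:
    title: str
    words: List[str]


@dataclass
class Issue:
    filename: str
    date: str
    articles: List[Article] = field(default_factory=list)


# Book object model (hypothetical names): one ZIP archive holding a
# metadata document plus one document per page.
@dataclass
class Page:
    number: int
    words: List[str]


@dataclass
class Book:
    title: str
    year: int
    pages: List[Page] = field(default_factory=list)


@dataclass
class Archive:
    filename: str
    books: List[Book] = field(default_factory=list)


def count_words_in_issue(issue: Issue) -> int:
    # Newspaper queries walk issue -> articles -> words.
    return sum(len(article.words) for article in issue.articles)


def count_words_in_archive(archive: Archive) -> int:
    # Book queries walk archive -> books -> pages -> words.
    return sum(len(page.words) for book in archive.books for page in book.pages)
```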

While these packages share common code and behaviour, they are implemented and run in slightly different ways. They also include code to support high-performance computing (HPC) environments specific to UCL. It was proving challenging for the authors to continue to develop both codes, especially since they had no way to test the HPC-specific code. Consequently, i_newspaper_rods and cluster-code were merged and refactored into a single new code, defoe.

defoe allows the BLB, BLN, TDA and NZPP datasets to be queried from a single command-line interface and in a consistent way. Usability was also improved and the HPC environment-specific code was removed. The authors developed defoe as part of Living with Machines, a collaboration between the Alan Turing Institute, the British Library and a number of universities, funded by the Arts and Humanities Research Council and UK Research and Innovation. Living with Machines seeks to understand the impact of technology across society during the Industrial Revolution by studying newspapers, journals, pamphlets, census data and other publications from that era.

As part of our work with Melissa, defoe was extended with a new object model to support the NZPP dataset. The object model represents NZPP XML documents as collections of articles and the individual articles within them. Again, the object model reflects how the data is physically represented.

To complement defoe, a new repository of Jupyter notebooks, defoe_visualization, was also developed. These notebooks allow researchers to explore the query results and to post-process them to extract the information they need. The notebooks are accompanied by data files containing the query results produced by the authors.

Reporting the Krakatoa eruption of 1883

Krakatoa (Krakatau in Indonesian) erupted on 26 and 27 August 1883, and was one of the most spectacular volcanic eruptions of modern times. Melissa was interested in references to the eruption in newspapers published during 1883. A query was run to search for occurrences of any word in a list of keywords and to return information on each matching article, including its title, the matching keyword, the article text and the filename. Results were grouped by the publication dates of the newspapers. This query was run with the keywords "krakatoa" and "krakatau" across BLN and TDA. Within 1883 itself, 62 articles referencing "Krakatoa" or "Krakatau" were identified within BLN and 17 within TDA. This query motivated the development of an object model for NZPP. However, it was discovered during this work that this dataset predates 1883, spanning only 1839-1863.
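The keyword query can be sketched in plain Python as a per-article matching function plus a group-by-date step. This is a minimal illustration, not defoe's code: the flattened `(date, filename, title, text)` record is a hypothetical input format, and normalisation follows the "keep only a-z/A-Z and lower-case" rule described later in this post.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Sequence, Tuple

# Hypothetical flattened article record: (date, filename, title, text).
ArticleRecord = Tuple[str, str, str, str]


def normalise(word: str) -> str:
    # Keep only a-z/A-Z characters and lower-case the result.
    return re.sub(r"[^a-zA-Z]", "", word).lower()


def keyword_matches(record: ArticleRecord,
                    keywords: Sequence[str]) -> Iterator[Tuple[str, dict]]:
    """Yield (date, match) once for each keyword that occurs in the article."""
    date, filename, title, text = record
    words = {normalise(token) for token in text.split()}
    for keyword in keywords:
        if normalise(keyword) in words:
            yield date, {"title": title, "keyword": keyword,
                         "text": text, "filename": filename}


def articles_by_date(records: Iterable[ArticleRecord],
                     keywords: Sequence[str]) -> Dict[str, List[dict]]:
    # Group all matches by the publication date of their newspaper.
    grouped: Dict[str, List[dict]] = defaultdict(list)
    for record in records:
        for date, match in keyword_matches(record, keywords):
            grouped[date].append(match)
    return dict(grouped)
```

Because `keyword_matches` handles one article at a time, the same function could, in principle, be passed to a Spark RDD as `rdd.flatMap(lambda r: keyword_matches(r, keywords)).groupByKey()`, so that matching runs in parallel across the cluster.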

Origin of the term "stranger danger"

A social sciences colleague of Melissa's was interested in how the phrase "stranger danger" has been used over time, and where and when it might have originated. A query was implemented which searches for two words (here, "stranger" and "danger") that are co-located, that is, separated by no more than a given number of intervening words (for example, 12). For each match, information about the matching book or issue was returned, including the book or article title, the matching words, the intervening words, and the book or newspaper file name. These results were grouped by the publication dates of the books or newspapers. This query was run across BLB with the words "stranger" and "danger" and a maximum of 12 intervening words.
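The co-location test at the heart of this query can be sketched as follows. This is a minimal illustration, not defoe's implementation; it assumes the first word must precede the second and reports the nearest following occurrence of the second word, along with the intervening words.

```python
import re
from typing import List, Tuple


def normalise(word: str) -> str:
    # Keep only a-z/A-Z characters and lower-case the result.
    return re.sub(r"[^a-zA-Z]", "", word).lower()


def find_colocations(text: str, first: str, second: str,
                     max_gap: int) -> List[Tuple[str, List[str], str]]:
    """Return (first, intervening words, second) for each occurrence of
    `first` followed by `second` with at most `max_gap` words in between."""
    # Normalise, dropping tokens that become empty (e.g. pure punctuation).
    words = [w for w in (normalise(t) for t in text.split()) if w]
    first, second = normalise(first), normalise(second)
    matches = []
    for i, word in enumerate(words):
        if word != first:
            continue
        # j - i - 1 intervening words, so j may go up to i + max_gap + 1.
        for j in range(i + 1, min(i + max_gap + 2, len(words))):
            if words[j] == second:
                matches.append((words[i], words[i + 1:j], words[j]))
                break
    return matches
```

For example, `find_colocations("Beware the stranger, for there is danger on the road.", "stranger", "danger", 12)` finds one match with the intervening words `["for", "there", "is"]`.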

Exploring female emigration

Melissa was also interested in exploring the concept of female emigration. For this, existing queries were modified and new queries developed, and these were run over both BLN and TDA.

"keysentences by year" searches for key sentences (or phrases) and returns, for each sentence, the number of articles that include it. The results are grouped by year. This query was used to search for references to female emigration societies (eg "The East End Emigration Fund" or "The South African Colonisation Society").
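A minimal sketch of the key-sentence count is shown below, assuming articles arrive as hypothetical `(year, text)` pairs and that a "sentence" matches when its normalised words appear as a contiguous run in the article. Each article is counted at most once per sentence. This is an illustration, not defoe's code.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Tuple


def normalise_words(text: str) -> List[str]:
    # Keep only a-z/A-Z characters, lower-case, and drop empty tokens.
    return [w for w in (re.sub(r"[^a-zA-Z]", "", t).lower() for t in text.split()) if w]


def contains_sentence(words: List[str], sentence_words: List[str]) -> bool:
    # True if sentence_words occurs as a contiguous run within words.
    n = len(sentence_words)
    return any(words[i:i + n] == sentence_words for i in range(len(words) - n + 1))


def keysentences_by_year(articles: Iterable[Tuple[int, str]],
                         sentences: Sequence[str]) -> Dict[int, Counter]:
    """Return {year: Counter({sentence: number of articles containing it})}."""
    targets = {s: normalise_words(s) for s in sentences}
    counts: Dict[int, Counter] = {}
    for year, text in articles:
        words = normalise_words(text)
        for sentence, target in targets.items():
            if target and contains_sentence(words, target):
                counts.setdefault(year, Counter())[sentence] += 1
    return counts
```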

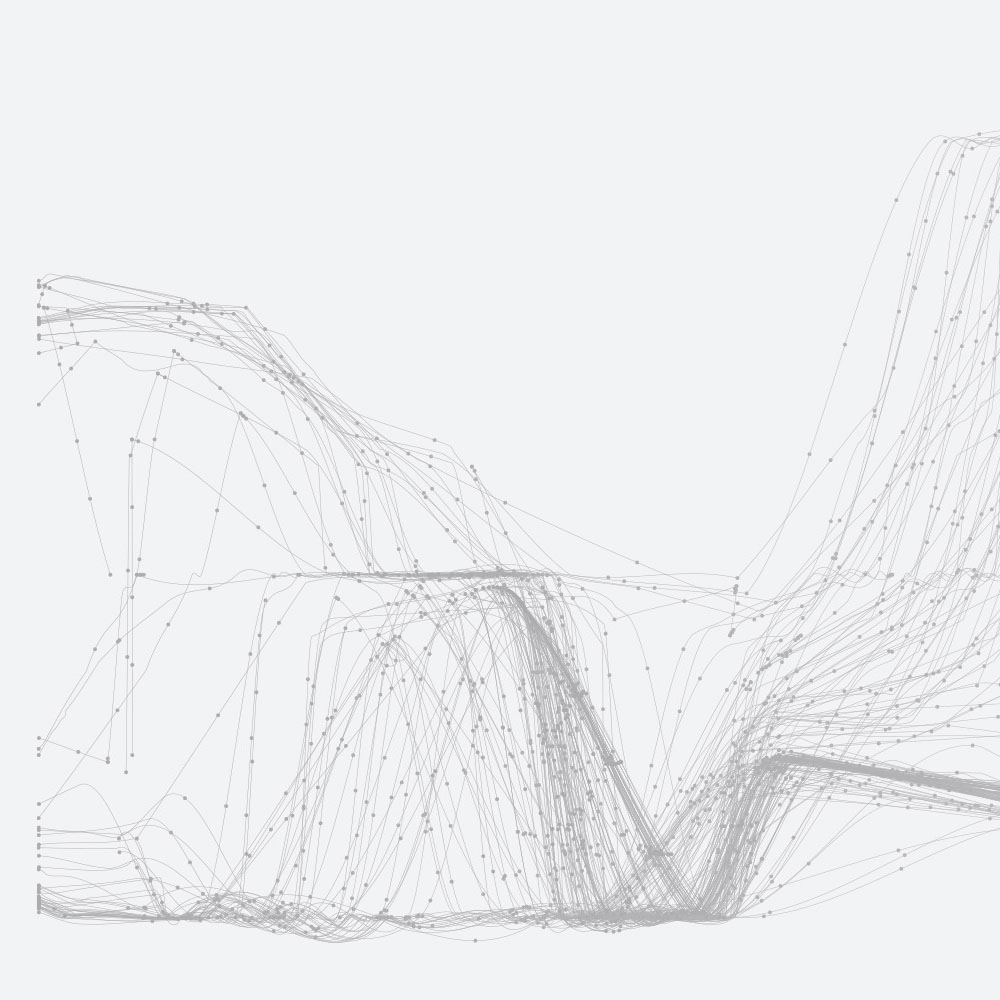

"target_and_keywords_by_year" and "target_and_keywords_count_by_year" were used to search BLN and TDA for occurrences of a target word, "emigration", co-located with keywords from a taxonomy of terms relating to "female emigration" (eg "colony", "service", "female" or "governess"). The results were later analysed in Jupyter notebooks, using n-grams to visualise the occurrences of the taxonomy terms (see Figure 1).
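The counting step, and the kind of normalisation needed before plotting n-grams like Figure 1, can be sketched as below. Two assumptions are made here and are not confirmed by this post: "co-located" is taken to mean "appearing in the same article", and "normalised frequency" is taken to mean a keyword's count divided by the total number of words for that year. Input articles are hypothetical `(year, preprocessed words)` pairs.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def target_and_keywords_count_by_year(
        articles: Iterable[Tuple[int, List[str]]],
        target: str,
        keywords: Sequence[str]) -> Dict[int, Counter]:
    """Count keyword occurrences, per year, in articles containing the target."""
    keyword_set = set(keywords)
    counts: Dict[int, Counter] = defaultdict(Counter)
    for year, words in articles:
        if target not in words:
            continue  # assumption: co-located means "in the same article"
        counts[year].update(w for w in words if w in keyword_set)
    return dict(counts)


def normalised_frequencies(counts: Dict[int, Counter],
                           total_words_by_year: Dict[int, int]) -> Dict[int, Dict[str, float]]:
    # Divide by each year's total word count so years with more text are comparable.
    return {year: {kw: n / total_words_by_year[year] for kw, n in kw_counts.items()}
            for year, kw_counts in counts.items()}
```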

"target_concordance_collocation_by_date" searches for occurrences of the target word, "emigration", co-located with keywords from the taxonomy of terms relating to "female emigration". This query returns each keyword together with its concordance (the text surrounding the keyword; in this case, the ten words preceding and following it). The results are grouped by year. This query was also used to search for references to female emigration societies.
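A concordance with a fixed window can be sketched as follows. As in the previous sketch, co-location is assumed to mean "in the same article", and input articles are hypothetical `(year, preprocessed words)` pairs; the `window` parameter defaults to the ten words either side used in this work.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def target_concordance(words: List[str], target: str, keywords: Sequence[str],
                       window: int = 10) -> List[Tuple[str, str]]:
    """For an article containing `target`, return (keyword, concordance) pairs,
    where the concordance is up to `window` words either side of the keyword."""
    if target not in words:
        return []
    keyword_set = set(keywords)
    results = []
    for i, word in enumerate(words):
        if word in keyword_set:
            left = words[max(0, i - window):i]
            right = words[i + 1:i + 1 + window]
            results.append((word, " ".join(left + [word] + right)))
    return results


def concordance_by_year(articles: Iterable[Tuple[int, List[str]]], target: str,
                        keywords: Sequence[str],
                        window: int = 10) -> Dict[int, List[Tuple[str, str]]]:
    # Group concordances by year, skipping articles with no matches.
    grouped: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for year, words in articles:
        hits = target_concordance(words, target, keywords, window)
        if hits:
            grouped[year].extend(hits)
    return dict(grouped)
```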

Each of the queries allows the user to specify the preprocessing that should be applied to words in documents and to words provided as part of the query (for example, the keywords). There are three types of preprocessing available: normalisation, stemming and lemmatisation. Normalisation removes all characters other than a-z and A-Z and converts the words to lower case; all queries in defoe normalise words by default. Stemming reduces normalised words to their word stems (for example, "books" becomes "book" and "looked" becomes "look"). Lemmatisation reduces inflectional forms of normalised words to a common base form. Unlike stemming, lemmatisation does not simply chop off inflections; instead, it uses lexical knowledge bases to obtain the correct base forms of words. The Python Natural Language Toolkit (NLTK) implementations of stemming and lemmatisation are used. When running the queries described above, normalisation and lemmatisation were applied.

Figure 1: N-gram plot of the normalised frequencies of the words "daughter", "engagement" and "empire" across the TDA corpus.
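The three preprocessing options described above can be sketched as a single function. Normalisation follows the rule stated in this post; stemming and lemmatisation are passed in as callables so the sketch runs without NLTK installed. The `Preprocess` enum and `preprocess_words` function are hypothetical names, not defoe's API.

```python
import re
from enum import Enum
from typing import Callable, Iterable, List, Optional


class Preprocess(Enum):
    NORMALISE = "normalise"
    STEM = "stem"
    LEMMATISE = "lemmatise"


def normalise(word: str) -> str:
    # Remove every character other than a-z and A-Z, then lower-case.
    return re.sub(r"[^a-zA-Z]", "", word).lower()


def preprocess_words(words: Iterable[str],
                     mode: Preprocess = Preprocess.NORMALISE,
                     stem: Optional[Callable[[str], str]] = None,
                     lemmatise: Optional[Callable[[str], str]] = None) -> List[str]:
    """Normalise words (dropping any left empty), then optionally stem or lemmatise."""
    normalised = [w for w in map(normalise, words) if w]
    if mode is Preprocess.STEM:
        if stem is None:
            raise ValueError("stemming requested but no stemmer supplied")
        return [stem(w) for w in normalised]
    if mode is Preprocess.LEMMATISE:
        if lemmatise is None:
            raise ValueError("lemmatisation requested but no lemmatiser supplied")
        return [lemmatise(w) for w in normalised]
    return normalised
```

With NLTK installed, `PorterStemmer().stem` and `WordNetLemmatizer().lemmatize` (both from `nltk.stem`) could be passed in; the lemmatiser also needs the WordNet data, obtainable with `nltk.download("wordnet")`. Which NLTK stemmer defoe uses is not stated here, so the Porter stemmer is only an example.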

Future work

Not all researchers have access to Urika. It is therefore useful to enable researchers to run defoe in other HPC environments where, unlike on Urika, Spark is not pre-installed and configured. As part of the BioExcel project, Rosa developed scripts to deploy and configure a Spark cluster on demand as part of a traditional batch job submission (described in Rosa's blog post on Spark-based genome analysis on Cray-Urika and Cirrus clusters). These were tested on the Cirrus HPC service. As part of an internal project at the University of Edinburgh, Rosa worked with Melissa and David Fergusson (Research Data Services, Information Services, University of Edinburgh) to customise her scripts to run on the University's Linux computing cluster (EDDIE).
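One step any on-demand Spark deployment inside a batch job must take is deciding, from the nodes the scheduler allocated, which node runs the Spark master and which run workers. The sketch below illustrates that step only; it is an assumption about the general approach, not Rosa's scripts. It takes a node list (one hostname per line, possibly repeated once per core, as in some schedulers' node files) and uses Spark's default standalone master port, 7077.

```python
from typing import Iterable, List, Tuple


def plan_spark_cluster(nodefile_lines: Iterable[str],
                       port: int = 7077) -> Tuple[str, List[str], str]:
    """Choose a Spark master and workers from a batch job's node list.

    Returns (master host, worker hosts, master URL).
    """
    # De-duplicate hostnames while preserving the scheduler's order.
    hosts = list(dict.fromkeys(line.strip() for line in nodefile_lines if line.strip()))
    if not hosts:
        raise ValueError("node list contains no hosts")
    master = hosts[0]
    # On a single-node job, the master node must also run a worker.
    workers = hosts[1:] or [master]
    return master, workers, f"spark://{master}:{port}"
```

In a PBS job, for example, this could be called with `open(os.environ["PBS_NODEFILE"])`. A job script would then start the master with Spark's `sbin/start-master.sh`, start a worker on each worker host pointing at the master URL (`sbin/start-worker.sh`, called `start-slave.sh` in Spark releases before 3.1), and run the analysis with `spark-submit --master` set to that URL.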

Owing to its origins as two separate codes, and its later extension to support NZPP, defoe now has three object models: one for BLN and TDA, which represents newspapers as issues and articles; one for BLB, which represents books as ZIP archives, books and pages; and one for NZPP, which represents collections of articles and single articles. In each case, the object model reflects how the data is physically represented.

There are two problems with this design. First, the object models are based upon the physical representation of the data, not its conceptual representation. For example, BLN, TDA and NZPP all hold newspaper data, but two different object models are used. Conversely, a newspaper dataset used within Living with Machines stores newspapers as metadata documents and page documents (like BLB), and so can easily be queried, but only by using the BLB object model. Second, the Python code implementing the queries is also object model-specific. For example, there are three queries to count the number of words, one for each object model.

It would be very useful to introduce conceptual object models (eg books/pages, issues/articles) and a layer to parse the physical representations of the datasets into these conceptual object models. For example, newspapers from BLN, TDA, NZPP and the newspaper dataset used within Living with Machines would all be mapped to a conceptual model of issues/articles. This would allow query code to be written against conceptual models (eg "count number of articles"). A base model could represent information common to all documents (eg books, newspapers etc) with a complementary set of basic queries (eg "count number of words"). Development of these conceptual object models will be done as part of Living with Machines.
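The proposed layering might look something like the following sketch: a base `Document` model with a basic query written once, a conceptual `Issue`/`Article` model with its own queries, and one small adapter per physical format. All names and the dictionary input formats are hypothetical; this illustrates the idea rather than the design that Living with Machines will adopt.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Tuple


class Document(ABC):
    """Base conceptual model: anything whose words can be enumerated."""

    @abstractmethod
    def words(self) -> Iterator[str]:
        ...


@dataclass
class Article:
    title: str
    text: str


@dataclass
class Issue(Document):
    """Conceptual newspaper model: an issue made of articles."""
    newspaper: str
    date: str
    articles: List[Article] = field(default_factory=list)

    def words(self) -> Iterator[str]:
        for article in self.articles:
            yield from article.text.split()


def count_words(doc: Document) -> int:
    # Basic query, written once against the base model.
    return sum(1 for _ in doc.words())


def count_articles(issue: Issue) -> int:
    # Conceptual query: works for every newspaper source mapped onto Issue.
    return len(issue.articles)


# Parsing layer: one adapter per physical format (dict keys are hypothetical).
def issue_from_issue_record(record: dict) -> Issue:
    """BLN/TDA-style input: one record per issue, listing its articles."""
    return Issue(record["newspaper"], record["date"],
                 [Article(a["title"], a["text"]) for a in record["articles"]])


def issues_from_article_collection(records: List[dict]) -> List[Issue]:
    """NZPP-style input: standalone articles, grouped by newspaper and date."""
    grouped: Dict[Tuple[str, str], List[Article]] = {}
    for r in records:
        grouped.setdefault((r["newspaper"], r["date"]), []).append(
            Article(r["title"], r["text"]))
    return [Issue(paper, date, arts) for (paper, date), arts in sorted(grouped.items())]
```

Adding a new newspaper source would then mean writing one adapter, after which every issue/article query and every basic query works on it unchanged.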

A detailed report on our work, also titled "Analysing Historical Newspapers and Books Using Apache Spark and Cray Urika-GX", is available for download (PDF) from the Alan Turing Institute Research Computing Service website. A research paper, "defoe: A Spark-based Toolbox for Analysing Digital Historical Textual Data", has recently been accepted for publication at the IEEE eScience Conference 2019, to be held in San Diego, USA, in September.

Acknowledgements

This work, which ran from March 2018 to March 2019, was supported by funding from Scottish Enterprise to the University of Edinburgh as part of the Alan Turing Institute-Scottish Enterprise Data Engineering Programme.