Concluding ASiMoV at EPCC

28 November 2024

The EPSRC Strategic Prosperity Partnership for Advanced Simulation and Modelling of Virtual Systems (ASiMoV) concluded with a final project meeting hosted by Rolls-Royce in Derby.

Figure 1 The Rolls-Royce Trent 1000 exhibit at the Derby Museum of Making. Paul Bartholomew, Creative Commons Attribution (CC BY) https://creativecommons.org/licenses/by/4.0/.

The ASiMoV project was a large 6-year industrial-academic collaboration led by Prof Leigh Lapworth (Industrial PI, Rolls-Royce) and Prof Mark Parsons (Academic PI, EPCC). Its overarching goal was to develop the computational tools that will enable the simulation of aeroengines with sufficient accuracy, fidelity and reliability of prediction that they can be virtually certified. This capability would represent a major benefit for UK industry, leading to significant savings in the development of new products and enabling the exploration of revolutionary designs.

To achieve this objective, advances were required across a range of topics in large-scale scientific computing. Each branch of research was led by one of the project partners:

- Rolls-Royce led the industrial integration and trusted computing components

- The University of Cambridge led solver development

- EPCC led research into extreme-scale computing and application performance

- The University of Oxford investigated robustness of physics

- The University of Warwick led research into lean computing

- The University of Bristol led the future computing platforms topic.

Looking at the Trent 1000 engine shown in Figure 1, it is easy to see the complexity of the myriad interacting components that must all work together as the engine runs. In a similar way, close collaboration was vital to the success of the ASiMoV project, with Rolls-Royce’s industrial integration work serving as the common point that brought the work packages together.

The EPCC team

The ASiMoV project involved many EPCC staff over the years, including Paul Bartholomew, Evgenij Belikov, Nico Bombace, Alexei Borissov, Erich Essmann, David Henty, Adrian Jackson, Sébastien Lemaire, Laura Moran, Rupert Nash, Justs Zarins, Michèle Weiland (Co-I and EPCC lead) and Mark Parsons (PI). In addition, two PhD students, Shrey Bhardwaj and Chris Stylianou, conducted their studies at EPCC as part of ASiMoV, focusing on I/O performance and sparse linear algebra optimisation respectively.

Our work included analysing the performance of the project’s simulation codes at scale on both ARCHER and ARCHER2, and working with the other groups to turn these analyses into optimisations. We also worked on multi-physics coupling of those codes at scale; coupling is essential for simulating the interactions between different jet engine components. In the latter part of the project, much of EPCC’s work revolved around the development of ASiMoV-CCS.

ASiMoV-CCS

ASiMoV-CCS is a general-purpose Computational Fluid Dynamics (CFD) code being developed at EPCC in collaboration with Rolls-Royce to simulate the combustion chamber of a jet engine.

A novel aspect of the design is its use of submodules, a feature of modern Fortran introduced in the Fortran 2008 standard, to create a highly modular software architecture in which one implementation of a feature can easily be swapped for another at link time. This approach brings two major benefits to the project:

- We avoid lock-in effects from either compilers or libraries

- We can maintain a hard separation between the open-source code and Rolls-Royce’s proprietary functionality.

The first benefit ensures portability and enables experimentation: because the rest of the code depends on our own internal interfaces rather than on those of any particular library, different libraries can be explored without affecting it. The second benefit is key to industrial-academic partnerships. By maintaining a clear separation, we can work freely on the core code, including as part of student projects, without any risk of exposing Rolls-Royce’s proprietary code, which is part of the company’s competitive advantage.
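The pattern can be illustrated with a minimal sketch. This is not ASiMoV-CCS code: the module `vec_norm_mod`, the submodule `vec_norm_intrinsic` and the procedure `vec_norm` are hypothetical names chosen for illustration. The parent module declares only the interface of a procedure, and a separate submodule supplies its body. An alternative implementation, for example one calling a different library, would be written as a second submodule of the same parent in its own source file, with the build choosing which one to compile and link.

```fortran
! Parent module (hypothetical): declares the interface only.
! Code elsewhere in the application depends on this module alone.
module vec_norm_mod
  use, intrinsic :: iso_fortran_env, only: real64
  implicit none
  private
  public :: vec_norm

  interface
    ! Euclidean norm of a vector; the body is provided by a submodule.
    module function vec_norm(x) result(nrm)
      real(real64), intent(in) :: x(:)
      real(real64) :: nrm
    end function vec_norm
  end interface
end module vec_norm_mod

! One implementation (hypothetical), using the Fortran 2008 intrinsic norm2.
! A different submodule of vec_norm_mod, kept in another source file,
! could replace this one without changing any code that uses vec_norm_mod.
submodule (vec_norm_mod) vec_norm_intrinsic
  implicit none
contains
  module procedure vec_norm
    nrm = norm2(x)
  end procedure vec_norm
end submodule vec_norm_intrinsic
```

Because callers only `use vec_norm_mod`, swapping `vec_norm_intrinsic` for another implementation changes which object file is linked, not the calling code. The same mechanism lets proprietary implementations live in submodules that are never distributed with the open-source parent modules.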

The ASiMoV-CCS code is under active development and is available on GitHub (https://github.com/asimovpp/asimov-ccs) under the Apache 2.0 licence.

OverFlow Tool

As part of our research into extreme-scale computing at EPCC, we sought to address code bugs that only reveal themselves at scale. These so-called “scale-dependent” bugs occur when the code’s inevitable dependence on the problem size or degree of parallelism leads to unintended behaviour. For example, if mesh indices are stored as 32-bit integers, then at very large problem sizes the number of mesh points can exceed the largest value a 32-bit integer can represent.
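To make this concrete: a structured mesh of 2048 points in each direction has 2048³ = 8,589,934,592 points, roughly four times the largest value a 32-bit signed integer can hold (2,147,483,647). The minimal sketch below uses hypothetical names (`mesh_size_check`, `total_points`, `fits_int32`) and assumes a simple structured mesh. It computes the count in 64-bit arithmetic and checks whether it would fit in a 32-bit integer. Performing the same multiplication in 32-bit integers would overflow, and the Fortran standard does not define the result.

```fortran
! Hypothetical helper module illustrating a 32-bit overflow check.
module mesh_size_check
  use, intrinsic :: iso_fortran_env, only: int32, int64
  implicit none
contains
  ! Total number of points in an nx x ny x nz structured mesh,
  ! computed in 64-bit arithmetic so the product does not overflow
  ! for realistic mesh sizes.
  pure function total_points(nx, ny, nz) result(n)
    integer(int64), intent(in) :: nx, ny, nz
    integer(int64) :: n
    n = nx * ny * nz
  end function total_points

  ! True if a count can be stored in a 32-bit signed integer.
  pure logical function fits_int32(n)
    integer(int64), intent(in) :: n
    fits_int32 = n <= int(huge(0_int32), int64)
  end function fits_int32
end module mesh_size_check
```

A 1024³ mesh (1,073,741,824 points) still fits, whereas a 2048³ mesh does not. A code tested only on the smaller mesh would give no hint of the problem.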

Finding these scale-dependent bugs through normal testing is difficult and can be prohibitively expensive, because they only appear when the code is run at the very large scales it is designed for. To aid the development of codes suitable for extreme-scale computing, we developed the OverFlow Tool (OFT). OFT analyses the source code to find variables whose values depend on the scale of the input, such as the mesh size or the number of processors. Building on the LLVM infrastructure, OFT instruments the application binary to record the values of these variables at runtime, and then uses a regression model to extrapolate them from small scales to the large scales where problems may occur. OFT was successfully demonstrated, finding scaling bugs in several codes, and was presented at the ISC’22 conference [1].
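The extrapolation step can be illustrated with a deliberately simplified sketch. This is not OFT’s implementation. We assume a single scale-dependent variable whose recorded values grow linearly with a scale parameter, fit an ordinary least-squares line to the values observed in small runs, and predict the scale at which the variable would exceed the 32-bit limit. The module `scale_extrapolation` and its procedures are hypothetical names.

```fortran
! Hypothetical sketch: linear least-squares extrapolation of a recorded
! variable to the scale at which it would exceed a 32-bit integer.
module scale_extrapolation
  use, intrinsic :: iso_fortran_env, only: int32, real64
  implicit none
contains
  ! Ordinary least-squares fit of y = a + b*x to the observations (x, y).
  pure subroutine fit_line(x, y, a, b)
    real(real64), intent(in) :: x(:), y(:)
    real(real64), intent(out) :: a, b
    real(real64) :: xm, ym
    xm = sum(x) / size(x)
    ym = sum(y) / size(y)
    b = sum((x - xm) * (y - ym)) / sum((x - xm)**2)
    a = ym - b * xm
  end subroutine fit_line

  ! Scale at which the fitted line reaches the largest 32-bit signed
  ! integer. Returns -1 if the fitted variable does not grow with scale.
  pure function overflow_scale(x, y) result(s)
    real(real64), intent(in) :: x(:), y(:)
    real(real64) :: s, a, b, limit
    limit = real(huge(0_int32), real64)
    call fit_line(x, y, a, b)
    if (b <= 0.0_real64) then
      s = -1.0_real64
    else
      s = (limit - a) / b
    end if
  end function overflow_scale
end module scale_extrapolation
```

For example, if a variable recorded at 10⁶, 2×10⁶, 4×10⁶ and 8×10⁶ mesh points always holds three times the mesh size, `overflow_scale` predicts overflow at about 7.2 × 10⁸ mesh points, a size that routine testing may never reach. Real scale-dependent variables need not grow linearly, so a practical tool has to consider other functional forms as well.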

Scientific communication

Our work on extreme-scale computing also encompassed the visualisation of large-scale simulation data. One of the challenges faced by science is how to communicate results. Presenting them clearly and intelligibly is crucial to the success of research: in an academic setting, to communicate with peers; at a large company such as Rolls-Royce, where people with a range of specialisations must work towards a common goal; and with the general public, who are entitled to the outputs of work funded by government research councils.

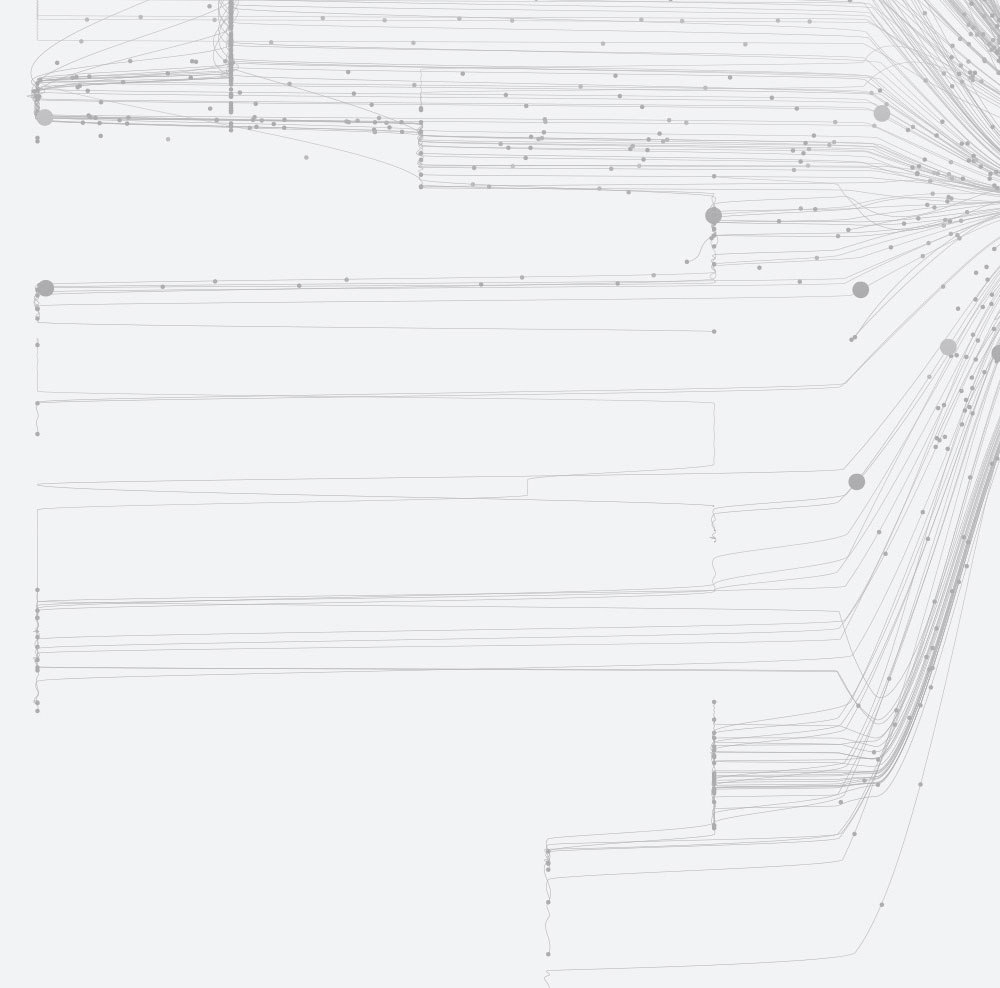

In projects like ASiMoV, which target very large-scale simulations, processing and rendering the vast volumes of simulation data is an additional challenge. EPSRC provided additional funding to support the generation of high-fidelity visualisations from the ASiMoV simulations for scientific communication and outreach. As part of this, EPCC purchased “Scylla” (shown in Figure 2), a powerful graphics workstation equipped with six NVIDIA RTX 4090 GPUs.

Figure 2 The Scylla graphics workstation at EPCC. Sébastien Lemaire, Creative Commons Attribution (CC BY) https://creativecommons.org/licenses/by/4.0/.

The graphics power of Scylla enables the processing of large volumes of simulation data to produce high resolution visualisations and videos for wider dissemination.

In addition to supporting the procurement of the hardware, the EPSRC grant funded the development of a pipeline to take the simulation data through to a final product, such as the rendering shown in Figure 3. Further information about this project can be found in this article: How to make an impactful scientific visualisation.

Figure 3 Still image from the wind turbine rendering on Scylla, based on simulation data from Xcompact3D. Sébastien Lemaire, Andrew Mole, Michèle Weiland and Sylvain Laizet. This work won the video category of the ARCHER2 Image and Video Competition (2024). Creative Commons Attribution (CC BY) https://creativecommons.org/licenses/by/4.0/.

What next?

At EPCC, we are continuing the development of ASiMoV-CCS through an ARCHER2 eCSE project, in continued collaboration with Rolls-Royce. This allows us to focus more effort on optimising the code, building on the functionality implemented during the ASiMoV project. In addition, we plan to port ASiMoV-CCS to GPUs, which are likely to be a key hardware architecture in future supercomputers. Here, our novel submodule-based design will again be of benefit, enabling a portable code base with different implementations of modules targeting CPU and GPU hardware.

Beyond the ASiMoV project, exascale computing capabilities will enable ASiMoV-CCS to be used in designing the next generation of propulsion technologies, which will run on new fuels such as Sustainable Aviation Fuel (SAF) or hydrogen. Such radical changes are required to meet the future challenges of low-carbon, Net Zero “green” aviation.

References

[1] J. Zarins, M. Weiland, P. Bartholomew, L. Lapworth and M. Parsons, “Detecting scale-induced overflow bugs in production HPC codes”, in International Conference on High Performance Computing, 2022.

ASiMoV grant number: EP/S005072/1.