ePython: can we have our cake and eat it?

17 August 2020

Maurice Jamieson is a third-year PhD student at the EPCC working on the programmability of micro-core architectures, both in terms of design and implementation, through the development of the ePython programming language.

We recently published a paper at the Scientific Computing with Python (SciPy) 2020 conference that outlined the latest developments to ePython, a version of Python specifically written to leverage micro-core architectures. The paper provides a detailed discussion of the design and implementation of ePython, touching on the latest updates for managing arbitrarily large data sets and for native code generation. This post will summarise key elements of the paper.

Background

ePython was designed to target micro-core architectures with the aim of supporting a novice in going from zero to hero in less than a minute. In other words, ePython strives to provide a simple, yet powerful, parallel Python programming environment that allows programmers with no prior experience of writing parallel code to get kernels executing in less than a minute on micro-core architectures. The previous ePython blog post describes the language design drivers to leverage the power / performance balance of micro-core architectures and address their limitations, namely the extremely limited on-chip memory (32KB in the case of the Adapteva Epiphany III) and the impact of accessing the significantly slower off-chip shared memory. Here we highlight the work to manage arbitrarily large data sets and the approach to significantly reduce the performance gap between kernels running on the ePython interpreter and those hand-coded in C. The challenge was to see if we could have our cake (produce code small enough to run on micro-cores) and eat it (approach the performance level of C).

The initial purpose of ePython was educational and as a research vehicle for understanding how best to program micro-core architectures and prototype applications upon them. It supports a rich set of message-passing primitives, such as point-to-point messages, reductions and broadcasts between the cores. ePython also supports virtual cores (implemented as threads) on the host to enable the simulation of devices with a higher core count than the physical device attached to the host.

The following simple code example is executed directly on the micro-cores. Here, each micro-core will generate a random integer between 0 and 100 and then perform a collective message passing reduction to determine the maximum number which is then displayed by each core:

from parallel import reduce
from random import randint

a = reduce(randint(0,100), "max")
print "The highest random number is " + str(a)
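To make the semantics of the collective reduction concrete, the following is a minimal sketch in standard Python 3 that simulates it on the host. It is not ePython code and does not use the ePython runtime: the function name `simulate_max_reduce`, the seed parameter and the choice of 16 simulated cores are assumptions made purely for illustration.

```python
import random


def simulate_max_reduce(num_cores, seed=None):
    """Simulate a collective "max" reduction across num_cores virtual cores.

    Hypothetical helper for illustration only; not part of ePython.
    """
    rng = random.Random(seed)
    # Each simulated core draws its own value, like randint(0,100) on a core.
    local_values = [rng.randint(0, 100) for _ in range(num_cores)]
    # After a collective reduction every core holds the same combined result.
    reduced = max(local_values)
    return local_values, [reduced] * num_cores


# Assumption: 16 simulated cores.
local_values, results = simulate_max_reduce(16, seed=1)
for core_id, value in enumerate(results):
    print("Core %d: the highest random number is %d" % (core_id, value))
```

Every simulated core prints the same value, which mirrors the behaviour described above: each core contributes one number and each core receives the maximum.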

This initial approach was developed with the objective of quickly and easily running simple examples on the micro-cores directly and exposing programmers to the fundamental concepts of parallelism in a convenient programming language. However, we realised that it was possible for ePython to support real-world applications running on micro-cores, and that this required a more powerful approach to programming and integration with standard Python.

Offloading

Not all parts of an application are suited to offloading to the micro-cores (file I/O, for example), and many applications rely on Python modules such as NumPy, so an approach was developed to allow specific functions to be selected for offload. ePython was extended to integrate it with existing Python codes running in any Python interpreter on the host. This is implemented by a Python module that programmers import into their application code and that provides the abstractions and underlying support for offloading Python functions as kernels to the micro-cores. Similar to the approach taken by Numba, the programmer annotates the kernel functions using a specific decorator, @offload. In the following example, the mykernel() function is marked for offloading as a kernel and is executed on all the micro-cores. The example also highlights the simplicity with which data can be passed to and from the micro-cores as function parameters and return values. You might have guessed that, as there is a kernel return value for each core, they are returned to the calling Python code as a list.

from epython import offload

@offload
def mykernel(a):
    print "Hello with " + str(a)
    return 10

print mykernel(22)

Although not shown above, the @offload decorator accepts a number of arguments that control how the kernels are executed. For example, the target argument takes a single core ID or a list of core IDs that the kernel will be executed on. The async argument controls whether the kernel executes in a blocking or non-blocking manner; in the non-blocking case, the function call returns a handler immediately that can be used to test for kernel completion, wait for all kernels to complete, etc. The auto argument allows the programmer to specify the number of cores the kernel is executed on, when they are available, without specifying which ones explicitly. Finally, the device argument specifies the type of micro-core in heterogeneous micro-core systems.
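To illustrate how a decorator of this kind can fan a call out over several cores and gather one return value per core, here is a sketch in standard Python 3 that mocks the behaviour with host threads. It is a hypothetical stand-in, not the ePython module: the core count of 16, the `offload` implementation and the kernel names are assumptions. Because `async` is a reserved word in Python 3.7 and later, the mock spells the argument `async_`.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_CORES = 16  # assumption: core count of the simulated device


def offload(func=None, *, target=None, async_=False):
    """Hypothetical mock of an offload decorator; NOT the ePython module."""
    def decorate(kernel):
        def launcher(*args):
            # Work out which simulated cores run the kernel.
            if target is None:
                cores = list(range(NUM_CORES))
            elif isinstance(target, int):
                cores = [target]
            else:
                cores = list(target)
            # One host thread stands in for each selected core.
            pool = ThreadPoolExecutor(max_workers=len(cores))
            futures = [pool.submit(kernel, *args) for _ in cores]
            pool.shutdown(wait=False)
            if async_:
                return futures  # handles the caller can poll or wait on
            return [f.result() for f in futures]  # one result per core
        return launcher
    # Support both the bare @offload form and @offload(target=...).
    return decorate(func) if callable(func) else decorate


@offload
def mykernel(a):
    return a + 10


@offload(target=[0, 3], async_=True)
def partial_kernel(a):
    return a * 2


print(mykernel(22))                    # blocking: list with one entry per core
handles = partial_kernel(4)            # non-blocking: returns handles at once
print([h.result() for h in handles])   # wait for both selected cores
```

In the blocking case the caller receives a plain list of results, while in the non-blocking case it receives handles that can be queried later, which is the distinction the async argument draws.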

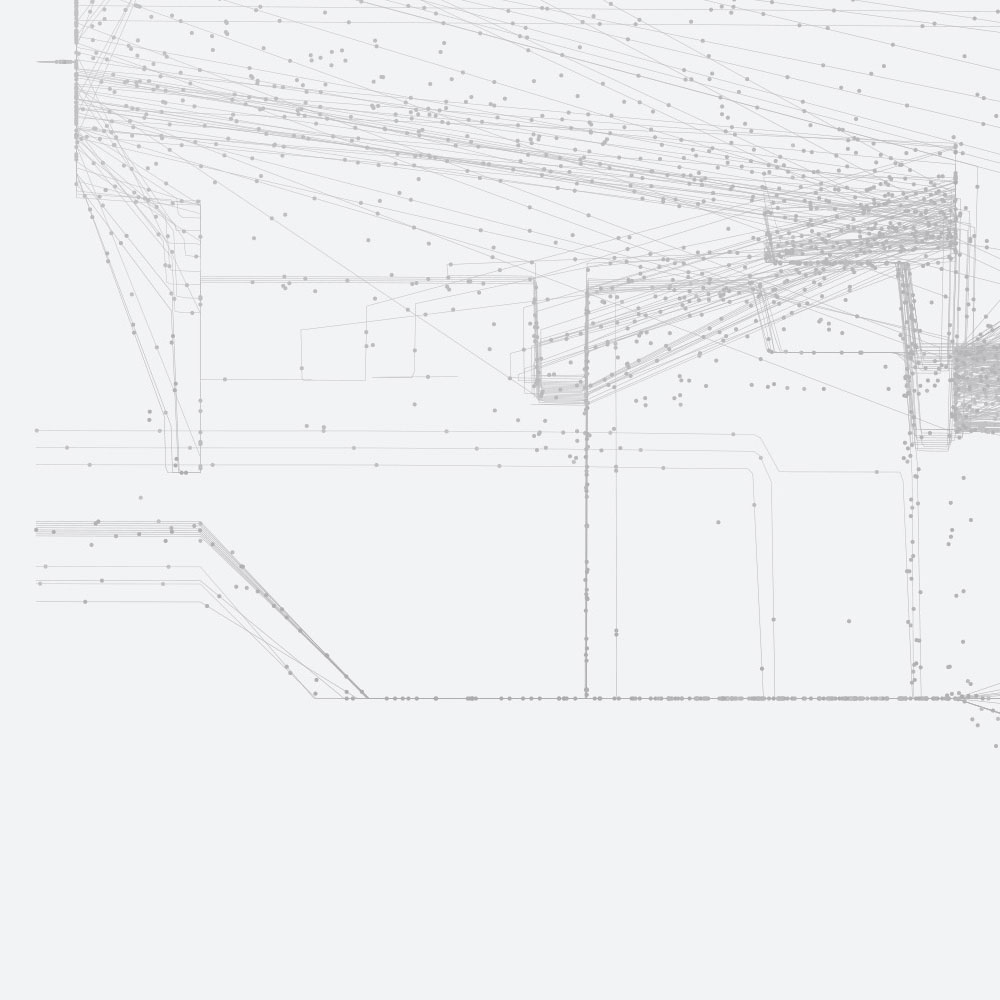

Arbitrarily large data sets

I think you will note that the usefulness of the above approach is limited by the amount of data that can be manipulated on the micro-cores. To deploy useful applications to the micro-cores, a mechanism is required for managing the movement of data from the much larger, but much slower, shared DRAM to the fast on-chip SRAM. As you can see from the diagram above, even this core-addressable shared DRAM is limited in size (32MB for the Adapteva Parallella). Perhaps what is less clear from the offload example above is that the kernel argument semantics are pass by reference, following a similar approach to CUDA’s Unified Virtual Addressing (UVA). However, in ePython, this is achieved at the software rather than hardware level and the runtime performs the data transfer. Whilst this appears expensive, there are options to prefetch the data and the Epiphany provides two DMA channels that can perform data transfers independently of the processor cores.
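The idea of overlapping data transfer with computation can be sketched in standard Python 3 as follows. This is an illustration only, assuming a single background thread as a stand-in for a DMA channel; the names `fetch_chunk` and `kernel_sum` and the chunk size are hypothetical, and none of this reflects the actual ePython runtime interface.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_chunk(host_data, start, size):
    """Stand-in for a transfer from shared DRAM into on-chip memory."""
    return host_data[start:start + size]


def kernel_sum(host_data, chunk_size):
    """Sum a large array while holding at most two chunks locally."""
    total = 0
    # A single worker thread plays the role of one DMA channel (assumption).
    with ThreadPoolExecutor(max_workers=1) as dma:
        pending = dma.submit(fetch_chunk, host_data, 0, chunk_size)
        for start in range(0, len(host_data), chunk_size):
            chunk = pending.result()  # wait for the current chunk to arrive
            next_start = start + chunk_size
            if next_start < len(host_data):
                # Prefetch the next chunk while this one is being processed.
                pending = dma.submit(fetch_chunk, host_data,
                                     next_start, chunk_size)
            total += sum(chunk)
    return total


data = list(range(100000))
print(kernel_sum(data, chunk_size=1024))
```

The kernel never holds the whole data set, only the chunk being processed and the one being fetched, which is the property that makes data sets larger than on-chip memory workable.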

To enable greater flexibility, we provide a mechanism to allow the programmer to specify the location of variables, over and above the implicit placement determined by the scope level, i.e. local kernel variables are on-chip and the global Python script variables are on-host. This is implemented using the ePython module memkind class, which provides multiple locations in the memory hierarchy for allocating variables, allowing kernels to access memory not directly addressable by the micro-cores.

Native code generation

As shown in the above diagram, the updated ePython architecture retains the heavy lifting code compilation tasks (lexing, parsing, optimisation and generation of byte code or native binaries) on the ARM host. Furthermore, the integration with standard Python environments and the marshalling of data and control also take place on the host. Interestingly, on the micro-cores, the architecture-specific runtime library and communications protocol / code are used by both the interpreter and the directly executing native code, ensuring that natively compiled code kernels can leverage all of the existing ePython runtime support. Unsurprisingly, the original byte code interpreter and runtime were designed to be as small as possible (c. 24KB) to fit in the 32KB on-chip memory limit of the Adapteva Epiphany III, with the necessary trade-off between minimum size and maximum performance. It is important to remember that even the interpreter for the embedded version of Python, MicroPython, is too large for micro-cores at 256KB for code and 16KB for runtime data. However, even with the size / performance trade-off, ePython delivers better performance on micro-cores than Python running on ARM and Intel Broadwell processors for machine learning benchmarks.

A number of different approaches were considered for the ePython native code generation, including just-in-time (JIT) compilation and ahead-of-time (AOT) compilation by generating micro-core assembly language. Unsurprisingly, the extremely limited on-chip micro-core memory makes a JIT approach infeasible. Generating assembly language would also inhibit the ability to compare performance across different micro-core architectures, as different assembly language source would need to be generated for each specific instruction set architecture (ISA). Allied to this, previous experience developing native code generation for other high-level programming languages highlighted that generating optimised assembly language is difficult (register colouring / allocation, dead store elimination etc.) and that significantly better results can be achieved by generating high-level C source code and leveraging the extensive code optimisation techniques found in modern C compilers such as GCC and Clang / LLVM. Another benefit for ePython is that generating C source code required relatively few changes to the existing architecture, with the key changes being modifications to the lexer and parser to generate an abstract syntax tree (AST), a new C code generation module and a new micro-core dynamic loader / runtime library to support the new native code kernels.
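The general idea of walking an AST and emitting C source, leaving optimisation to the C compiler, can be sketched with the `ast` module from the standard Python 3 library. This is a toy translator and not the ePython code generator: it assumes a function whose body is a single return of an arithmetic expression, and it assumes every value is a C double. The names `expr_to_c` and `function_to_c` are hypothetical.

```python
import ast

# Mapping from Python AST operator nodes to C operator text.
_BIN_OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}


def expr_to_c(node):
    """Translate a small subset of Python expressions to C source text."""
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return "(%s %s %s)" % (expr_to_c(node.left),
                               _BIN_OPS[type(node.op)],
                               expr_to_c(node.right))
    if isinstance(node, ast.Name):
        return node.id
    if (isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return repr(node.value)
    raise NotImplementedError("unsupported expression: " + ast.dump(node))


def function_to_c(source):
    """Emit a C function for a Python function that is one return statement."""
    func = ast.parse(source).body[0]
    if not isinstance(func, ast.FunctionDef):
        raise NotImplementedError("expected a function definition")
    if len(func.body) != 1 or not isinstance(func.body[0], ast.Return):
        raise NotImplementedError("expected a single return statement")
    # Assumption: all parameters and the result are doubles.
    params = ", ".join("double " + arg.arg for arg in func.args.args)
    body = expr_to_c(func.body[0].value)
    return "double %s(%s) {\n    return %s;\n}" % (func.name, params, body)


print(function_to_c("def average(a, b):\n    return (a + b) / 2"))
```

The emitted text is ordinary C that can be handed to GCC or Clang, so the same generator output can be compiled for any ISA that has a C compiler, which is the portability argument made above.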

The initial performance results for a Jacobi benchmark on a range of processor architectures, including the Adapteva Epiphany, Xilinx MicroBlaze, PicoRV32 RISC-V, MIPS32, SPARC v9, ARM Cortex-A9 and x86-64, show only around a 10% performance overhead for the ePython native code generation version over an optimised (-O3) hand-coded C version. On the Epiphany, this results in the ePython native code version providing a speedup of between 250 and 500 times over the version running on the interpreter. We find these results very encouraging as the C code model is also able to support high-level language features, such as objects and lambdas / closures, as well as enabling dynamic code loading. The latter feature allows kernels to dynamically load natively compiled functions as required, and for them to be garbage collected when complete, effectively supporting kernels of arbitrary size. Also, as the current bootloader is 1.5KB, ePython is now able to target micro-core architectures with significantly less RAM than even the Epiphany, such as the 2GRVI Phalanx.

Summary

We think that our new models for arbitrarily sized data and dynamic code loading are really exciting for ePython as they now allow real-world applications to be run on micro-cores. As we have also significantly narrowed the performance gap between ePython and hand-coded C on the micro-cores, I think you will agree that we can now have our cake and eat it.