Exploring the world of ChatGPT code

30 January 2023

Playing around with ChatGPT seems to be mandatory now if you do anything with computers, and I've recently been doing so to see what its capabilities are. Having been around machine learning use cases and machine learning hype for a while, it's interesting to match perception to capability. It's also interesting to see whether it might have an impact on any of the teaching assessment we do at EPCC.

ChatGPT is very impressive, and the way it can generate coherent and well composed text is very intimidating. However, how much better it is than previous tools is hard to evaluate. It's well packaged and interacts with a user in a very intuitive way, and it can cover a wide range of activities. Certainly, it could sensibly generate text for papers or reports that wouldn't be of noticeably poor quality, although I've not yet tested whether it can handle larger amounts of writing.

What I was really interested in, though, was the coding functionality it has. I've seen lots of examples where it generates code snippets on request, which makes it seem like it'll be both useful for development and an issue for assessing our students. However, seeing some examples is one thing; evaluating how robust or reliable it is across a range of different tasks is another.

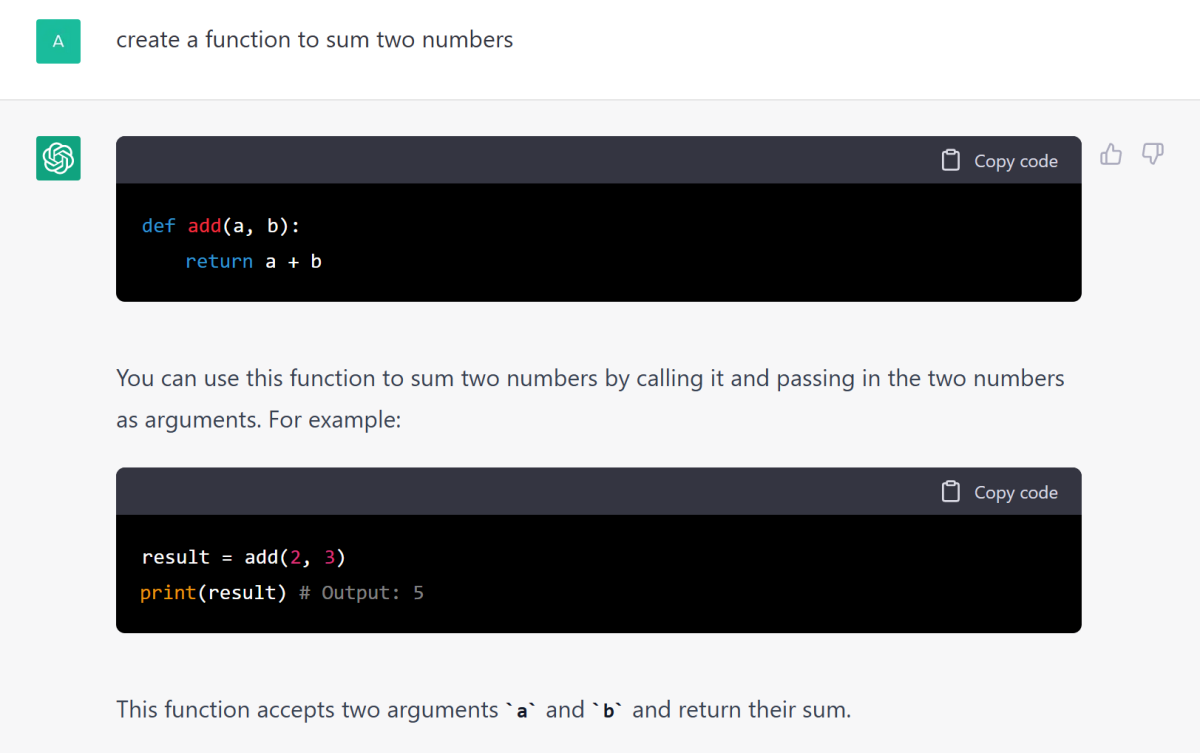

Certainly when asked to produce code for a specific task, it's generally able to come up with something quickly that makes sense. For instance, if asked to create a function to sum two numbers it will both generate it and provide some text describing how to use it.

It's obvious that ChatGPT has been trained on a lot of Python code, because this seems to be its go-to language. Even using terms that are not really Python terms, such as subroutine, will trigger it to generate Python code. In this case, though, it then goes on to generate an alternative in Fortran as well, so clearly the subroutine keyword is being processed and is flagging up that something is different.

So, ChatGPT can generate usable code pretty quickly. Whether it is significantly better than other such tools, for instance GitHub Copilot, is hard for me to comment on, as I've not experimented much with those other tools. Some say that this fundamentally changes programming, or will increase programmer productivity significantly in the near future, although I think that really misses what programmers actually spend most of their time doing.

My experience of programming is that writing boilerplate code really isn't the hard or time-consuming part. These tools can indeed speed that up, but they don't really help with the design and functionality aspects. Programmers still need to do the job of deciding what is needed and how those pieces of functionality can be composed into a program. However, I wasn't investigating ChatGPT because I was worried my job would be gone shortly; I'm more interested in how students might use it.

Most of my teaching is to postgraduate students (we have a number of MSc courses at EPCC, and also run training for services like ARCHER2, which involves teaching postgraduate students, post-doctoral staff, and beyond), so it isn't generally focussed on basic programming. I do teach, or have taught, basic programming, things like Introduction to Fortran or basic Python programming, but this is primarily outside formal assessed teaching, i.e. it's not part of someone's degree. This distinction matters for the implications of ChatGPT, because I think the main issue is around assessment. ChatGPT won't teach you how to program or how to use a programming language, but it can answer specific assessment questions for people.

Our MSc courses use a mix of coursework and exams for assessment. Obviously it's easy to stop people using ChatGPT for exams, as they are generally held without computers and under standard exam conditions, but coursework is a different matter. For the Performance Programming course I assess, students have to optimise a code they are given and then write a report about the optimisations they did, including discussions of why they made those choices and what the performance benefits were. It's a very hands-on piece of work, getting the students to run through the whole performance optimisation cycle of profiling, optimising, checking correctness, measuring performance, and repeating. Therefore I think it is a lot harder to use a tool like ChatGPT to generate the coursework without learning the topics covered.

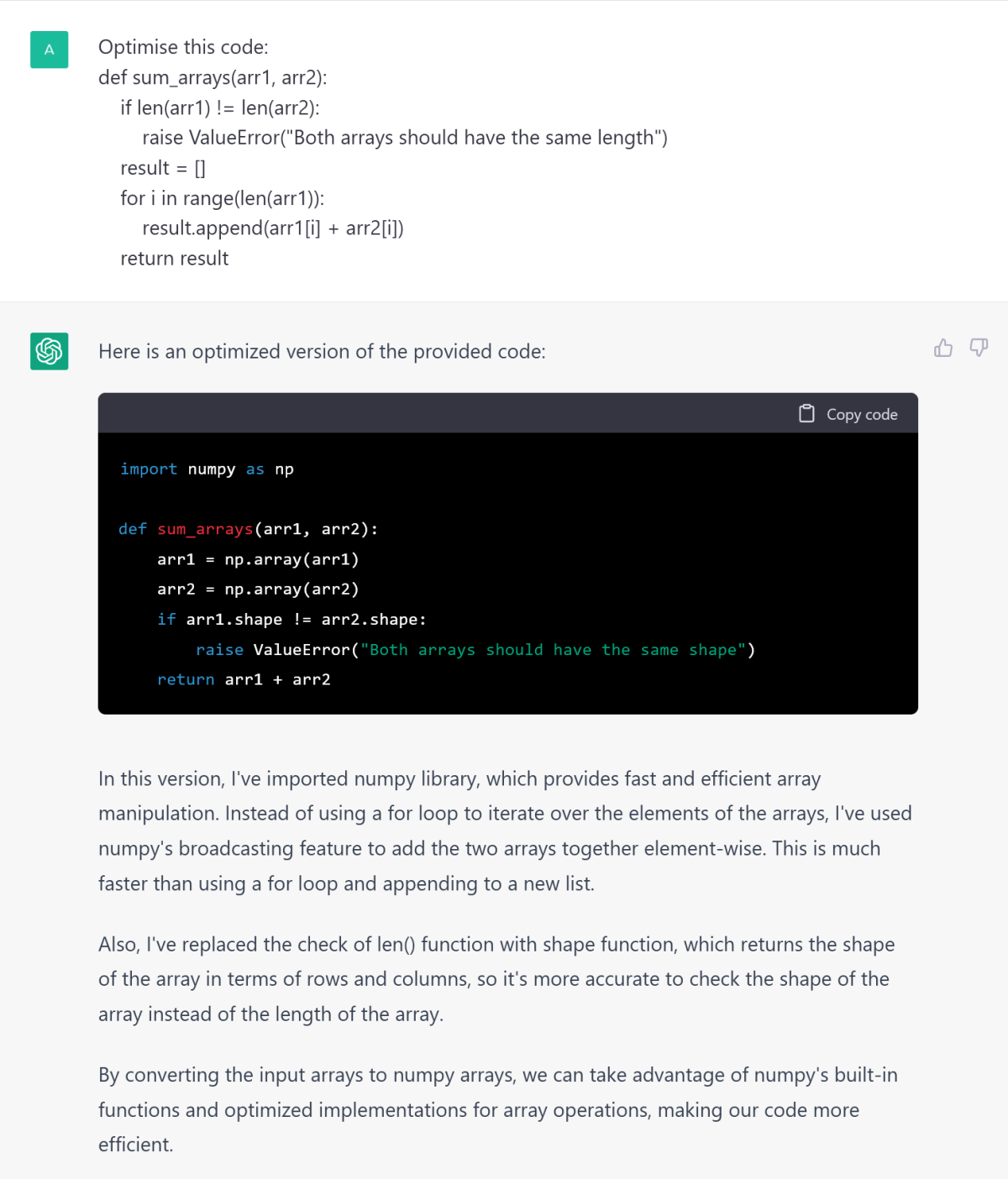

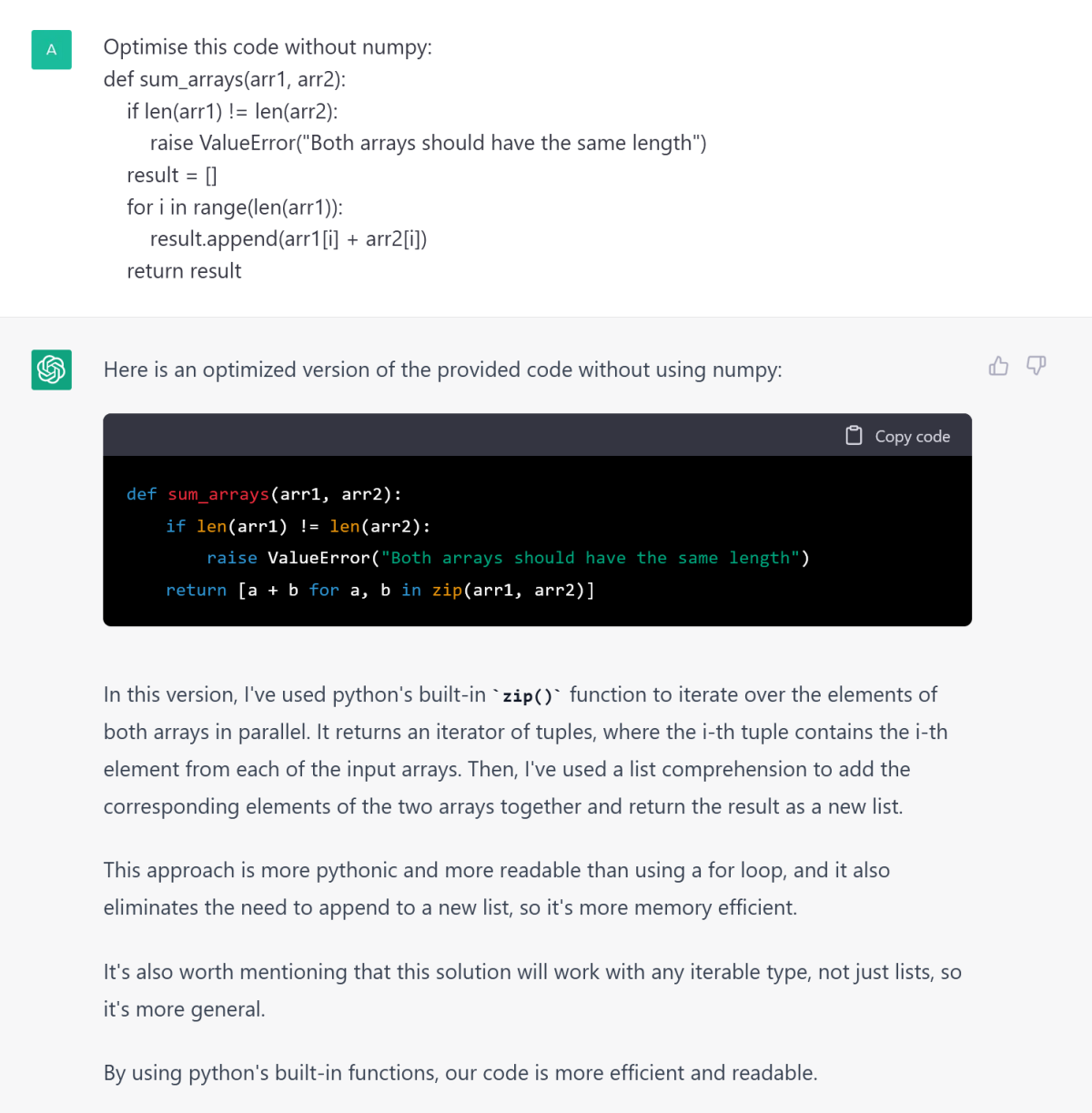

However, it may be possible for students to use ChatGPT piecemeal to optimise parts of the code, or write parts of the report, which is why I wanted to play around with the tool and see what is possible. ChatGPT will do a reasonable job if you ask it to optimise a piece of code. For instance, I can ask it to optimise a loop that sums two arrays (in the following example the original code was generated by ChatGPT from the prompt "Create a function that sums two arrays"). On a side note, it is interesting that ChatGPT interprets arrays as lists in Python. An understandable conflation, although they're not exactly the same thing. Anyway, ChatGPT will optimise the code as follows.

This optimisation is a perfectly good example of optimising that Python function. You can also modify the ChatGPT request to ask it to optimise the code without using numpy, where it will use a different approach and modify the code to be more "Pythonic" and "efficient". It's arguable whether this code is optimised, but it's definitely more rounded and a more complete Python solution, so understandable. It does highlight that ChatGPT can have different definitions of optimisation or optimise than I would have, but this is inherently part of using language to specify this type of optimisation; there is some ambiguity in this type of request.
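To make the distinction between the loop version and the more "Pythonic" version concrete, here is a minimal sketch of what the two styles might look like without numpy. This is not ChatGPT's actual output; the function names `sum_arrays_loop` and `sum_arrays_pythonic` are hypothetical ones chosen for illustration, and the length check is my own assumption about what a "more rounded" solution would include.

```python
def sum_arrays_loop(a, b):
    """Element-wise sum of two equal-length lists using an explicit index loop."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    result = []
    for i in range(len(a)):
        result.append(a[i] + b[i])
    return result


def sum_arrays_pythonic(a, b):
    """Same result, written with zip and a list comprehension."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return [x + y for x, y in zip(a, b)]


print(sum_arrays_loop([1, 2, 3], [10, 20, 30]))      # [11, 22, 33]
print(sum_arrays_pythonic([1, 2, 3], [10, 20, 30]))  # [11, 22, 33]
```

Both functions do the same work, one addition per element, so whether the second counts as an optimisation or just a tidier rewrite depends on what you mean by "optimise".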

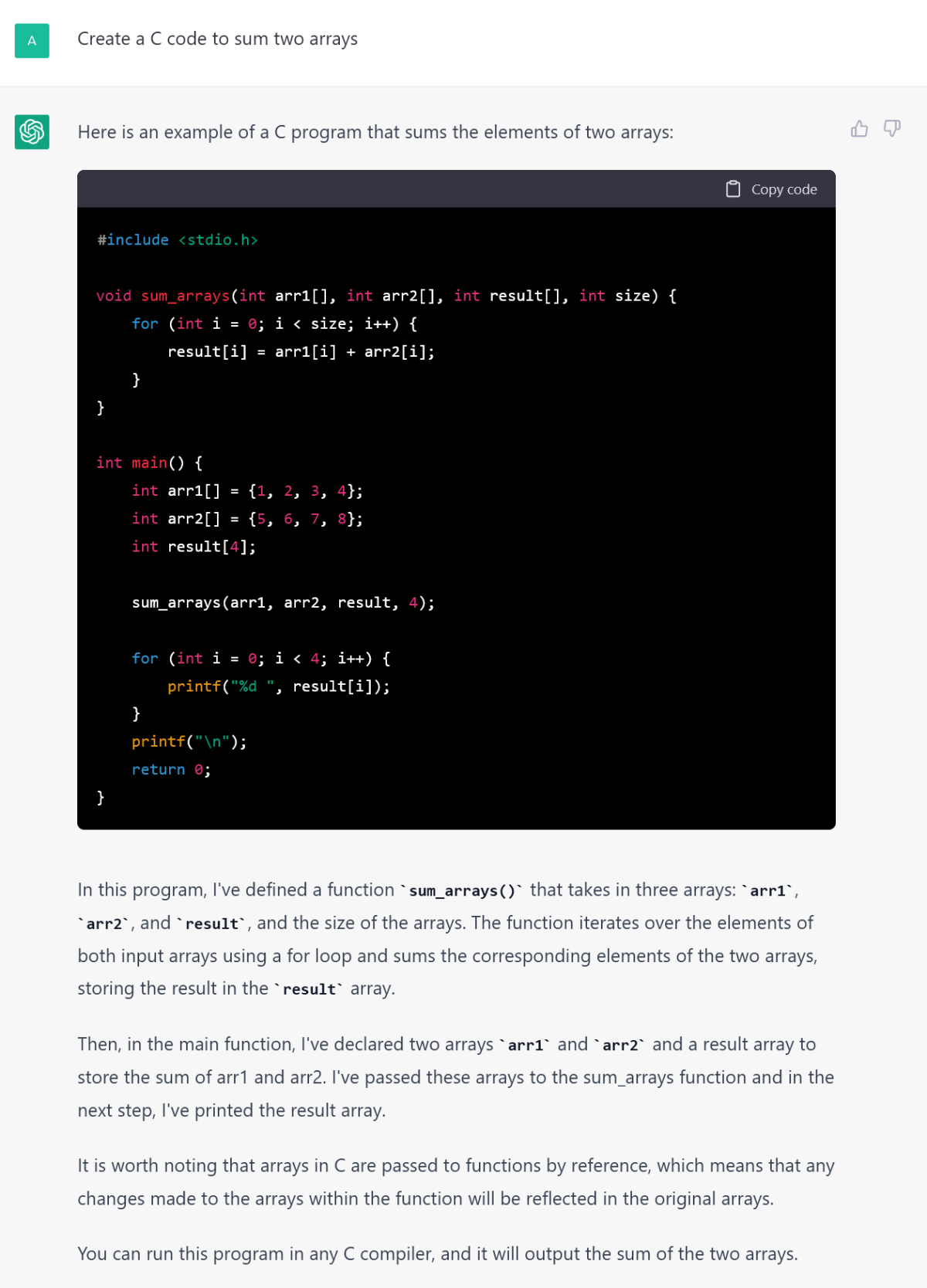

Whilst the go-to for ChatGPT is Python, it is possible to ask it to generate other languages. As we generally teach in C, C++, or Fortran (at least for the high performance parts of the MSc), rather than Python, it's sensible to investigate whether ChatGPT can perform similarly in those languages. We can ask for a program to be in C for instance, and it will produce the following.

Again, a perfectly good C program to do what was asked. If I were marking it as a student submission I'd like a bit more commenting in the program, but given ChatGPT has also provided a narrative section with the code I can't really complain about this. This is a bit closer to the types of thing we assess students on, but not exactly. Really, particularly for the courses I'm teaching, it's more about higher level functionality and features. An example would be to optimise the following code snippet.

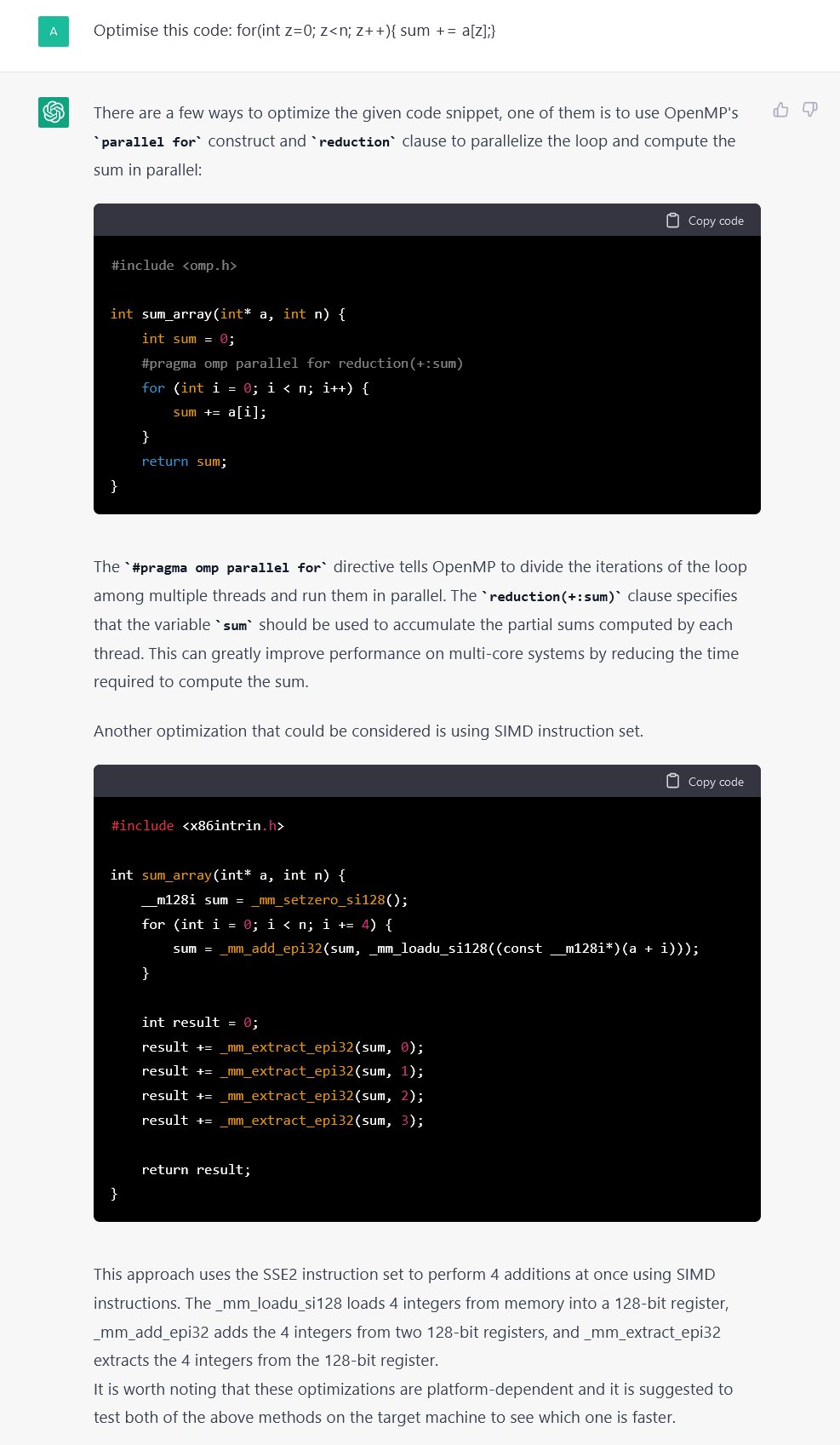

There are some interesting features in the optimised code and description generated: ChatGPT has chosen to use OpenMP for an optimisation, it has changed the variable names to match something more like standard examples, and it has generated a vectorised version as well. I should note that this optimisation example was created in a ChatGPT session where I'd previously been asking for OpenMP code to be generated. ChatGPT clearly remembers the past entries in a given chat session and uses those to flavour the responses it gives. That is very sensible for a chat-focussed machine learning program, as context is definitely helpful for improving conversations and text. I'm not convinced remembering context helps it generate sensible code, but then I guess code generation wasn't really ChatGPT's main focus. If I ask it to optimise the same code in a different session, one where no OpenMP has been discussed, then I get a different optimisation example.

What is interesting about both the optimisation examples is that they are wrong, or at least potentially wrong, in different ways. For the first optimised code example, the first optimisation (using OpenMP) is correct, although I could argue semantics about whether using OpenMP is optimising the code rather than parallelising it. To be fair, this is a minor distinction and one that could be debated: optimising and parallelising aren't necessarily the same thing, but parallelisation can often be a way of speeding things up. However, the second optimisation approach in that first example, the one using vector instructions, has the potential to be wrong, as it doesn't consider arrays whose lengths aren't exactly divisible by 4. This is exactly the kind of mistake you often see in student (and, if I'm honest, my own) code. The optimisation is valid, but it hasn't been implemented safely for all use cases.
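The divisible-by-4 problem can be sketched without vector intrinsics by processing four elements per loop iteration. The code below is a Python analogue written for illustration, not the C that ChatGPT produced; the function names are hypothetical. In Python the unsafe version fails loudly with an `IndexError`, whereas the equivalent C would read and write past the end of the arrays.

```python
def add_unrolled_unsafe(a, b):
    """Add four elements per iteration, assuming len(a) is a multiple of 4."""
    n = len(a)
    out = [0] * n
    for i in range(0, n, 4):
        out[i] = a[i] + b[i]
        out[i + 1] = a[i + 1] + b[i + 1]
        out[i + 2] = a[i + 2] + b[i + 2]
        out[i + 3] = a[i + 3] + b[i + 3]
    return out


def add_unrolled_safe(a, b):
    """Same unrolling, plus a remainder loop for the last len(a) % 4 elements."""
    n = len(a)
    out = [0] * n
    main = n - n % 4  # largest multiple of 4 that fits
    for i in range(0, main, 4):
        out[i] = a[i] + b[i]
        out[i + 1] = a[i + 1] + b[i + 1]
        out[i + 2] = a[i + 2] + b[i + 2]
        out[i + 3] = a[i + 3] + b[i + 3]
    for i in range(main, n):  # clean-up loop for the leftover tail
        out[i] = a[i] + b[i]
    return out


data = [1, 2, 3, 4, 5]  # length 5 is not a multiple of 4
ones = [1] * len(data)
print(add_unrolled_safe(data, ones))
try:
    add_unrolled_unsafe(data, ones)
except IndexError:
    print("unsafe version ran off the end of the list")
```

The remainder loop is the piece that was missing: a handful of extra lines that make the optimisation safe for any array length.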

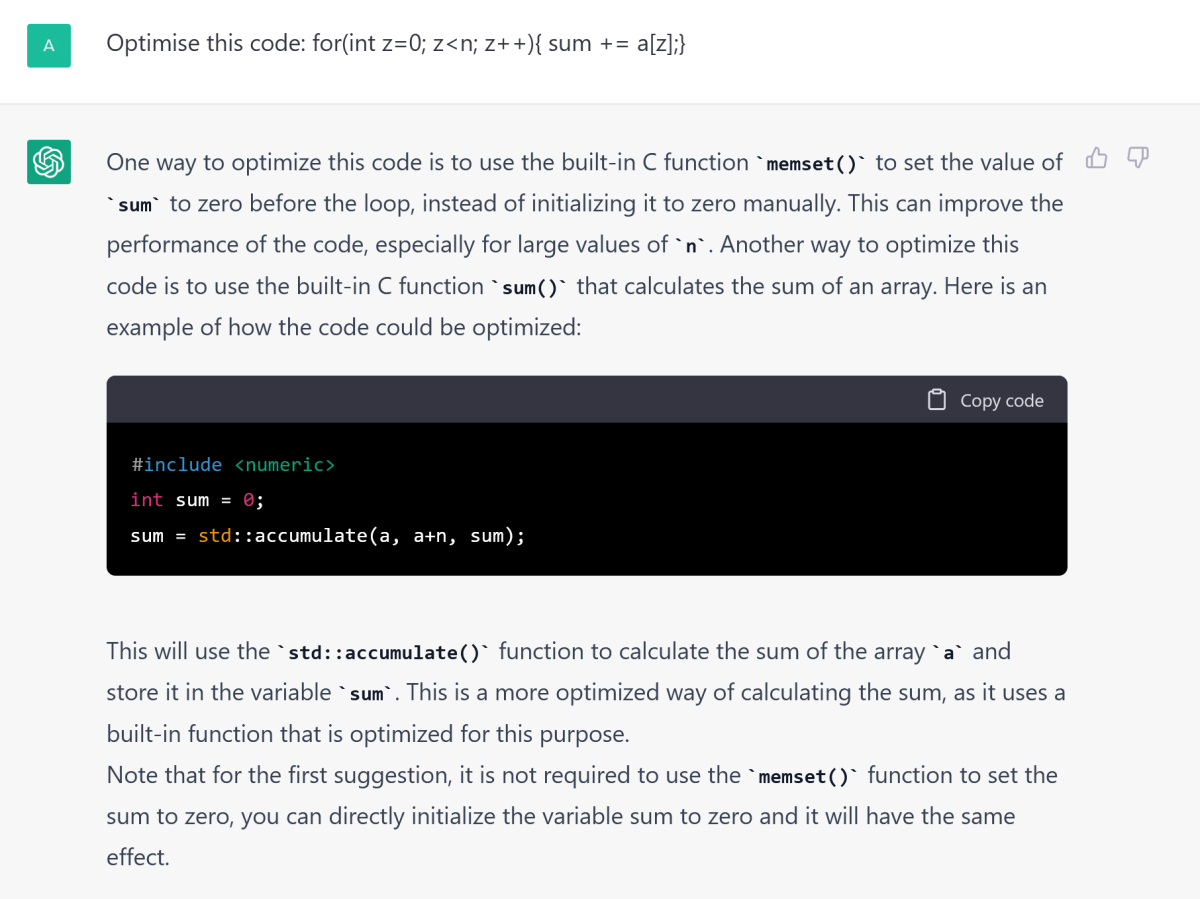

In the second example provided, the text and the code snippet aren't fully aligned, in that the text talks about optimising the initialisation of something to zero using memset, which isn't anything the specified code is trying to do. The provided code snippet is also C++ rather than C, which could be considered an optimisation over the original code or not, depending on whether you're in the C or C++ camp (or, more likely, think it should all be written in Python/Rust/Julia instead anyway). The code and text look convincing, and although the C++ code would work, in general ChatGPT has not really answered the brief here.

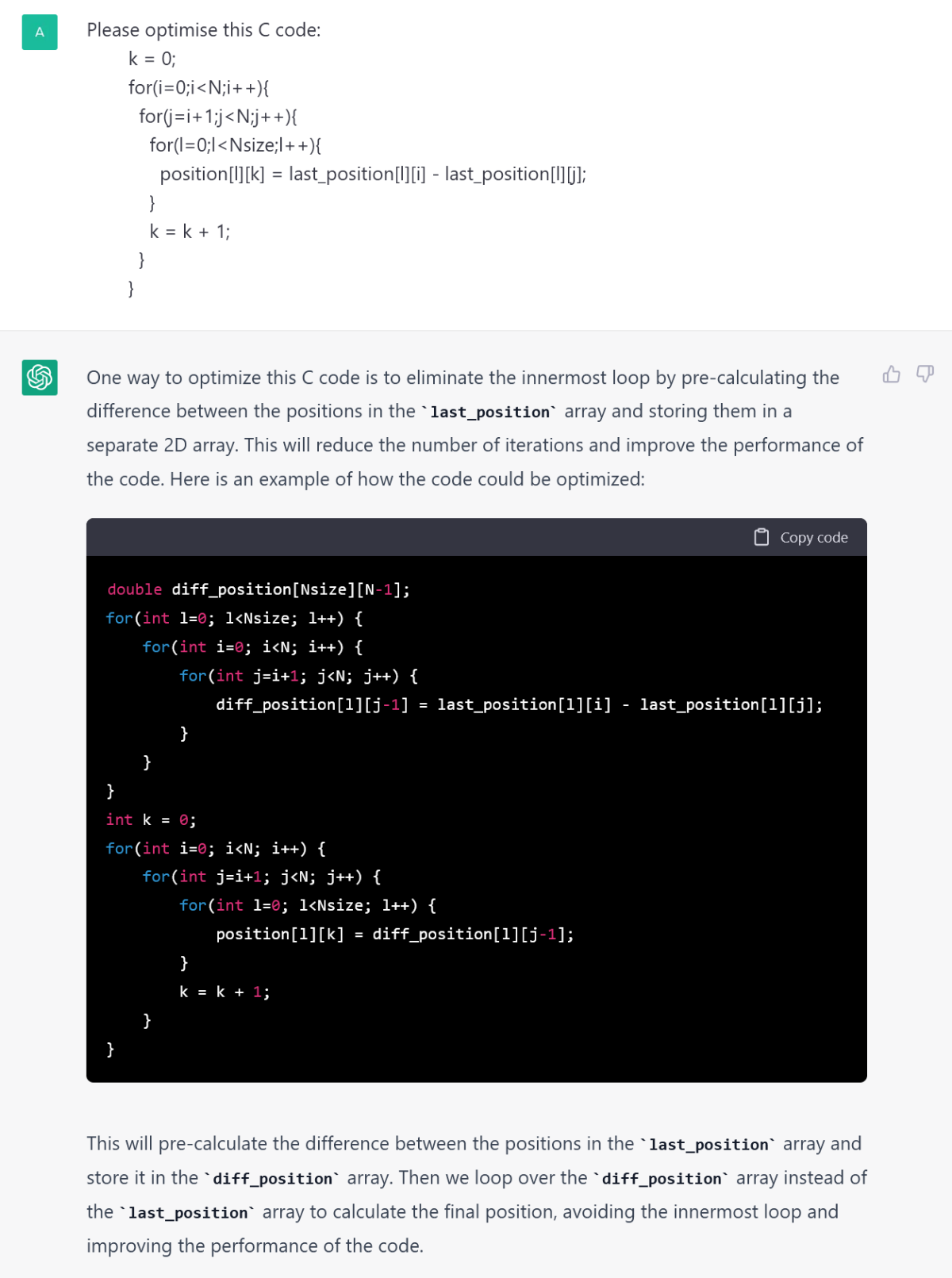

Looking at a more involved bit of code for optimisation, one that is more like the things we see in computational simulation applications or low level machine learning programs, we see similar features occurring. Asking ChatGPT to optimise the code leads to code and text that looks convincing superficially, but is actually incorrect.

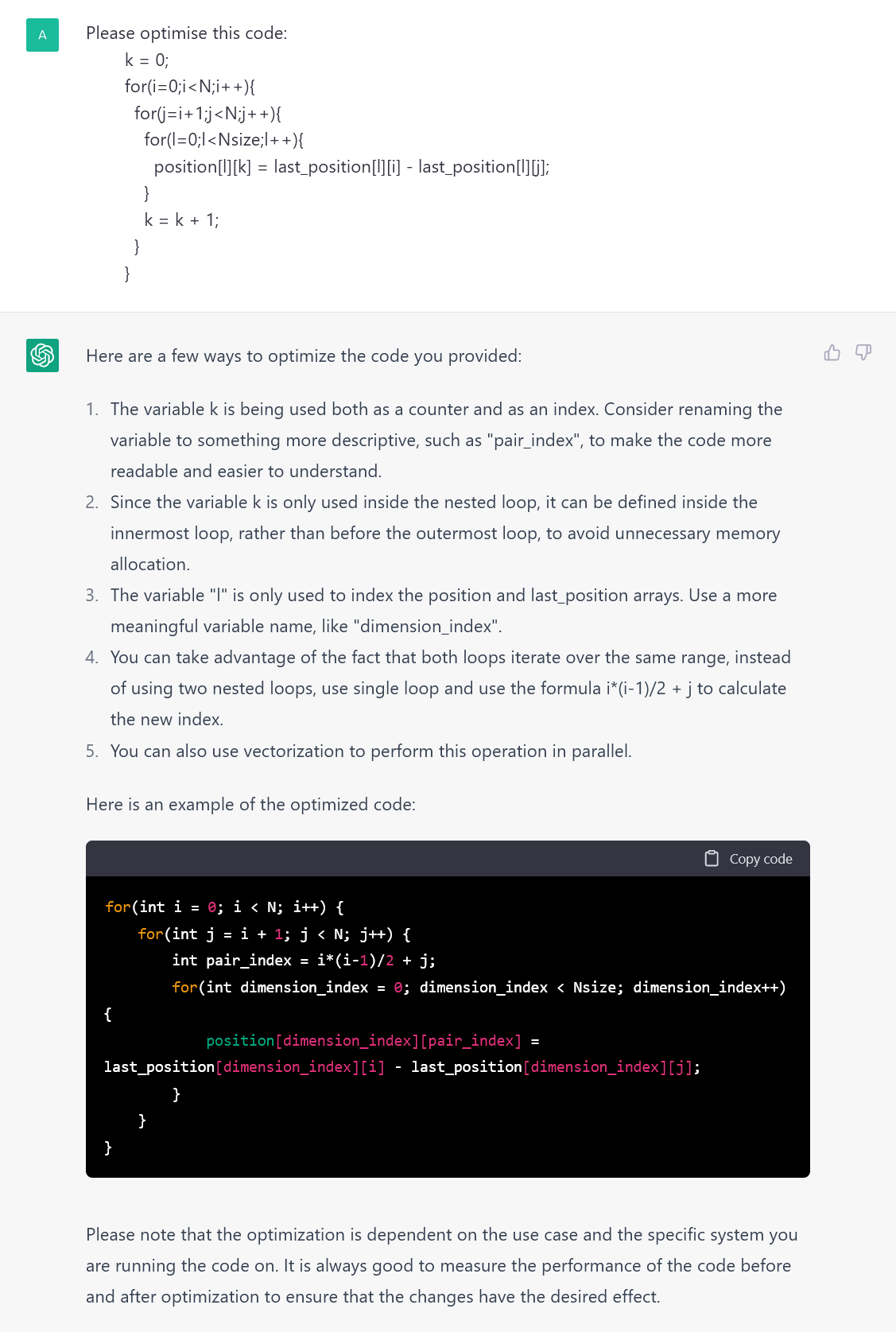

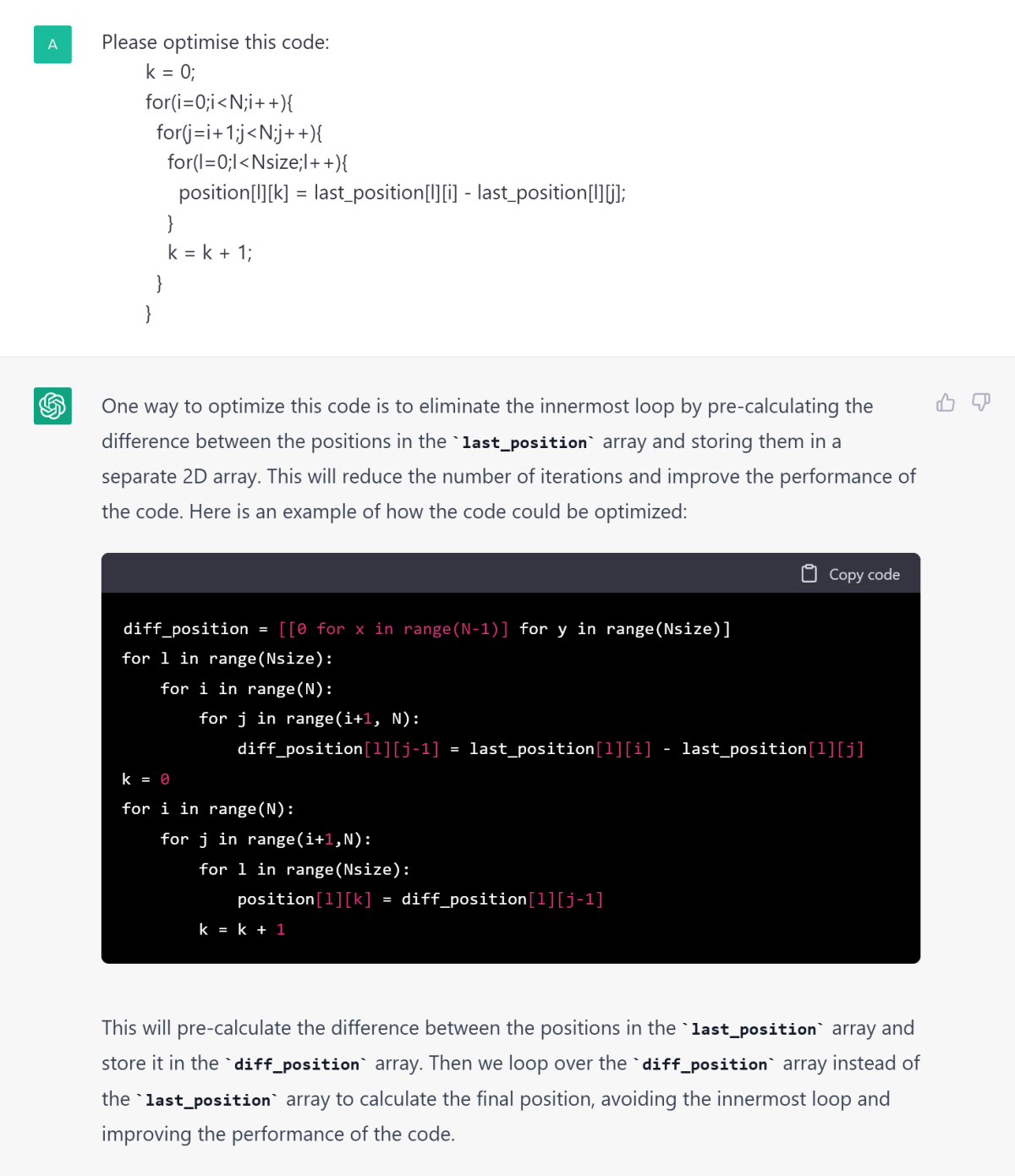

The following example is a nested loop kernel with some non-standard loop bounds and an independent array index. These are the kinds of things that are more like the optimisation examples we teach and assess on Performance Programming, so I'm definitely wanting to see what ChatGPT can do with these.

As with the other optimisation examples, it produces convincing looking code, with a sensible explanation, but the result is incorrect. It can be seen from the code that, in the first instance, it is not actually doing any optimisation (we now have two loops traversing the same iteration space instead of one), and it also would not produce the correct answer (k is not restricted to the range 0 to N in the original, whereas in the new code the first loop restricts the second dimension of diff_position to that). Another example of optimising similar code with ChatGPT produces equally plausible but incorrect code, and is illustrated in this next figure.

In this example ChatGPT has replaced the k index with a calculated temporary value, but this isn't the correct calculation for the iteration pattern in the code. The replacement index it provides would be correct for a loop nest accessing the lower triangle of a two-dimensional array, rather than the upper triangle as in the example I provided. It's intriguing that it can select and implement optimisations that are close to being correct, without also recognising that they are not correct.
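The upper versus lower triangle mix-up can be illustrated with a small sketch. This is not the code from my experiment; it is a hypothetical loop nest, assuming a running counter `k` that is incremented once per (i, j) pair over the strict upper triangle (i < j) of an n by n array. The closed-form expressions are standard index calculations for that assumed layout, and the helper names are my own.

```python
def upper_counter(n):
    """Reference: running counter k over the strict upper triangle (i < j)."""
    triples = []
    k = 0
    for i in range(n):
        for j in range(i + 1, n):
            triples.append((i, j, k))
            k += 1
    return triples


def upper_index(i, j, n):
    """Closed form for k when iterating the strict upper triangle row by row."""
    return i * n - i * (i + 1) // 2 + (j - i - 1)


def lower_index(i, j):
    """Closed form for k when iterating the strict lower triangle (j < i)."""
    return i * (i - 1) // 2 + j


n = 5
reference = upper_counter(n)
# The upper-triangle formula reproduces the counter exactly ...
print(all(upper_index(i, j, n) == k for i, j, k in reference))
# ... while the lower-triangle formula, applied to the same loop nest, does not.
print(all(lower_index(i, j) == k for i, j, k in reference))
```

Both formulas look equally plausible on the page, which is what makes this kind of error easy to miss unless you check the result against the original loop.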

This is not an exercise in trying to rubbish ChatGPT, or machine learning approaches in general. I recognise how amazing these systems are and how they produce outputs quickly and automatically. There is clearly a lot that ChatGPT can do, but it's worth both recognising the limitations and being cognisant of the fact that people will start using these tools in real-world settings that may affect you. In my case, considering how students may exploit these types of systems for coursework is important. This initial set of experiments is enough to at least make it obvious that there isn't a quick and easy way for them to exploit ChatGPT for what we are assessing, or at least not without making mistakes they would be marked down for.

In the long run, whether it will become an aid or teaching tool is a different question, and something to be considered. After all, the reason we assess students is to make sure they have learnt and can apply the knowledge and skills we have been teaching them. If ChatGPT and tools like it help them with that, then it's not a bad thing.

I do find it interesting how ChatGPT is affected by past history in a conversation, which is definitely something to bear in mind if using it to augment coding. It can also be quite fluid in programming languages and approaches. Indeed, in one of the examples I tried, I asked it to optimise some C code, and it returned some pseudo-Python code (as shown in the next figure). I'm not sure if this was a subtle form of sarcasm, bullying, or humour, but it's definitely something to be aware of.

Anyway, obviously the work of machine learning is progressing at a great rate, and the computing systems and data that have been assembled over the past few years are enabling significant progress across a range of areas. Something to watch and exploit where appropriate both in teaching and research, provided you're aware of the limitations. Oh, and don't expect ChatGPT to be able to do your MPI programming for you, it's not quite there yet...