High-performance ray tracing for room acoustics

3 September 2018

The Auralisation of Acoustics in Architecture project is considering how to improve the modelling of sound qualities in rooms, whether existing, planned or ruined. Brian Hamilton of the University of Edinburgh's Acoustics & Audio Group writes about this collaboration with EPCC.

Last August EPCC’s James Perry, Kostas Kavoussanakis and I started work on the Auralisation of Acoustics in Architecture (A3) project. One of its goals was to explore the use of ray-tracing techniques to model the sound qualities of a room. Such a tool could help optimise the acoustics of an existing or future concert hall, improving the audience’s listening experience. It could also help recreate the sound characteristics of ruined historical spaces.

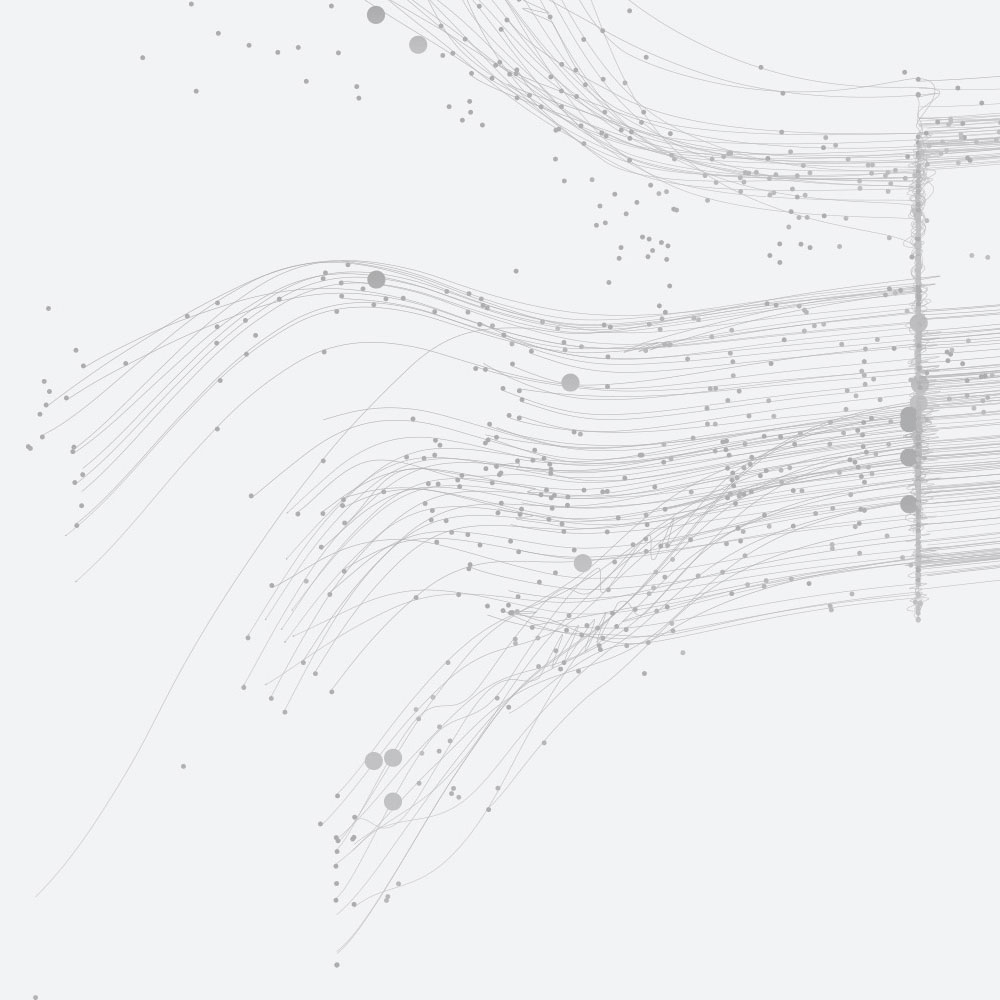

Ray tracing in acoustics has much in common with ray tracing in graphics. In both, most of the work lies in finding the nearest triangle in a surface mesh that a given ray intersects, a search generally accelerated with structures such as bounding-volume hierarchies (BVHs) or voxel grids. The difference lies in the output: in graphics the goal is to generate an image, whereas in architectural acoustics it is to generate an impulse response, which allows one to analyse and recreate the acoustics of a room such as a concert hall.
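To make the nearest-triangle search concrete, here is a minimal Python sketch. It uses the Möller-Trumbore ray-triangle test, a standard algorithm chosen here purely for illustration; the project's actual intersection code is not described here. The function names and the tuple-based mesh format are hypothetical. The search deliberately checks every triangle in turn, which is exactly the cost that a BVH or voxel grid is designed to avoid.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]

EPS = 1e-12  # tolerance for parallel rays and for hits at the ray origin


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect(origin: Vec3, direction: Vec3, tri: Triangle) -> Optional[float]:
    """Moller-Trumbore test: distance t along the ray to tri, or None on a miss."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det  # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > EPS else None  # ignore hits behind (or at) the origin


def nearest_hit(origin: Vec3, direction: Vec3,
                triangles: Sequence[Triangle]) -> Tuple[Optional[int], Optional[float]]:
    """Brute-force search over every triangle; returns (index, t) of the closest hit.

    An acceleration structure (BVH, voxel grid) answers the same query
    while skipping most of the triangles.
    """
    best_i: Optional[int] = None
    best_t: Optional[float] = None
    for i, tri in enumerate(triangles):
        t = intersect(origin, direction, tri)
        if t is not None and (best_t is None or t < best_t):
            best_i, best_t = i, t
    return best_i, best_t


if __name__ == "__main__":
    # Two parallel triangles at z = 3 and z = 1; a ray fired up the z-axis
    # from the origin should report the nearer one (index 1, distance 1).
    mesh = [((-1.0, -1.0, 3.0), (1.0, -1.0, 3.0), (0.0, 1.0, 3.0)),
            ((-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (0.0, 1.0, 1.0))]
    print(nearest_hit((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), mesh))  # (1, 1.0)
```

In an acoustic tracer this query would be repeated at every reflection of every ray, which is why the choice of acceleration structure dominates performance.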

While ray tracing is strictly a high-frequency approximation (unlike wave-based methods for acoustics simulation, which are valid at all frequencies), it is useful because its computational cost can be significantly lower than that of brute-force wave-based methods. Even so, ray tracing for room acoustics is by no means trivial. Using only the strict principles of classic geometric acoustics (see L. Cremer and H. A. Müller, Principles and Applications of Room Acoustics, vol. 1. Chapman & Hall, 1982), the computational cost of ray tracing in room acoustics scales primarily with the reverberation time of the room (the time it takes a sound to decay by a factor of 1000 in amplitude, i.e. by 60 dB). In a typical concert hall this might mean firing billions of rays, each of which may bounce through the room hundreds of times.
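The link between reverberation time and cost can be seen with some back-of-the-envelope arithmetic. The Python sketch below assumes a speed of sound of 343 m/s and uses the standard diffuse-field mean free path, 4V/S (room volume V over surface area S), as the average distance between reflections; neither comes from the project itself, and the hall dimensions in the example are hypothetical. A ray followed for the full reverberation time travels c·T metres, so the number of reflections per ray, and hence the number of nearest-triangle searches, grows linearly with T.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 degrees C


def level_drop_db(amplitude_factor: float) -> float:
    """Level change in decibels for a given reduction factor in amplitude."""
    return 20.0 * math.log10(amplitude_factor)


def mean_free_path(volume_m3: float, surface_m2: float) -> float:
    """Average distance between reflections in a diffuse field, 4V/S."""
    return 4.0 * volume_m3 / surface_m2


def reflections_per_ray(rt_s: float, volume_m3: float, surface_m2: float) -> int:
    """Expected reflections while a ray is followed for rt_s seconds.

    The ray travels SPEED_OF_SOUND * rt_s metres; dividing by the mean
    free path gives the expected number of surface hits.
    """
    path_m = SPEED_OF_SOUND * rt_s
    return math.ceil(path_m / mean_free_path(volume_m3, surface_m2))


def nearest_hit_queries(n_rays: int, rt_s: float,
                        volume_m3: float, surface_m2: float) -> int:
    """Rough total of nearest-triangle searches for a whole simulation."""
    return n_rays * reflections_per_ray(rt_s, volume_m3, surface_m2)


if __name__ == "__main__":
    # Hypothetical hall: 20,000 m^3, 8,000 m^2 of surface, 2 s reverberation time.
    print(level_drop_db(1000.0))  # the 60 dB decay that defines the reverberation time
    print(reflections_per_ray(2.0, 20_000.0, 8_000.0))
    print(nearest_hit_queries(10**9, 2.0, 20_000.0, 8_000.0))
```

With these made-up figures the mean free path is 10 m, so each ray reflects roughly 69 times in 2 s, and a billion rays implies tens of billions of intersection queries. Doubling the reverberation time roughly doubles that total, which is the scaling described above.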

Because this team had previously worked together on the five-year ERC-funded NESS project, the collaboration in this project has been extremely productive: I wrote prototype code in Matlab, James Perry made the initial ports to C++, and we both carried out further back-and-forth testing and optimisation. Through many optimisations, including efficient parallelisation over multiple CPUs and advanced vectorisation, we were able to ray trace a concert hall in a matter of minutes, as opposed to the days required by the Matlab prototypes.

Our aim is to get this new ray-tracing tool (and other wave-based tools developed as part of the parallel ERC-funded Wave-based Room Acoustic Modelling project) into the hands of practitioners, to study how auralisation can be used in the early phases of architectural design.

The nine-month A3 project was funded by a grant from the CAHSS Challenge Investment Fund.