Mining digital historical textual data

Author: Rosa Filgueira

Posted: 23 Oct 2019 | 10:43

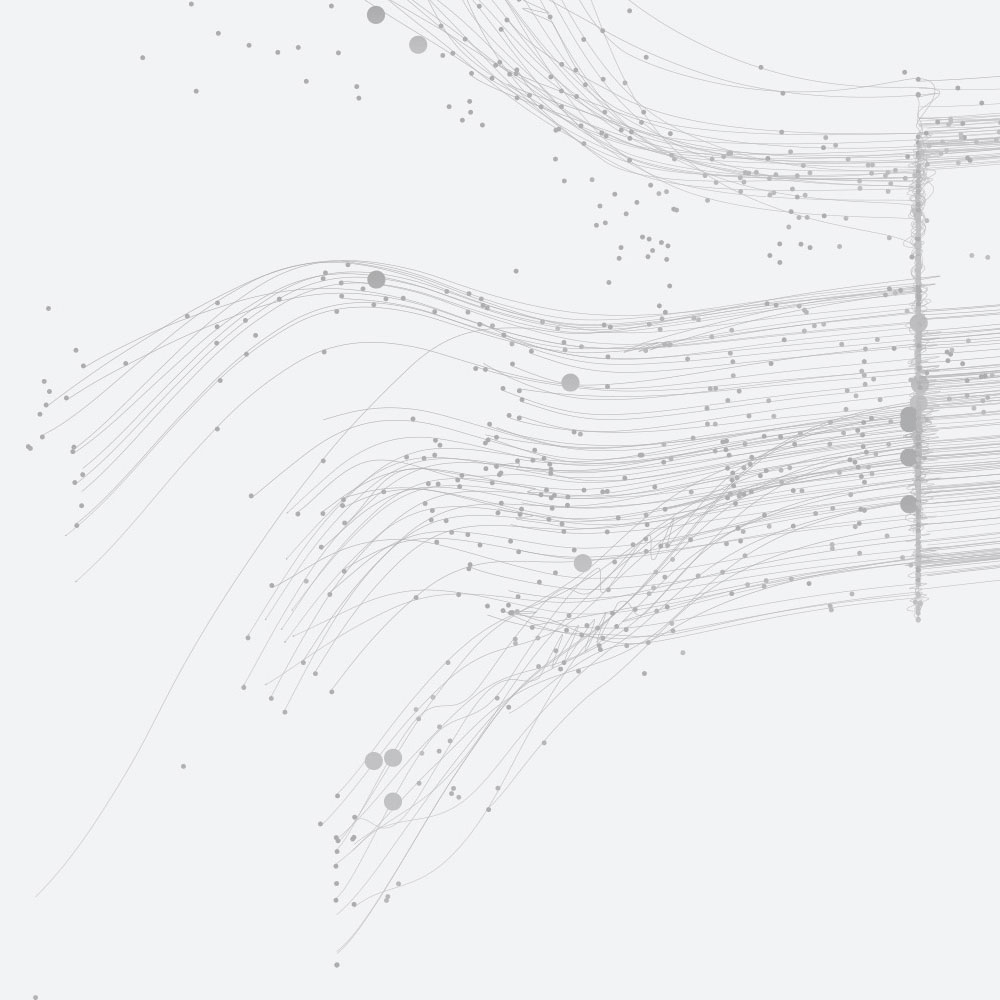

Over the last three decades the collections of libraries, archives and museums have been transformed by large-scale digitisation. The volume and quality of available digitised text now make searching and linking these data feasible, where previous attempts were restricted by limited data availability, poor data quality, and a lack of shared infrastructure. One example of this is the extensive digital collection offered by the National Library of Scotland (NLS) (see Figure 1) [1], which can be accessed online and also downloaded for further digital humanities research.

However, mining historical text data at scale is not an easy task and poses several challenges. For example, digital collections vary in the quality of their OCR output, and poor-quality text creates problems for searching and for research based on imperfect digital copies. Another challenge is the heterogeneity in how the structure and textual content of a digital document are represented. Many XML schemas can be used for digitising documents, such as METS [2], ALTO [3], MODS [4], and library-specific schemas. Mining at scale also requires the capacity and/or skills to use High-Performance Computing environments and analytics frameworks.

Therefore, at EPCC we have been working across several projects (ATI-SE, Living with Machines, and Text Data Mining services) to mitigate some of these obstacles by providing a new scalable text-mining toolbox called defoe [5][6]. defoe allows us to query, at scale, the wealth (Footnote 1) of openly licensed digitised newspapers and books, which enables historians to be in control of their text-mining research.

“All this work provides the means to search across large-scale datasets and to return results for further analysis and interpretation by historians.”

Melissa Terras, Professor of Digital Cultural Heritage, College of Arts, Humanities, and Social Sciences at the University of Edinburgh

Coming back to the NLS digital collection, we have been collaborating with Melissa Terras [7] to start running text analyses using defoe across the first eight editions of Encyclopaedia Britannica, issued between 1768 and 1860, which comprise a total of 143 volumes [8].

After downloading the full dataset (Footnote 2) available (155,388 ALTO XML files, 195 METS files and 155,388 image files), we started by simply exploring the popularity of different topics over time, such as:

- Sports (eg golf, rugby, tennis, football, shinty)

- Scottish cities (Aberdeen, Dundee, Edinburgh, Glasgow, Stirling, Inverness, Perth)

- Scottish philosophers (Francis Hutcheson, David Hume, Adam Smith, Dugald Stewart, Thomas Reid)

To do this, depending on the topic, we first run either the keysentence_by_year [9] defoe query (eg for the Scottish philosophers topic) or the keyword_by_year [10] defoe query (eg for the Scottish cities topic). Each query obtains the frequencies of keysentences or keywords by page and groups the results by year.
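To make the idea of a keyword-by-year query concrete, here is a minimal sketch in plain Python. It is not defoe's implementation: the function name `keyword_counts_by_year`, the `(year, page_text)` input format and the sample pages are all assumptions made for illustration, and multi-word keysentences would need phrase matching that is not shown here.

```python
import re
from collections import Counter, defaultdict


def keyword_counts_by_year(pages, keywords):
    """Count keyword occurrences page by page and group them by year.

    `pages` is an iterable of (year, page_text) pairs (a hypothetical
    input format, not the one defoe uses).
    """
    targets = {keyword.lower() for keyword in keywords}
    by_year = defaultdict(Counter)
    for year, text in pages:
        # Lowercase the page and split it into alphabetic tokens.
        for token in re.findall(r"[a-z]+", text.lower()):
            if token in targets:
                by_year[year][token] += 1
    # Convert to plain dicts, ordered by year, for easy inspection.
    return {year: dict(counts) for year, counts in sorted(by_year.items())}


if __name__ == "__main__":
    # Made-up sample pages, only to exercise the function.
    sample_pages = [
        (1771, "Edinburgh is the capital. Glasgow and Edinburgh trade."),
        (1771, "Perth lies to the north."),
        (1810, "Glasgow grew; Glasgow prospered."),
    ]
    cities = ["Edinburgh", "Glasgow", "Perth"]
    print(keyword_counts_by_year(sample_pages, cities))
```

Matching is case-insensitive and on whole tokens only, which is a simplification: OCR errors and spelling variants in historical text would need extra handling.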

Next we run a normalize [11] query, which iterates through the Encyclopaedia's editions and counts the total number of documents, pages and words per year. The results of this query allow us to normalise the previous frequencies, so that years with more published text do not dominate simply because of their size. Finally, the results are displayed using n-grams.
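The normalisation step can be sketched as follows. This is an illustrative example rather than defoe's code: the names `totals_by_year` and `normalise_by_words`, the `(year, page_text)` input format and the choice to divide by the number of words (rather than pages or documents) are assumptions.

```python
from collections import defaultdict


def totals_by_year(pages):
    """Count the pages and whitespace-separated words seen for each year.

    `pages` is an iterable of (year, page_text) pairs (hypothetical format).
    """
    totals = defaultdict(lambda: {"pages": 0, "words": 0})
    for year, text in pages:
        totals[year]["pages"] += 1
        totals[year]["words"] += len(text.split())
    return dict(totals)


def normalise_by_words(counts_by_year, totals):
    """Divide each raw keyword count by the total words for that year."""
    return {
        year: {kw: n / totals[year]["words"] for kw, n in counts.items()}
        for year, counts in counts_by_year.items()
    }


if __name__ == "__main__":
    # Made-up pages and raw counts, only to exercise the functions.
    sample_pages = [
        (1771, "a b c d"),
        (1771, "e f g h i j"),
        (1810, "k l m n o"),
    ]
    raw_counts = {1771: {"edinburgh": 2}, 1810: {"glasgow": 1}}
    totals = totals_by_year(sample_pages)
    print(normalise_by_words(raw_counts, totals))
```

Dividing by words per year gives a relative frequency that can be compared across editions of very different sizes; dividing by pages instead would be an equally reasonable choice for some questions.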

In the near future, the plan is to create a new text-mining platform that allows researchers who are not computational experts to select and run large-scale text-mining queries across different digital collections. Through a simple user interface, researchers will be able to customise a query by selecting which corpus to use (eg Encyclopaedia Britannica) and setting some configuration parameters (eg keysentence lists). defoe will sit at the backend of this platform: it will receive the query and its configuration parameters, run the query across the specified dataset, and return the results to the platform. The platform will also offer researchers different visualisations for displaying the results.

Footnote 1: defoe supports METS, MODS, ALTO, British-Library-specific and PaperPast-specific XML schemas. It also has 5 object models (PAPER, NZPP, ALTO, FMP, NLS) to map the physical representations and XMLs mentioned before.

Footnote 2: METS describes the structure of the collection. ALTO describes the textual content of a digitised page and also the spatial coordinates of every column, line, and word as it appears on the page.
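As an illustration of what Footnote 2 describes, the sketch below reads the words and their positions from a tiny, hand-written ALTO fragment using Python's standard library. The sample XML and the function name `alto_words` are made up for this example; real ALTO files from a collection are much larger and may use a different namespace version, which is why the code matches on the local element name only.

```python
import xml.etree.ElementTree as ET

# Hand-written ALTO fragment for illustration only.
SAMPLE_ALTO = """<alto xmlns="http://www.loc.gov/standards/alto/ns-v2#">
  <Layout>
    <Page ID="P1">
      <PrintSpace>
        <TextBlock ID="TB1">
          <TextLine>
            <String CONTENT="EDINBURGH" HPOS="100" VPOS="200" WIDTH="300" HEIGHT="40"/>
            <SP/>
            <String CONTENT="castle" HPOS="420" VPOS="200" WIDTH="180" HEIGHT="40"/>
          </TextLine>
        </TextBlock>
      </PrintSpace>
    </Page>
  </Layout>
</alto>"""


def alto_words(xml_text):
    """Return (word, horizontal position, vertical position) for each String."""
    root = ET.fromstring(xml_text)
    words = []
    for elem in root.iter():
        # Strip the namespace so any ALTO version is accepted.
        if elem.tag.rsplit("}", 1)[-1] == "String":
            words.append(
                (elem.get("CONTENT"), float(elem.get("HPOS")), float(elem.get("VPOS")))
            )
    return words


if __name__ == "__main__":
    for word, hpos, vpos in alto_words(SAMPLE_ALTO):
        print(word, hpos, vpos)
```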

References

[1] https://data.nls.uk/data/digitised-collections/

[2] http://www.loc.gov/standards/mets/

[3] https://www.loc.gov/standards/alto/

[4] http://www.loc.gov/standards/mods/

[6] https://github.com/alan-turing-institute/defoe

[7] https://www.ed.ac.uk/profile/professor-melissa-terras

[8] https://data.nls.uk/data/digitised-collections/encyclopaedia-britannica

[9] https://github.com/alan-turing-institute/defoe/blob/master/defoe/nls/queries/keysentence_by_year.py

[10] https://github.com/alan-turing-institute/defoe/blob/master/defoe/nls/queries/keyword_by_word.py

[11] https://github.com/alan-turing-institute/defoe/blob/master/defoe/nls/queries/normalize.py