Optimising sparse-matrix vector multiplication on Intel Xeon Gold processors

27 August 2020

Edoardo Coronado visited EPCC from 18th May–15th August 2019 under the HPC-Europa3 programme. Here he gives us an update on his research since his previous blog article.

During my visit to EPCC in 2019 under the auspices of the HPC-Europa3 programme, I worked on optimising routines to perform the Sparse Matrix-Vector (SpMV) product, a common operation in lots of scientific applications, on Intel Xeon Gold processors and Graphics Processing Units (GPUs).

I studied scan algorithms that could be used for parallel processing in order to speed up the reduction step, where all the information is gathered back together, as required for the SpMV operation. I found several interesting works oriented towards GPUs [1] [2]. These covered different performance issues for the reduction and scan operations, such as: memory coalescing, divergent branching and workload imbalance among the threads. CUDA implementations of the algorithms were presented for the reduction and scan operations, but none of these was targeted at the Intel Xeon architecture.

A little more digging led me to a post entitled: “Can You Write a Vectorized Reduction Operation?” [3] in the Intel Developers' Zone blog, where its author, Clay B., implemented and explained a reduction function using the Intel Advances Vectorized Instruction (AVX) for 256-bits registers. Thus, the scarce amount of information for the Intel Xeon architecture and the current integration of 512-bits registers on the Intel Xeon platform motivated me to write this post. This post thus updates Clay B.'s post, and offers two implementations using AVX-512 instruction for the scan operation. A quantitative performance comparison is then made between these two variants: the first is based on the down-sweep algorithm while the second is based on the work-efficient algorithm; both variants are implemented using double precision arithmetic.

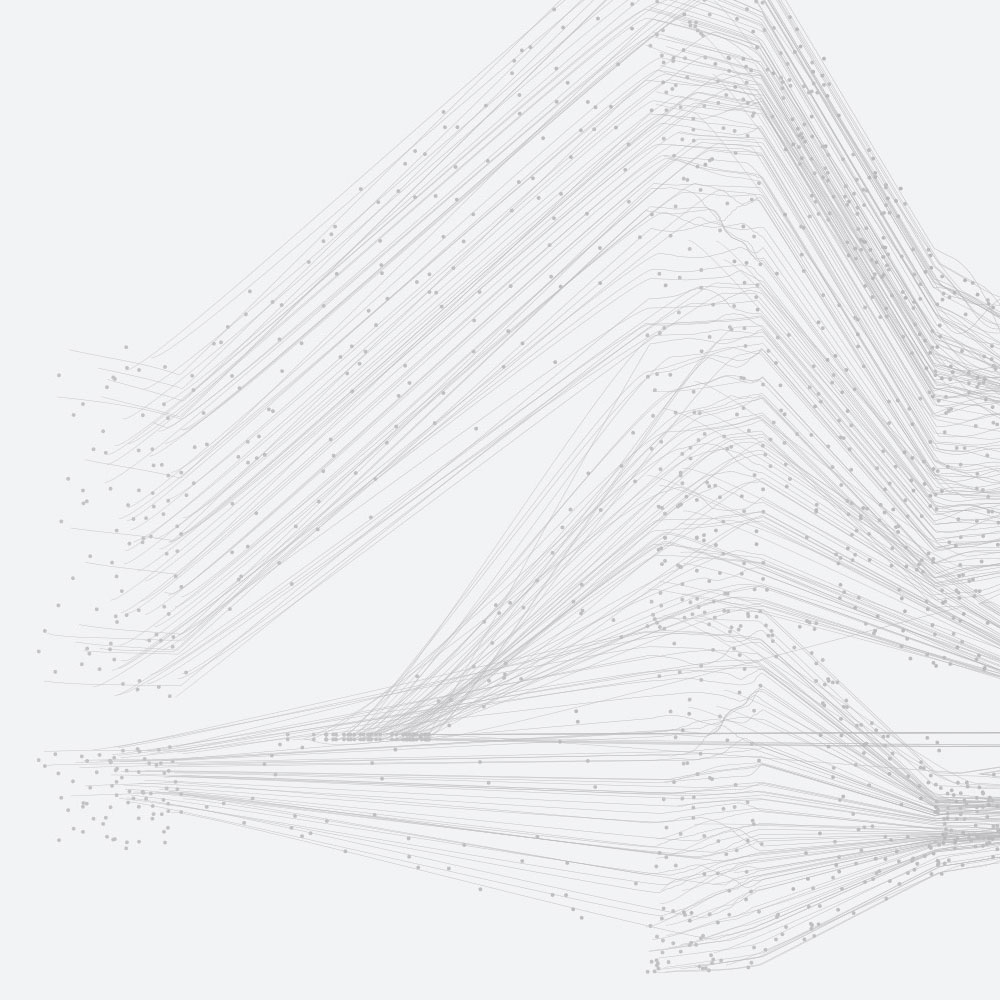

In order to clarify the scan algorithms employed in this work, the Figures 1a and 2a illustrate the scan operation for an eight-digit sequence for each strategy.

Figure 1: Down-sweep scan. A green font is used to indicate line number in Listing 1.

Similarly, to illustrate the use of the AVX-512 instructions used for both implementations, the computational kernels to perform the scan operation for an eight-digit sequence are shown in Listing 1 (the down-sweep algorithm) and Listing 2 (the work-efficient algorithm).

__m512d dsScan( __m512d vtVal )

{

__m512i vtIdx = _mm512_set_epi64 ( 6 , 5 , 4 , 3 , 2 , 1 , 0 , 0 );

__m512d vtAux = _mm512_permutexvar_pd ( vtIdx , vt Val );

__m512d vtRed = _mm512_mask_add_pd ( vtVal , 0xFE , vtVal , vtAux );

vtIdx = _mm512_set_epi64( 5 , 4 , 3 , 2 , 1 , 0 , 1 , 0 );

vtAux = _mm512_permutexvar_pd( vtIdx , vtRed );

vtRed = _mm512_mask_add_pd( vtRed , 0xFC, vtRed, vtAux );

vtIdx = _mm512_set_epi64( 3 , 2 , 1 , 0 , 3 , 2 , 1 , 0 );

vtAux = _mm512_permutexvar_pd( vtIdx, vtRed );

vtRed = _mm512_mask_add_pd( vtRed , 0xF0, vtRed, vtAux );

return ( vt Red );

}

Listing 1: Kernel for the down-sweep scan.

__m512d weScan(__m512d vtVal)

{

__m512i vtIdx = _mm512_set_epi64( 6 , 6 , 4 , 4 , 2 , 2 , 0 , 0 );

__m512d vtAux = _mm512_permutexvar_pd( vtIdx, vtVal);

__m512d vtRed = _mm512_mask_add_pd( vtVal, 0xAA, vtVal, vtAux);

vtIdx = _mm512_set_epi64( 5 , 6 , 5 , 4 , 1 , 2 , 1 , 0 );

vtAux= _mm512_permutexvar_pd(vtIdx, vtRed);

vtRed = _mm512_mask_add_pd(vtRed, 0x88, vtRed, vtAux);

vtIdx = _mm512_set_epi64( 3 , 6 , 5 , 4 , 3 , 2 , 1 , 0 );

vtAux = _mm512_permutexvar_pd( vtIdx, vtRed );

vtRed = _mm512_mask_add_pd( vtRed, 0x80, vtRed, vtAux);

vtIdx = _mm512_set_epi64( 7 , 6 , 3 , 4 , 3 , 2 , 1 , 0 );

vtAux = _mm512_permutexvar_pd( vtIdx, vtRed );

vtRed = _mm512_mask_add_pd(vtRed, 0x20 , vtRed, vtAux);

vtIdx = _mm512_set_epi64( 7 , 5 , 5 , 3 , 3 , 1 , 1 , 0 );

vtAux = _mm512_permutexvar_pd( vtIdx, vtRed);

vtRed = _mm512_mask_add_pd(vtRed , 0x54, vtRed, vtAux);

return ( vtRed);

}

Listing 2: Kernel for the work-efficient scan.

For illustrative purposes, a memory map is shown in Figures 1b and 2b for the output variable of each code line from both functions. Figures 1 and 2 show that each step, for both algorithms, requires the consecutive use of the same instructions.

Figure 2: The down-sweep scan. A green font indicates the line number in Listing 2.

We only need to look at one step of either algorithm to understand each function and to see how the AVX-512 instructions needed by these kernels is used. Let us start the breakdown by looking at step 1 in the dsScan function in Listing 1, assuming that the variable vtVal has in its memory space the values of the input sequence shown in Figure 1a.

In line 3 of Listing 1, the instruction __mm512_setepi64 packs eight 64-bits integers into the variable vtIdx, these integers are used as indices to indicate the vector lanes from which data is to be copied from. Notice that the order of the integers in the code is inverse to the order of the memory map. In line 4 of Listing 1, the instruction __mm512_permutexvar_pd shuffles the 64-bit elements in vtVal across the lanes set out by vtIdx and stores them in vtAux. The variable vtAux contains the values from vtVal necessary to perform the addition marked by step 1 of the down-sweep algorithm in Figure 1a. Lastly, in line 5 of Listing 1, the instruction __mm512_mask_add_pd adds the elements from vtVal positioned in the second place (from left to right) and also vtAux if the corresponding bits of the mask 0xFE are set to 1 then it copies the elements of the vtVal positioned in the first place (from left to right) if the corresponding bits of the mask 0xFE are set to 0; notice that the first and second hexadecimal of the mask 0xFE affect the last and the first four lanes of the vector variable (Figure 1).

The dsScan and weScan functions in Listings 1 and 2 can only scan eight-digit sequences. However, these functions can be used as basic blocks to construct routines to scan larger sequences of numbers. In this work, these functions were used to code routines to scan sequences of: 64, 512, 4096, 32768 and 262144 elements. These functions were tested by running 5000 iterations of each implementation and using the 40 threads of the Intel Xeon Gold 6148 (Skylake) processors contained in the GPU nodes of the Cirrus EPCC service. The results obtained are shown in Table 1.

Function

Number of elements in the input sequence

64 512 4096 32768 262144

dsScan

weScan

8.19

8.32

28.07

17.98

48.58

33.07

78.39

40.57

101.82

58.33

Table 1: Average time (in µs) expended during the scan operation for sequences of different lengths.

Clearly, the work-efficient scan is faster than the sweep-down scan on the Intel Xeon platform. Despite the fact that the former requires more steps, and consequently requires more instructions to be executed, than the latter, its performance improves as the size of the input sequence increases. The highest percentage of improvement (48.25%) was obtained for a sequence of 32768 elements.

In conclusion, this exercise attempts to exemplify the use of the AVX-512 instructions by implementing two variants of a very important operation for a myriad of numerical applications such as the scan operation. By doing so, this works proves that the work-efficient scan algorithm is as fast on the Intel Xeon platform as it is on the NVIDIA architecture (Table 1) and it also validates the performance metric over the lines of code metric.

References

[1] Keynote titled "Optimizing Parallel Reduction in CUDA" by Mark Harris

[2] "Efficient Parallel Scan Algorithms for GPUs" by Shubhabrata Sengupta, Mark Harris and Michael Garland

[3] https://software.intel.com/content/www/us/en/develop/blogs/can-you-write-a-vectorized-reduction-operation.html