Planning for high performance in MPI

25 January 2018

Many HPC applications are built around an iterative algorithm: they repeat the same steps many times, with the data gradually converging to a stable solution. Examples of this archetype appear in structural engineering, fluid flow, and many other kinds of physical simulation code.

This repetitive pattern of operations is also found in machine learning (ML), in particular Deep Learning (DL). To learn, the application iteratively builds a network of transformations that takes some kind of input and produces a desired output. The first transformations are just wild guesses, but with feedback about the quality of the output used to adjust each subsequent transformation, better guesses are discovered until the network converges on a solution.

Repetitive computational operations benefit from planning ahead: anticipating and reserving the resources to be used for each operation to avoid oversubscription and contention.

In MPI, planning is achieved by using ‘persistent’ operations. Currently, only point-to-point communication operations can be persistent, but the MPI Forum is considering a proposal to extend this planning concept to collective communication operations: so-called persistent collectives. For anyone familiar with MPI as it is now, the syntax is a natural extension of the persistent point-to-point syntax. An initialisation function creates the operation, which can then be started and completed as many times as needed, and finally the resources associated with the operation are freed.

However, there are interesting aspects in the semantics of how persistent collectives work that are different to how existing persistent point-to-point operations work and different to how existing non-persistent collective operations work.

One difference, which is very helpful for the Deep Learning use-case, is ordering. For each MPI communicator, collective operations must be called in the same order by all MPI processes that participate in them, so that MPI knows which calls match up. For persistent collective operations, it turns out that this ordering requirement needs to apply either to the initialisation functions or to the start functions, but not to both. The current proposal chooses to require ordering of the initialisation functions. This is good for DL because the setup of the training network is always done in order, whereas the collective operations might be started in a different order at each process because of load imbalances or system noise.

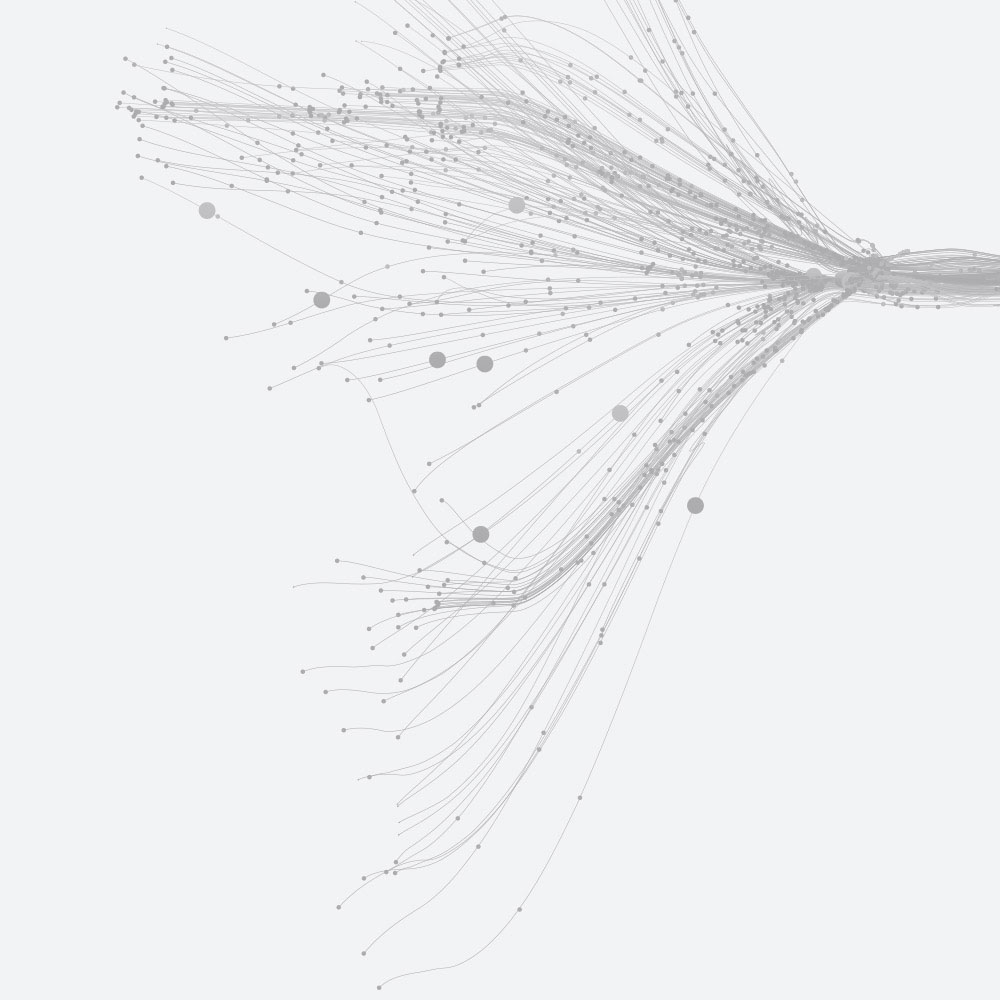

A simple implementation of the initialisation functions could just duplicate the communicator, so that each persistent collective operation uses a different communicator, which frees MPI from needing to impose any ordering on subsequent ‘start’ function calls. Of course, it is hoped that MPI library writers will find much better ways to implement this, such as using tagged collectives or reserving hardware resources for each operation (for example buffers, dedicated paths through the communication fabric hardware, or triggered operations in network cards).

The impact on applications should be a dramatic improvement in the time-to-solution because a major source of communication overhead can be effectively removed from the critical path. This proposal is close to being ratified by the MPI Forum: it might even be announced at SC18 later this year and become available in MPI libraries soon after that.

Further reading

1) MPI Persistence WG. 2017. Adding persistent collective communication. In MPI Forum proposal. URL: https://github.com/mpi-forum/mpi-issues/issues/25

2) Bradley Morgan, Daniel J. Holmes, Anthony Skjellum, Purushotham Bangalore, and Srinivas Sridharan. 2017. Planning for performance: persistent collective operations for MPI. In Proceedings of the 24th European MPI Users' Group Meeting (EuroMPI '17). ACM, New York, NY, USA, Article 4, 11 pages. DOI: https://doi.org/10.1145/3127024.3127028

3) Bradley Morgan, Daniel J. Holmes, Anthony Skjellum, Purushotham Bangalore, and Srinivas Sridharan. 2018. Planning for Performance: Enhancing Achievable Performance for MPI through Persistent Collective Operations. In Parallel Computing. Elsevier. [In press]

4) Auburn researchers develop new functionality to improve supercomputer performance. URL: http://eng.auburn.edu/news/2018/03/bradley-morgan-supercomputer-research.html