Spark-based genome analysis on Cray-Urika and Cirrus clusters

16 January 2019

Analysing genomics data is a complex and compute-intensive task, generally requiring numerous software tools and large reference data sets, tied together in successive stages of data transformation and visualisation.

Typically, in a cancer genomics analysis, both a tumour sample and a “normal” sample from the same individual are first sequenced using NGS systems and compared using a series of quality control stages. The first stage, ‘Sequence Quality Control’ (which is optional), checks sequence quality and performs some trimming. The second, ‘Alignment’, involves a number of steps, such as alignment, indexing, and recalibration, to ensure that the alignment files produced are of the highest quality, as well as several more to ensure that the variants are called correctly. Both stages comprise a series of intermediate compute- and data-intensive steps that are very often handcrafted by researchers and/or analysts.

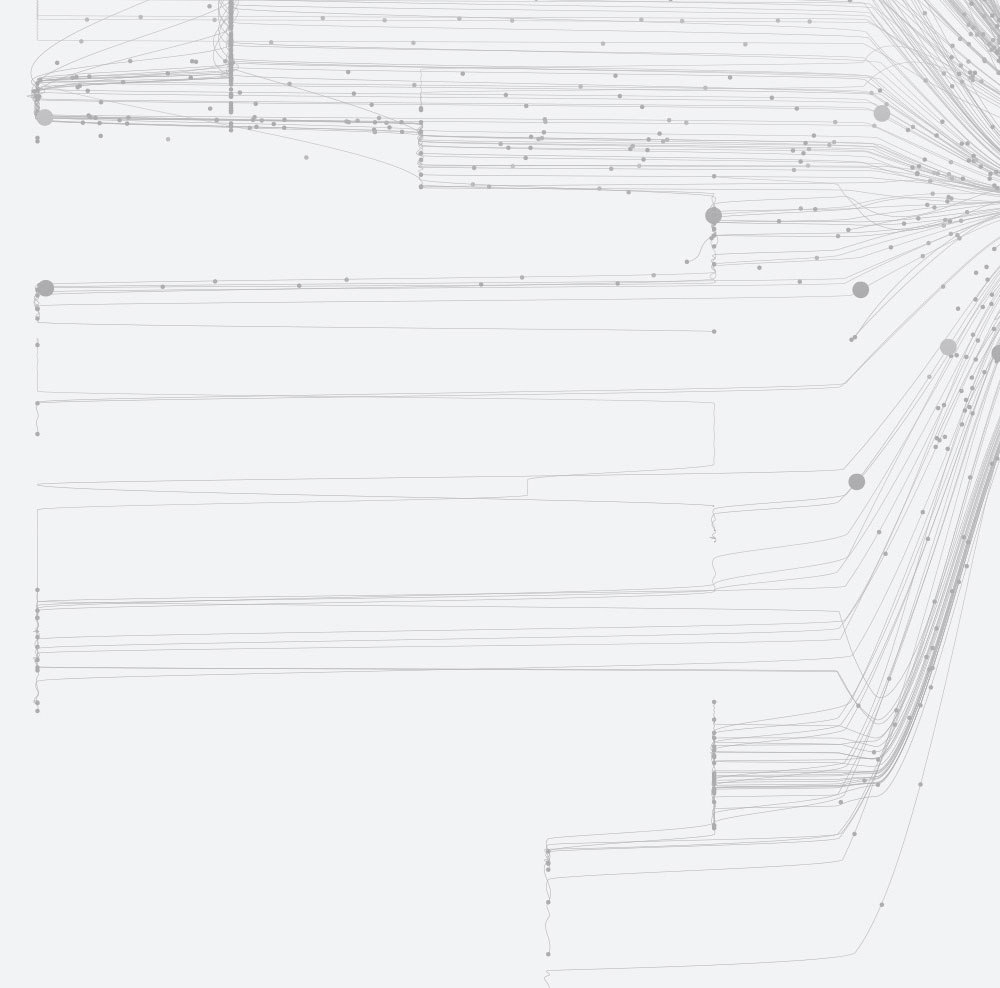

Given the projected increase in the use of genome analysis in medical practice, it is vital for biomedical genomics research to use data analytics frameworks and computing environments that can handle this large amount of data automatically and robustly, with speed, scalability, and flexibility. Therefore, as part of BioExcel, we have focused on improving the ease of use and scalability of cancer genomic analysis by providing a new Alignment workflow for fully automated, high-throughput analysis in the ‘Alignment’ stage. As part of this work, we benchmarked two compute-intensive tools used in the recalibration step (Figure 1) of the workflow, BaseRecalibrator and ApplyBQSR (provided by GATK), to measure their performance under different settings and computational infrastructures.

Figure 1: Recalibration step of the Alignment workflow[1], which uses two GATK Spark-based tools.

The recalibration process involves two key steps that can be summarised as follows. For an aligned sequence data file, the BaseRecalibrator tool detects systematic errors made by the sequencer when it estimates the quality score of each base call. It does so by applying a machine learning algorithm, which uses the known sites file to help distinguish true variants from false positives, and it computes a recalibrated table with the adjustments necessary for the whole sequence. The ApplyBQSR tool then applies the recalibrated table to the initial sequence file and produces a new adjusted/recalibrated aligned sequence file for further analysis.

With the latest GATK release, both tools have been completely reengineered to support an open-source model that relies on Apache Spark to provide single-node multithreading and multi-node scaling capabilities, increasing their speed and scalability. In prior versions, these tools were limited to running in a single-node shared-memory environment.

To run these new Spark-based tools in parallel on a single node (multithreading), it is not necessary to have a Spark cluster configured. The GATK engine can still use Spark to dynamically create a single-node standalone cluster in place, using as many cores as are available on the node. In our case, however, we were interested in testing the scalability of BaseRecalibrator and ApplyBQSR using a multi-node Spark cluster. Therefore, we used two different HPC environments for our experiments: the Alan Turing Institute’s deployment of the Cray Urika-GX system and Cirrus (see Cirrus User Guide).

Cray Urika-GX

The Urika-GX system is a high-performance analytics cluster with a pre-integrated stack of popular analytics packages. It includes 12 compute nodes (each with 2x18-core Broadwell CPUs and 256GB of memory) and 60TB of storage (within a Lustre file system). Cirrus, by contrast, is an HPC and data science service hosted and run by EPCC at The University of Edinburgh and is one of the EPSRC Tier-2 National HPC Services. It includes 280 compute nodes (each with 2x18-core Intel Xeon processors with HyperThreading and 256GB of memory) and 406TB of storage, also within a Lustre file system.

Little work was needed to run the recalibration step of the Alignment workflow in Urika-GX using several nodes. The Urika-GX software stack includes a fault-tolerant Spark cluster configured and deployed to run under Mesos, which acts as the cluster manager. Therefore, we just needed to adjust four Spark parameters: Spark master with the URL of the Mesos master node; number of executors (worker nodes' processes in charge of running individual Spark tasks); number of cores per executor (up to 36 cores); and the amount of memory to be allocated to each executor (up to 250GB). Notice that even though Urika-GX has 12 nodes, at the time of our experiments only 9 nodes and 324 cores were available.
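As an illustration of the four parameters listed above, the following minimal Python sketch assembles a command line for a GATK Spark tool. It assumes the `gatk` launcher convention in which options after a bare `--` are handed to Spark, and that the launcher forwards `--total-executor-cores`, `--executor-cores` and `--executor-memory` to `spark-submit`. The helper name `gatk_spark_command`, the file names and the Mesos host are hypothetical placeholders, not the values used in our runs; the exact tool arguments should be checked against the GATK release in use.

```python
def gatk_spark_command(tool, tool_args, spark_master,
                       total_executor_cores, executor_cores, executor_memory):
    """Return an argument list for running a GATK Spark tool.

    Everything before the bare "--" is a tool argument; everything after
    it is intended for Spark (assumed launcher convention).
    """
    spark_args = [
        "--spark-runner", "SPARK",
        "--spark-master", spark_master,                       # URL of the cluster master
        "--total-executor-cores", str(total_executor_cores),  # cores across all executors
        "--executor-cores", str(executor_cores),              # cores per executor
        "--executor-memory", executor_memory,                 # memory per executor
    ]
    return ["gatk", tool] + list(tool_args) + ["--"] + spark_args


# Hypothetical example: four 36-core executors with 250GB each.
cmd = gatk_spark_command(
    tool="BaseRecalibratorSpark",
    tool_args=["-I", "input.bam", "-R", "reference.fasta",
               "--known-sites", "known_sites.vcf", "-O", "recal.table"],
    spark_master="mesos://mesos-master.example:5050",
    total_executor_cores=144,
    executor_cores=36,
    executor_memory="250g",
)
print(" ".join(cmd))
```

Building the command as a list rather than a single string keeps each option and its value together and avoids shell-quoting problems if the list is later passed to a process launcher.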

Cirrus

To run the same recalibration step on Cirrus, however, we first had to spin up an ad hoc multi-node standalone Spark cluster within a PBS job. This PBS job provisions the desired Spark cluster on demand and for a specific period of time by starting the master and the workers and registering all workers with the master. We decided to configure the Spark cluster with one node as the master and 30 nodes[2] as workers. Each node in Cirrus has 36 physical cores (or 72 virtual cores using HyperThreading). We therefore tested the performance of the GATK Spark-based tools on Cirrus with workers configured both with and without HyperThreading, which means that each worker was configured with 250GB of memory and either 36 cores or 72 virtual cores. This gave us 1080 cores (or 2160 virtual cores) for our experiments. Since we used the standalone mode, the master is used for scheduling resources and distributing data across the Spark cluster, which makes the setup lightweight (without a scheduler like Yarn or Mesos) but also creates a single point of failure. After setting up the Spark cluster on Cirrus, the same parameters (Spark master URL, number of executors, number of cores per executor, memory) needed to be configured for running both Spark-based tools within the workflow.
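The provisioning logic described above (start the master, start the workers, register each worker with the master) can be sketched as follows. This is a minimal planning sketch, not our PBS script: it only builds the commands that would be run on each node and leaves out how they are dispatched within the job. It assumes the standalone launch scripts shipped with Spark 2.x (`sbin/start-master.sh` and `sbin/start-slave.sh`; later Spark releases name the latter `start-worker.sh`) and the default master port 7077. The function name and the host names are hypothetical.

```python
def standalone_cluster_commands(nodes, cores_per_worker, memory_per_worker,
                                port=7077):
    """Plan a standalone Spark cluster: first node is master, rest are workers.

    Returns the master URL and a list of (host, command) pairs.
    """
    if len(nodes) < 2:
        raise ValueError("need one master node and at least one worker node")
    master, workers = nodes[0], nodes[1:]
    master_url = f"spark://{master}:{port}"

    # The master is started first so that workers have something to register with.
    commands = [(master, ["sbin/start-master.sh"])]
    for host in workers:
        commands.append((host, [
            "sbin/start-slave.sh", master_url,   # registers the worker with the master
            "--cores", str(cores_per_worker),
            "--memory", memory_per_worker,
        ]))
    return master_url, commands


# Hypothetical allocation of 31 nodes: 1 master + 30 workers, no HyperThreading.
nodes = [f"node{i:02d}" for i in range(31)]
master_url, commands = standalone_cluster_commands(nodes, 36, "250G")

worker_count = len(commands) - 1
print(master_url)                      # spark://node00:7077
print(worker_count, worker_count * 36) # 30 1080
```

With 72 cores per worker instead of 36, the same plan yields the 2160 virtual cores mentioned above.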

Experiments

It is worth mentioning that in both computing environments each Spark executor runs on a different worker node, and each executor can be configured either with 36 cores (on Urika-GX and on Cirrus without HyperThreading) or with 72 cores (on Cirrus with HyperThreading).

For our evaluations, we configured the recalibration step to use an initial sequence file (~145GB) and known sites files (~3MB); it produced a recalibrated table (260KB) and a new recalibrated file (~230GB) as output files. We performed several recalibration runs, capturing the execution time of each, while varying the total-executor-cores flag in our Spark jobs. This flag represents the total number of cores, across all executors, assigned to a Spark application, so increasing total-executor-cores automatically increases the number of executors for our runs. For example, if we set total-executor-cores to 144 in our Spark job, it will automatically use two executors on Cirrus if HyperThreading is enabled (144/72), while it will use four executors on Urika-GX and on Cirrus if HyperThreading is not enabled (144/36).
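The arithmetic in the example above can be written as a small Python helper. This is only a sketch of the relationship in our setup, where each executor occupies one whole worker node; the function name `executors_for` is hypothetical and the sketch rejects core counts that are not a whole multiple of the per-executor value, since our runs only used multiples.

```python
def executors_for(total_executor_cores, cores_per_executor):
    """Number of executors implied by a total-executor-cores value."""
    if total_executor_cores <= 0 or cores_per_executor <= 0:
        raise ValueError("core counts must be positive")
    if total_executor_cores % cores_per_executor != 0:
        raise ValueError("total cores must be a multiple of cores per executor")
    return total_executor_cores // cores_per_executor


print(executors_for(144, 72))  # Cirrus with HyperThreading -> 2
print(executors_for(144, 36))  # Urika-GX, Cirrus without HyperThreading -> 4
```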

Figure 2 shows how the execution time of both tools decreases when we use more than one executor (each executor runs on one node). Figure 3 shows the speedup for both applications. Note that Urika-GX uses HDFS for storing the input and output files, which makes it more resilient to failures but increases the time needed to produce a single file when all parts must first be merged, as is the case for the ApplyBQSR tool. Furthermore, BaseRecalibrator scales better than ApplyBQSR in both HPC environments (with and without HyperThreading) because it has more parallel tasks.

Figure 2: Execution times in minutes (Y axis) of the GATK Spark-based tools on Cirrus with HyperThreading (orange), Cirrus without HyperThreading (blue), and Urika-GX (grey) as the number of executors (X axis) varies. The number of executors used is calculated by dividing total-executor-cores by the number of cores available per node (72 on Cirrus with HyperThreading and 36 on Urika-GX and on Cirrus without HyperThreading).

We also ran several tests in both environments modifying the memory available per executor, but little improvement was achieved by using values higher than 20GB.

Conclusions

In conclusion, we have seen how both Spark-based tools improve their performance when a multi-node cluster is used. In terms of the data analytics capabilities of the two HPC environments, we found that running Spark applications on Cirrus requires a more complex process than on Urika-GX. On Cirrus we are also more exposed to failures: if the Spark master crashes, no new applications can be created.

Regarding the tools’ scalability, Urika-GX seems to perform slightly better than Cirrus when we increase the parallelism, especially for BaseRecalibrator. However, Cirrus gives us more flexibility to configure a Spark cluster, with the possibility of using a higher number of executors (and cores) for running our applications and therefore reducing the execution time of the recalibration step even further.

Figure 3: Speedup (Y axis), calculated by dividing the execution time using one executor (T1) by the execution time using P executors (Tp): S = T1/Tp. The X axis represents the number of executors.
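The speedup definition in the caption, S = T1/Tp, can be computed with a few lines of Python. The sketch below is illustrative only: the function name is hypothetical and the timings are made-up round numbers chosen to keep the arithmetic easy to follow, not the measurements plotted in the figures.

```python
def speedup(times_by_executors):
    """Map each executor count P to S = T1 / Tp.

    `times_by_executors` maps a number of executors to an execution time;
    it must contain an entry for one executor (T1).
    """
    if 1 not in times_by_executors:
        raise ValueError("need the one-executor time T1 as the baseline")
    t1 = times_by_executors[1]
    return {p: t1 / tp for p, tp in sorted(times_by_executors.items())}


# Made-up execution times in minutes, for illustration only.
example_times = {1: 120.0, 2: 60.0, 4: 40.0}
print(speedup(example_times))  # {1: 1.0, 2: 2.0, 4: 3.0}
```

In this made-up example, going from two to four executors raises the speedup from 2 to 3 rather than to 4, which is the kind of sub-linear scaling the figure is meant to reveal.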

Regarding the use of HyperThreading in Cirrus for configuring our Spark executors, in general terms BaseRecalibrator benefits from HyperThreading, since multiple tasks in this tool operate on separate data. However, when the number of executors is higher than nine, the execution times with and without HyperThreading are closer, although still lower with HyperThreading. ApplyBQSR, on the other hand, always performs better when we do not use HyperThreading for our executors, since the number of independent tasks to be performed in parallel is lower in this tool.

Footnotes

[1] The whole Alignment workflow includes a stage before this diagram, which uses other tools (e.g. BWA-Mem, Samblaster, SAMtools) to perform the alignment itself.

[2] Given the queue policy, we could have increased the number of Spark workers up to 69 nodes in Cirrus. However, only 31 exclusive nodes were available when we conducted our experiments.