TeamEPCC and the 2020 ISC Student Cluster Competition

10 June 2020

At heart, the ISC Student Cluster Competition (SCC) is about meeting interesting people and learning valuable new skills. Unfortunately, COVID-19 forced this year’s competition to be held online. As a result, teams share a remote HPC cluster provided by the National Supercomputing Center (NSCC) Singapore instead of competing with their own custom-built clusters.

This new format creates obstacles for all teams, the main ones being keeping communication within the team effective and interacting with other teams and experts. Despite the challenges of remote working, changed rules, and long job queues on the shared cluster, our motivation to win remains strong! Here is an update on how TeamEPCC has done so far in the ISC20 SCC.

Getting started

One of the challenges this year was the variety of cluster environments we had to work with. Even though security problems made Cirrus and ARCHER unavailable, the team managed to adapt and prepare for the competition. We also installed and configured the applications on the competition cluster smoothly within the first few days.

Coding challenge

Starting with the coding challenge: the application is based on Charm++, an alternative to MPI and UPC that is built on C++ and the actor pattern. The challenge consists of a simple benchmark of 8-neighbour grid communication, which has been implemented so as to optimise memory usage and allow better vectorisation. In addition, multiple layers of Charm++ and different thread/process distributions have been tried in order to find the best possible solution.
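To make the communication pattern concrete, here is a minimal Python sketch of which elements talk to each other in an 8-neighbour exchange on a 2D grid. It is not the competition code and does not use Charm++; the function name `neighbours_8`, the row-major numbering and the periodic (wrap-around) boundaries are all assumptions made for illustration.

```python
def neighbours_8(rank, rows, cols):
    """Return the ids of the 8 neighbours of `rank` on a rows x cols grid.

    Assumptions (hypothetical, for illustration only): elements are numbered
    in row-major order and the grid wraps around at its edges.
    """
    r, c = divmod(rank, cols)
    result = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # skip the element itself
            nr = (r + dr) % rows  # wrap vertically
            nc = (c + dc) % cols  # wrap horizontally
            result.append(nr * cols + nc)
    return result


if __name__ == "__main__":
    # Neighbours of element 5 on a 4 x 4 grid
    print(neighbours_8(5, 4, 4))
```

In each step of such a benchmark, every element would send a message to each of the ids returned here and receive one from each in turn, which is why the layout of data in memory and the mapping of elements to cores matter for performance.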

Some of the original challenges for this year’s SCC were changed. The classic HPC Challenge benchmarks were removed and new ones were introduced. One of them is Gromacs, a molecular dynamics application that simulates the Newtonian equations of motion for systems with hundreds to millions of particles. Despite the obstacles to accessing the cluster, the team compiled and ran the Gromacs challenge on several architectures and clusters, including Cirrus, ARCHER and the NSCC cluster. Performance tests were also run on both CPUs and GPUs.
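As a rough illustration of what simulating Newtonian equations of motion involves, the sketch below integrates a single particle on a spring with the velocity Verlet scheme. This is a toy example rather than Gromacs code; the function name `velocity_verlet`, the harmonic force and all parameter values are assumptions chosen only to keep the example small.

```python
import math


def velocity_verlet(x, v, force, mass, dt, steps):
    """Advance position x and velocity v through `steps` time steps of size dt."""
    a = force(x) / mass
    for _ in range(steps):
        x = x + v * dt + 0.5 * a * dt * dt  # update position
        a_new = force(x) / mass             # force at the new position
        v = v + 0.5 * (a + a_new) * dt      # update velocity with the mean acceleration
        a = a_new
    return x, v


if __name__ == "__main__":
    # Hypothetical test system: unit mass on a spring with stiffness 1, F = -x
    x, v = velocity_verlet(1.0, 0.0, lambda pos: -pos, 1.0, 0.01, 1000)
    energy = 0.5 * v * v + 0.5 * x * x
    print(x, math.cos(10.0), energy)
```

A production molecular dynamics code applies the same idea to every particle at once, with forces that depend on all the other particles, which is where most of the computing time goes.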

The other new challenge of this year's ISC SCC is TinkerHP, another popular molecular dynamics application, which we have built with different compiler options and different libraries. We are also trying to find the best possible keywords to optimise the simulation. Visualisations of the COVID-19 molecules can be seen on TeamEPCC's Twitter feed: https://twitter.com/teamepcc

AI challenge

This year’s edition of the relatively new AI challenge concerns the fine-tuning and optimisation of BERT, a Deep Learning Natural Language Processing (NLP) model, for the task of Question Answering using Stanford’s SQuAD 1.1 dataset. The model has grown in popularity since its publication, which reported new state-of-the-art results for 11 NLP tasks, including SQuAD 1.1.

At the time of writing, we are at the midpoint of the competition and the team has improved the baseline F1 score by tuning the model’s hyperparameters. We are now exploring new paths for improving this score by leveraging ensemble techniques as well as looking into new ways to tweak the output layer architecture for BERT.
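For readers unfamiliar with the metric, the following sketch shows how a token-level F1 score between a predicted answer and a reference answer can be computed. It is a simplified version written for illustration: the function name `token_f1` is hypothetical, and unlike the official SQuAD evaluation it only lowercases and splits on whitespace, without stripping punctuation or articles.

```python
from collections import Counter


def token_f1(prediction, reference):
    """Token-overlap F1 between a predicted and a reference answer string."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match, one empty as a miss
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token as often as it appears in both
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(token_f1("the Eiffel Tower", "Eiffel Tower"))
```

Here the prediction contains both reference tokens plus one extra word, so recall is 1 and precision is 2/3. A score like this is typically averaged over all questions in the evaluation set.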

Cosmological simulation software

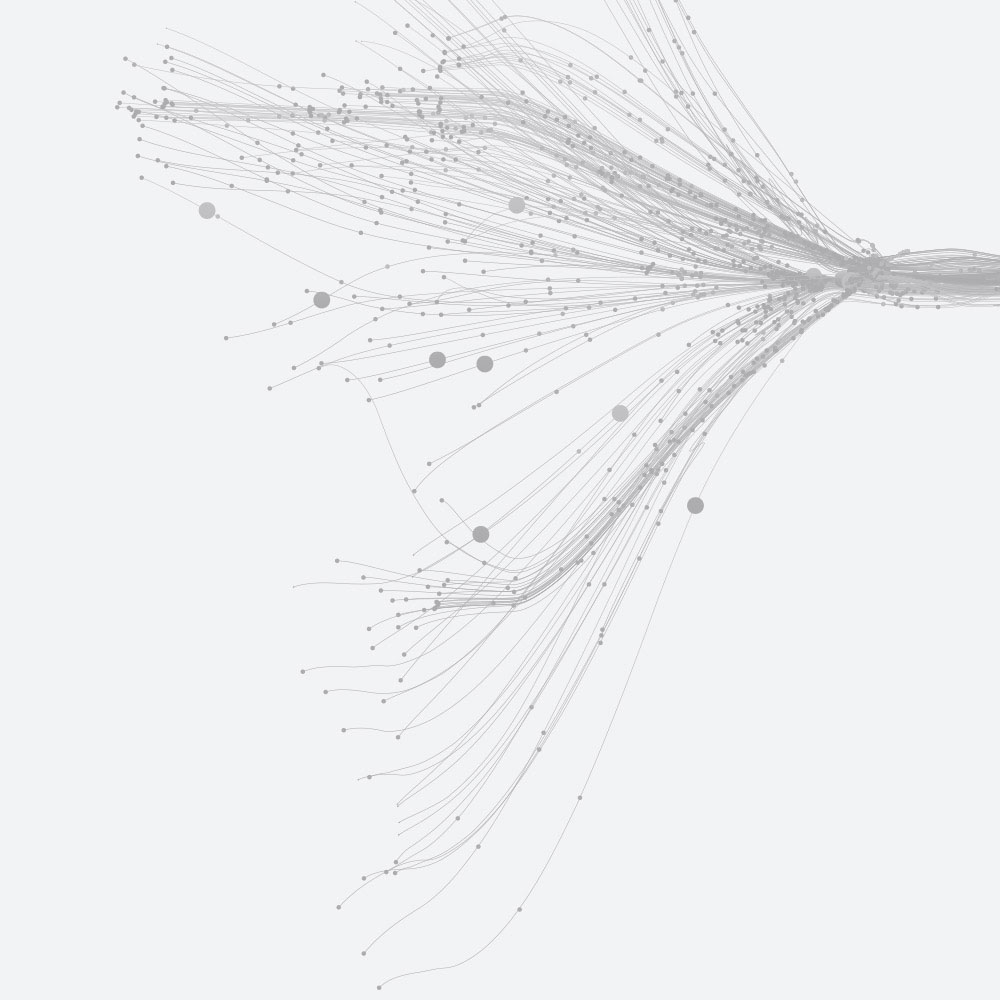

Another challenge of the competition is ChaNGa, a cosmological simulation code. It performs N-body simulations using the Barnes-Hut algorithm, which has a complexity of O(n log n), and it is parallelised using Charm++.
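The idea behind the Barnes-Hut algorithm can be sketched in a few lines: bodies are stored in a tree of nested cells, and a distant cell is replaced by a single point at its centre of mass. The Python below is a toy 2D version under several assumptions (G = 1, distinct body positions inside the square from -1 to 1, and hypothetical names such as `Node`, `build_tree`, `accel` and the opening angle `theta`); it is not taken from ChaNGa.

```python
import math
import random


class Node:
    """Square quadtree cell that tracks its total mass and centre of mass."""

    def __init__(self, cx, cy, half):
        self.cx, self.cy, self.half = cx, cy, half  # cell centre and half-width
        self.mass = 0.0
        self.mx = self.my = 0.0  # mass-weighted position sums
        self.body = None         # the single body held by a leaf
        self.children = None     # four sub-cells once the cell is split

    def _child(self, x, y):
        return self.children[2 * (x >= self.cx) + (y >= self.cy)]

    def insert(self, x, y, m):
        if self.mass == 0.0 and self.children is None:
            self.body = (x, y, m)  # empty leaf: store the body here
        else:
            if self.children is None:  # occupied leaf: split into four
                h = self.half / 2
                self.children = [Node(self.cx + sx * h, self.cy + sy * h, h)
                                 for sx in (-1, 1) for sy in (-1, 1)]
                old, self.body = self.body, None
                self._child(old[0], old[1]).insert(*old)
            self._child(x, y).insert(x, y, m)
        self.mass += m
        self.mx += m * x
        self.my += m * y


def build_tree(bodies, half=1.0):
    root = Node(0.0, 0.0, half)
    for x, y, m in bodies:
        root.insert(x, y, m)
    return root


def accel(node, x, y, theta, eps=1e-9):
    """Acceleration at (x, y) due to the bodies in `node`, with G = 1."""
    if node.mass == 0.0:
        return 0.0, 0.0
    dx = node.mx / node.mass - x
    dy = node.my / node.mass - y
    d = math.hypot(dx, dy)
    # Use the cell as one point if it is a leaf or looks small from (x, y)
    if node.children is None or 2 * node.half < theta * d:
        if d < eps:
            return 0.0, 0.0  # skip the body's interaction with itself
        f = node.mass / d ** 3
        return f * dx, f * dy
    ax = ay = 0.0
    for child in node.children:
        cax, cay = accel(child, x, y, theta, eps)
        ax += cax
        ay += cay
    return ax, ay


def direct(bodies, i):
    """Exact O(n) sum for body i, used here as a reference."""
    x, y, _ = bodies[i]
    ax = ay = 0.0
    for j, (bx, by, m) in enumerate(bodies):
        if j != i:
            d = math.hypot(bx - x, by - y)
            ax += m * (bx - x) / d ** 3
            ay += m * (by - y) / d ** 3
    return ax, ay


if __name__ == "__main__":
    random.seed(0)
    bodies = [(random.uniform(-1, 1), random.uniform(-1, 1), 1.0) for _ in range(200)]
    root = build_tree(bodies)
    print(accel(root, bodies[0][0], bodies[0][1], theta=0.5), direct(bodies, 0))
```

With `theta=0` no cell is ever approximated and the result matches the direct sum; larger values trade accuracy for speed, since each body then interacts with roughly log n cells instead of all n bodies.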

During the first week of the competition we successfully ported the code to the competition cluster. In addition, we installed and validated the simulation and measured its performance on multiple nodes when built with several communication layers, such as MPI and UCX. Next week we want to investigate the performance improvement that different compiler and configuration flags will offer, as well as test the placement of processes and threads.

Glacier modeling software

The final application of the competition is Elmer/Ice, glacier-modeling software that contributes to fighting climate change. It is written in Fortran as an add-on to Elmer. Our tasks for this challenge are to simulate and analyse the movement of the ice in Greenland. First, we built the application on the shared NSCC cluster and ran it with the given input data. We then visualised the output files using ParaView. Finally, we profiled the application with IPM and used the results to improve its performance.

For these tasks, we were allowed to try different compilers, third-party libraries, optimisation flags and configuration files to improve the performance of the applications. We were limited to 4 nodes and 96 cores when running applications in parallel on the CPU nodes. For GPU-intensive applications we had GPU nodes with up to 4 NVIDIA Tesla V100 GPUs available.
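When testing process and thread placement within such a limit, it helps to list the combinations that fill every core. The sketch below does this under the assumption that the 96 cores are spread evenly over the 4 nodes, that is 24 cores per node; the function name `hybrid_layouts` is hypothetical and the even split is our reading of the limit, not something stated in the rules.

```python
def hybrid_layouts(nodes, total_cores):
    """List (processes per node, threads per process) pairs that use every core.

    Assumes the cores are divided evenly between the nodes.
    """
    cores_per_node = total_cores // nodes
    layouts = []
    for procs_per_node in range(1, cores_per_node + 1):
        if cores_per_node % procs_per_node == 0:
            layouts.append((procs_per_node, cores_per_node // procs_per_node))
    return layouts


if __name__ == "__main__":
    for procs, threads in hybrid_layouts(4, 96):
        print(f"{procs:2d} processes per node x {threads:2d} threads per process")
```

Each pair is a candidate layout to benchmark, from one process per node with 24 threads to 24 single-threaded processes per node.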

Despite this year's changed rules and the disruption caused by the pandemic, TeamEPCC continues to work as a team no matter the distance. We are still happy to recommend that prospective EPCC students join this competition.

Watch an interview with TeamEPCC's coaches, Xu Guo and Juan Rodriguez Herrera: https://www.youtube.com/watch?v=sfIqnFnC14A&feature=youtu.be