Top500: Change or no change?

November 2017 Top500

My initial impression of the latest Top500 list, released last month at the SC17 conference in Denver, was that little has changed. This might not be the conclusion that many will have reached, and indeed we will come on to consider some big changes (or perceived big changes) that have been widely discussed, but looking at the Top 10 entries there has been little movement since the previous list (released in June).

The Top500 list is not an ideal method for benchmarking or ranking large HPC systems, as I've discussed before, because it does not generally reflect the performance that user applications would experience on a given hardware platform. However, it is the most widely recognised and quoted ranking system for large-scale computer systems, so the Top500 lists are closely watched and eagerly anticipated, at least in some quarters.

Top 10

The latest list has only one new entry in the Top 10, the Japanese Gyoukou system, coming in at fourth place with an impressive Linpack performance of around 19 PFlop/s (although currently slightly overshadowed by the recent arrest of the CEO of the hardware manufacturer). Interestingly, this is the first list where more than 10 PFlop/s performance on Linpack is required to get into the Top 10; in the June list just over 8 PFlop/s was sufficient.

It is interesting that there are no new American or European systems in the Top 10, and no new 10+ PFlop/s systems in Europe, America or, for that matter, China. This may simply be an artifact of the timing of procurement cycles, with a slew of new US and European systems expected over the next few years, but it does reflect a significant period of time without such flagship systems being created.

It's not entirely true that nothing else has changed in the Top 10. In fact, one of the systems already in the list has been upgraded, with the Los Alamos Trinity system getting a hefty slug of Intel KNL-based nodes to almost double its Linpack performance (~8 PFlop/s to ~14 PFlop/s). This system highlights the difference between multi-core and many-core processors. The old Trinity system achieved 8.1 PFlop/s (with a theoretical maximum performance of 11 PFlop/s) using 9,408 nodes and requiring 4.2 MW of power.

Power

The new Trinity system achieves 14 PFlop/s, but has a much higher theoretical maximum: 43.9 PFlop/s, using 19,392 nodes but only requiring 3.7 MW of power. On the face of it, the processors being used in Trinity look inefficient, at least from a Flop/s point of view. The theoretical peak has massively increased (>3x), but the achieved performance has not increased anywhere near as much (~1.75x). However, this performance is achieved, at least according to the list data, with less power.
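The comparison above can be made concrete with a little arithmetic. The following is a minimal sketch that uses only the Trinity figures quoted in this post; the function and variable names are my own, and the power figures are taken at face value from the list.

```python
# Figures quoted in the text for the Trinity system (PFlop/s and MW).
systems = {
    "Trinity (before upgrade)": {"rmax_pflops": 8.1, "rpeak_pflops": 11.0, "power_mw": 4.2},
    "Trinity (after upgrade)": {"rmax_pflops": 14.0, "rpeak_pflops": 43.9, "power_mw": 3.7},
}


def linpack_efficiency(rmax_pflops, rpeak_pflops):
    """Fraction of the theoretical peak that was achieved on Linpack."""
    return rmax_pflops / rpeak_pflops


def gflops_per_watt(rmax_pflops, power_mw):
    """Achieved Linpack GFlop/s per watt of listed power."""
    # 1 PFlop/s = 1e6 GFlop/s and 1 MW = 1e6 W.
    return (rmax_pflops * 1e6) / (power_mw * 1e6)


for name, s in systems.items():
    eff = linpack_efficiency(s["rmax_pflops"], s["rpeak_pflops"])
    gpw = gflops_per_watt(s["rmax_pflops"], s["power_mw"])
    print(f"{name}: {eff:.0%} of peak, {gpw:.2f} GFlop/s per W")
```

On these numbers the fraction of peak achieved drops from roughly 74% to roughly 32%, while the achieved GFlop/s per watt of listed power roughly doubles, from about 1.9 to about 3.8.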

Now, this might not mean what you think it means. The power measurement supplied probably does not include associated power requirements, such as cooling, filesystems, etc. Furthermore, this is a measure of the power required, not of the energy used. It could easily be that the new system uses less power, but more energy, to perform the Linpack calculation required for the benchmark (the calculation may take proportionally longer than with the older system).
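To illustrate the power versus energy point, here is a small sketch. The power figures are the ones listed for Trinity, but the run times are purely hypothetical (the list data discussed here does not give them), and the power draw is assumed to be constant for the whole run.

```python
def energy_mwh(power_mw, runtime_hours):
    """Energy used by a run, assuming constant power draw: E = P * t."""
    return power_mw * runtime_hours


OLD_POWER_MW = 4.2  # listed power before the upgrade
NEW_POWER_MW = 3.7  # listed power after the upgrade

# Hypothetical run times, chosen only to illustrate the point.
old_runtime_h = 10.0
new_runtime_h = 12.0

old_energy = energy_mwh(OLD_POWER_MW, old_runtime_h)
new_energy = energy_mwh(NEW_POWER_MW, new_runtime_h)

# The new system uses more energy as soon as its run takes longer than
# this multiple of the old run time.
break_even_ratio = OLD_POWER_MW / NEW_POWER_MW

print(f"Old system: {old_energy:.1f} MWh, new system: {new_energy:.1f} MWh")
print(f"Break-even run time ratio: {break_even_ratio:.3f}")
```

With these made-up run times the lower-power system uses more energy (44.4 MWh against 42.0 MWh). More generally, under the constant-power assumption, the upgraded system uses more energy whenever its run takes more than about 1.14 times as long as the old one.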

However, power is important because it is seen as one of the limiters for Exascale system deployment. The size of system that a centre can host is more likely to be limited by the total power available to run the system than by the cost of running the system (the energy requirement), although this is important too, of course.

So, on the face of it, it looks like the many-core processors are harder to get peak performance on, but much more power-efficient than standard multi-core processors. Indeed, the peak vs achieved performance issue is well known for the Intel Xeon Phi Knights Landing processor. The processors have very wide vector units, which can theoretically provide very high floating point performance, but not all application code maps well to these, or can fully exploit them.

System information

However, the story is not quite as simple as it may seem. One aspect of the Top500 list that is getting more problematic is how system information is recorded and displayed, and Trinity is a good example of this. Trinity is listed as having Intel Xeon Phi 7250 processors (1.4 GHz with 68 cores), with the system having 979,968 cores in total.

However, if you were to research Trinity in more detail, using sources outside the Top500 list, you would find that there are actually two different types of nodes in Trinity, with 9,984 nodes using the KNL (7250) processor, and 9,408 nodes using Intel Xeon E5-2698v3 processors (multi-core Haswell processors).
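A quick sanity check shows why the listed core count only makes sense for a mixed system. This sketch derives the KNL node count from the 19,392-node total quoted earlier, and assumes (this is my assumption, not something stated in the list) that each Haswell node is dual-socket with 16 cores per E5-2698v3 processor.

```python
TOTAL_NODES = 19_392            # total node count quoted for the upgraded system
HASWELL_NODES = 9_408           # Haswell node count from the text
LISTED_TOTAL_CORES = 979_968    # core count shown in the Top500 entry

KNL_CORES_PER_NODE = 68         # Xeon Phi 7250, one processor per node
HASWELL_CORES_PER_NODE = 2 * 16 # assumption: dual-socket, 16 cores per socket

# Whatever is not a Haswell node is taken to be a KNL node.
knl_nodes = TOTAL_NODES - HASWELL_NODES

total_cores = (knl_nodes * KNL_CORES_PER_NODE
               + HASWELL_NODES * HASWELL_CORES_PER_NODE)

# If the system really were all KNL, the listed cores would need to
# divide evenly into 68-core nodes.
knl_only_nodes = LISTED_TOTAL_CORES / KNL_CORES_PER_NODE

print(f"KNL nodes: {knl_nodes}")
print(f"Cores from the mixed configuration: {total_cores}")
print(f"Nodes implied by an all-KNL system: {knl_only_nodes:.2f}")
```

Under these assumptions the mixed configuration reproduces the listed 979,968 cores exactly, whereas an all-KNL system with that many cores would not correspond to a whole number of nodes.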

Another example of this is a new UK entry into the list, the Peta4 system at Cambridge, one of the new UK Tier-2 academic HPC systems. Using just the Top500 list details you would think that this was a large multi-core system built from Intel Skylake processors (Xeon Gold 6142), providing 1.6 PFlop/s Linpack performance. However, investigating further you would find it is part Skylake and part KNL, with two different types of nodes.

Whilst there is no suggestion that the Linpack benchmark was not run across the two different types of node, it would be better if the Top500 list provided information on the heterogeneous nature of the systems to make it easier to understand the hardware actually being used in the systems in the Top500.

Furthermore, it would be nice if the Linpack benchmark was only run on system configurations that users can actually exploit. If the plan is that users can never run a single job across different node types, is it fair for the combined node types to be used to provide an evaluation of the performance of the system using Linpack?

Country dominance

As mentioned at the beginning, there was a lot of talk when the list came out about it being a game-changing list. The big trend noted by many is the switch from US to Chinese dominance of the list. Since June, China has become the number one host of Top500 systems, both by number and by performance share.

Figure 1: Countries share of the Top500 list, graphs taken from the Top500 website

Figure 1 shows the share of systems in individual countries from the latest list (November 2017) and the preceding list. The graphs, taken from the Top500 website, show both numbers of systems in the list and performance share, and in both China has moved from second to first.

However, interestingly, whilst China has had around a 7% increase in the number of systems in the list, this has not been matched by a commensurate increase in performance share. China's performance share has increased by less than 2%, suggesting that this growth in Chinese systems is in smaller, less powerful, systems, rather than multi-petaFlop machines.

The Japanese, on the other hand, have seen the opposite trend, with a negligible increase (0.4%) in the number of systems, but a significantly larger increase in performance share (2.5%). This reflects the large new Japanese system in the Top 10.

To List or Not To List

Furthermore, country share is probably less important than you may at first assume. After all, there are no rules forcing machine owners/operators to submit their systems to the Top500 list. This means that a large number of commercial systems are often not included in the list at all.

There is also a cost to undertaking a Top500 benchmarking run, especially for a large-scale system. It requires a significant amount of compute time, as well as the patience and perseverance to configure and debug such a run. The Linpack benchmark is often run when commissioning a new system, as it happens to be a good way of shaking down a system, checking processors are working correctly and the system can cope with a high-power run. However, commercial system operators may not undertake such tests, or benchmarks.

Therefore, it could be that the rise of Chinese systems in the list is simply a reflection of a desire, or encouragement, to submit all their commercial (or at least non-academic) systems to the list, as well as their research systems, in a way that isn't happening in other countries. Of course, there is nothing untoward about this, and it also does not detract from the fact that the two biggest systems on the list are Chinese.

Other measures

One aspect that has gone widely unnoticed is the folding of other measures into the Top500 list. The main list is calculated using the Linpack benchmark, also known as HPL. However, there are other benchmarks that are used to measure system performance, including HPCG and Graph500.

Both these measures are also now part of the Top500 list and can be queried along with the Linpack benchmark results. Indeed, the main system that we operate at EPCC, ARCHER, is still the fastest system in the UK if you rank by HPCG results. This is more a reflection of the sparsity of HPCG results than of the performance of ARCHER, but it's still nice to be top in the UK!

Processors

One aspect of HPC, and systems in general, that was widely discussed at SC17 was new processor designs and manufacturers. There are multi-core processors due to be released from both AMD and Cavium (an ARM-design based processor), that look like they will be strong competitors to Intel processors over the next few years.

They are multi-core processors, including up to 32 cores per processor, with a large number of memory channels, and PCIe channels in AMD's case. They don't quite have the vector sizes of the current Intel processors, but look to match Intel processors in clock frequency and core densities, exceeding existing Intel processors in memory and PCIe bandwidth (although of course Intel has plans for future generations of processors that I'm sure will match these as well).

Most interestingly, from my perspective, they are likely to be significantly cheaper than Intel processors (unless Intel adjusts their pricing), meaning we might start to get some price competition in high-end processors, something we've not had for a good few years.

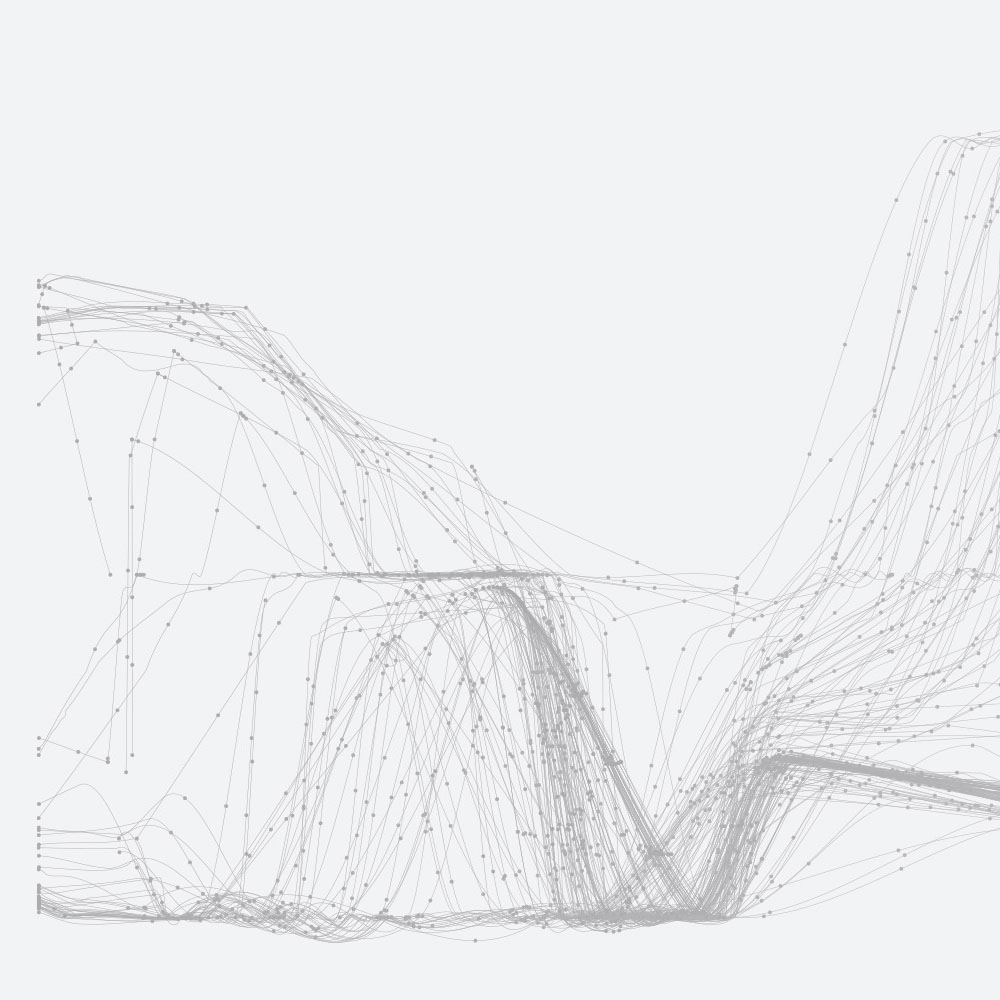

Figure 2: Share of Top500 list by processor generation (not including accelerators) (graphs from Top500 website)

Having said that, whilst there is a lot of buzz around AMD and ARM-based processors, Intel has never been more dominant. Figure 2 (again taken from the Top500 website) shows the breakdown of the current list by processor generation (note, this does not include accelerators like GPUs). It's apparent that nearly all the Top500 is based on Intel processors of one form or another.

Figure 2 also highlights the longevity of systems in the list, with five different generations of Intel processors present (from Sandy Bridge to Skylake, annoyingly called Xeon Gold in this graph), and even AMD Opteron processors still present (they've not been sold for a good few years now).

Figure 3: November 2012 Share of Top500 list by processor generation (graphs from Top500 website)

This dominance by Intel is a relatively new feature; we don't have to go back far in the lists to see a different story. Figure 3 presents the same measure from the November 2012 Top500 list, where we see a much more diverse processor landscape.

This means that even if ARM-based or AMD processors do come to be a sensible building block for constructing HPC systems, it's likely to take a while for the Top500 list to significantly change to reflect this.