Using FPGAs to model the atmosphere

The Met Office relies on some of the world’s most computationally intensive codes and works to very tight time constraints. It is important to explore and understand any technology that can potentially accelerate its codes, ultimately modelling the atmosphere and forecasting the weather more rapidly.

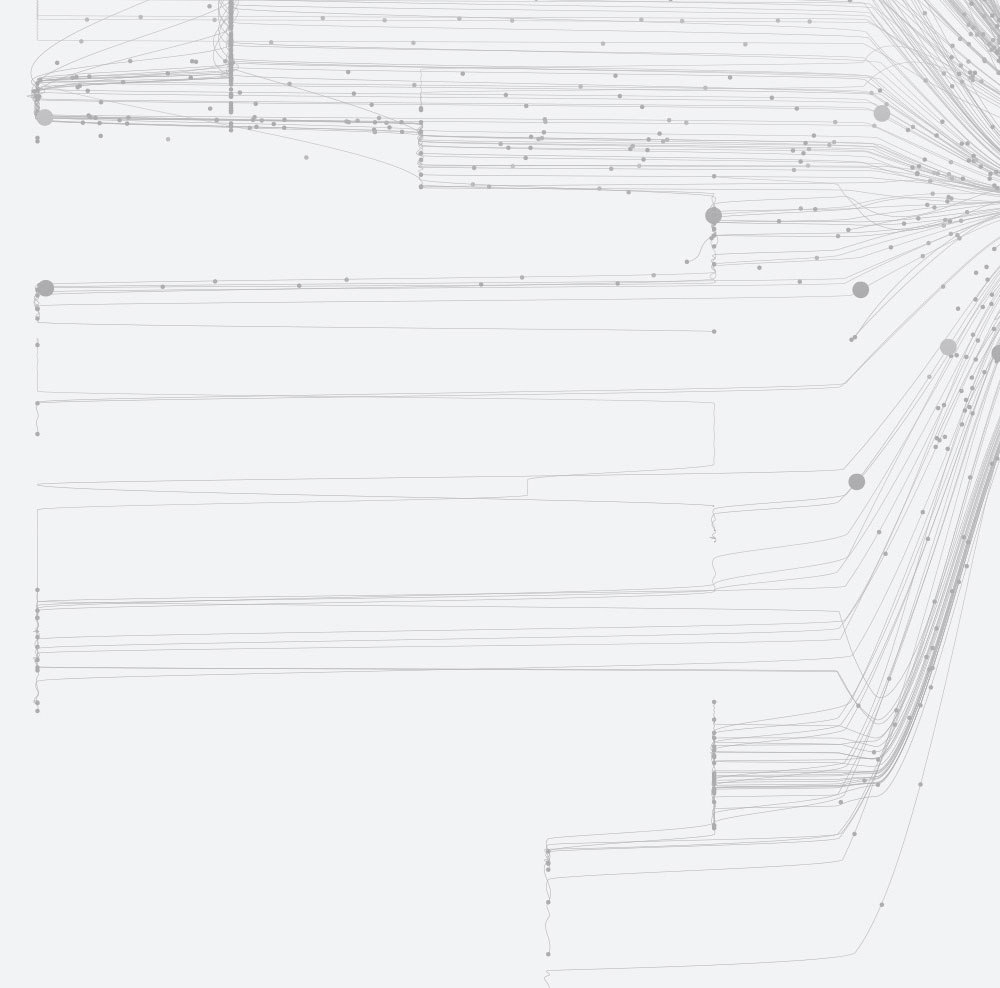

Field Programmable Gate Arrays (FPGAs) provide a large number of configurable logic blocks sitting within a sea of configurable interconnect. It has recently become easier for developers to map their algorithms onto these fundamental components and so execute their HPC codes in hardware rather than software. This has significant potential benefits for both performance and energy usage, but because FPGAs are so different from CPUs or GPUs, a key challenge is how we design our algorithms to leverage them.

To explore the potential role of FPGAs in accelerating HPC codes, we focused on the advection kernel of an atmospheric model called MONC, a code that we developed with the Met Office a few years ago. Advection, which is the movement of quantities through the air due to wind, is one of the most computationally intensive parts of the model. We decided to port the advection kernel to FPGAs using High Level Synthesis (HLS), an approach where the programmer writes C or C++ code and the tooling synthesises it to the Register Transfer Level (RTL), which can be thought of as the assembly code level of FPGAs. The synthesised kernels are then exported as IP blocks, which can be dropped into designs called shells and hooked up to other components such as memory.

Initially I made good progress, developing the HLS code and associated shell in just a few days. But I soon realised that when writing FPGA code it is necessary to entirely rethink the algorithm and rewrite it from a data-flow perspective for even adequate performance. This can be a real challenge from a code perspective because, while HLS accepts something syntactically similar to C or C++, to get good performance the programmer must embrace the fact that the semantics are entirely different.
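To give a flavour of what rewriting from a data-flow perspective means, here is a minimal sketch in plain C++. It is not the MONC advection scheme: the three-point stencil, the function names and the use of `std::queue` as a stand-in for a hardware stream are all assumptions made purely for illustration. The first function is written the way one would for a CPU, with random access into an array; the second consumes each input value exactly once from a stream and keeps the neighbouring values in local registers.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <vector>

// CPU-style: random access into the array, two loads per output value.
// The stencil c * (in[i+1] - in[i-1]) is a hypothetical example.
std::vector<double> stencil_cpu(const std::vector<double>& in, double c) {
    assert(in.size() >= 3);
    std::vector<double> out(in.size() - 2);
    for (std::size_t i = 1; i + 1 < in.size(); ++i) {
        out[i - 1] = c * (in[i + 1] - in[i - 1]);
    }
    return out;
}

// Data-flow style: every value is read from the input stream once and
// shifted through a small window held in local variables. On an FPGA the
// window would map to registers and the loop body would be a candidate
// for pipelining; here std::queue merely stands in for a hardware stream.
void stencil_dataflow(std::queue<double>& in, std::queue<double>& out, double c) {
    double left = 0.0;
    double centre = 0.0;
    std::size_t seen = 0;
    while (!in.empty()) {
        const double right = in.front();
        in.pop();
        ++seen;
        if (seen >= 3) {
            // The window is full: emit one result per value consumed.
            out.push(c * (right - left));
        }
        // Shift the window along by one element.
        left = centre;
        centre = right;
    }
}
```

Both functions produce the same values, but the second never revisits memory, which is the property that lets the hardware stream data continuously through the kernel.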

From redesigning how we load data into the kernel to how the computation is structured, the optimised FPGA code bears no resemblance to its CPU equivalent. However, the effort was rewarded: the optimised FPGA version runs over 800 times faster than our first attempt, which was based directly on the CPU code! More generally, for all but the largest systems we out-perform an 18-core Broadwell CPU at significantly lower energy.

One challenge we face is that, because the FPGA is mounted on a PCIe card, input data has to be transferred from the host to the on-board DRAM and the results copied back. For our largest problem this involved transferring over 12 GB of data, and even though we optimised it using an approach similar to CUDA streams, data transfer still accounts for around 40% of the runtime.
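The benefit of overlapping transfers with computation can be illustrated with a simple timing model. This is only a sketch under stated assumptions: the data is split into equal chunks, and the host-to-device copy, the kernel and the device-to-host copy can each work on a different chunk at the same time. The `ChunkCost` structure and both function names are hypothetical and are not taken from our code.

```cpp
#include <algorithm>
#include <cstddef>

// Hypothetical per-chunk costs, in arbitrary time units.
struct ChunkCost {
    double to_device;  // host -> on-board DRAM copy
    double compute;    // kernel execution
    double to_host;    // on-board DRAM -> host copy
};

// No overlap: every chunk is copied in, processed and copied back
// before the next chunk starts.
double serial_time(std::size_t n_chunks, ChunkCost c) {
    return static_cast<double>(n_chunks) * (c.to_device + c.compute + c.to_host);
}

// Overlapped: the three stages form a pipeline, so after the first chunk
// has passed through, one chunk completes every "slowest stage" interval.
double overlapped_time(std::size_t n_chunks, ChunkCost c) {
    if (n_chunks == 0) {
        return 0.0;
    }
    const double slowest = std::max({c.to_device, c.compute, c.to_host});
    return c.to_device + c.compute + c.to_host
         + static_cast<double>(n_chunks - 1) * slowest;
}
```

In this model, overlapping hides whichever stages are faster than the slowest one, but it can never reduce the total below the time the slowest stage needs for all chunks, which is consistent with transfer remaining a large share of the runtime.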

The investment in programming tools for FPGAs has been really positive and has made them a realistic future HPC technology. However, code cannot simply be copied from the CPU to the FPGA: problems must be completely rethought and algorithms recast in a data-flow style. But the potential performance and energy benefits are significant.

Image: xxmmxx/Getty Images