What is MPI “nonblocking” for? Correctness and performance

1 March 2019

The MPI Standard states that nonblocking communication operations can be used to “improve performance… by overlapping communication with computation”. This is an important performance optimisation in many parallel programs, especially when scaling up to large systems with lots of inter-process communication.

However, nonblocking operations can also help with making a code correct – without introducing additional dependencies that can degrade performance.

Example – shift around a ring

Let’s look at a simple example – communication around a ring of processes, where each process sends a message to its neighbour in one direction. At EPCC, we teach students to use blocking synchronous mode operations first because this helps ensure that the code is correct.

    MPI_Ssend(&sendBuffer, ..., rightProc, ...);
    MPI_Recv(&recvBuffer, ..., leftProc, ...);

Listing 1: first attempt at ring communication - erroneous because it will always deadlock.

The first attempt (shown in Listing 1) will not work because all processes are blocked in their synchronous-mode send function (that is, MPI_Ssend) waiting for another process to execute a matching receive function, which it will never reach.

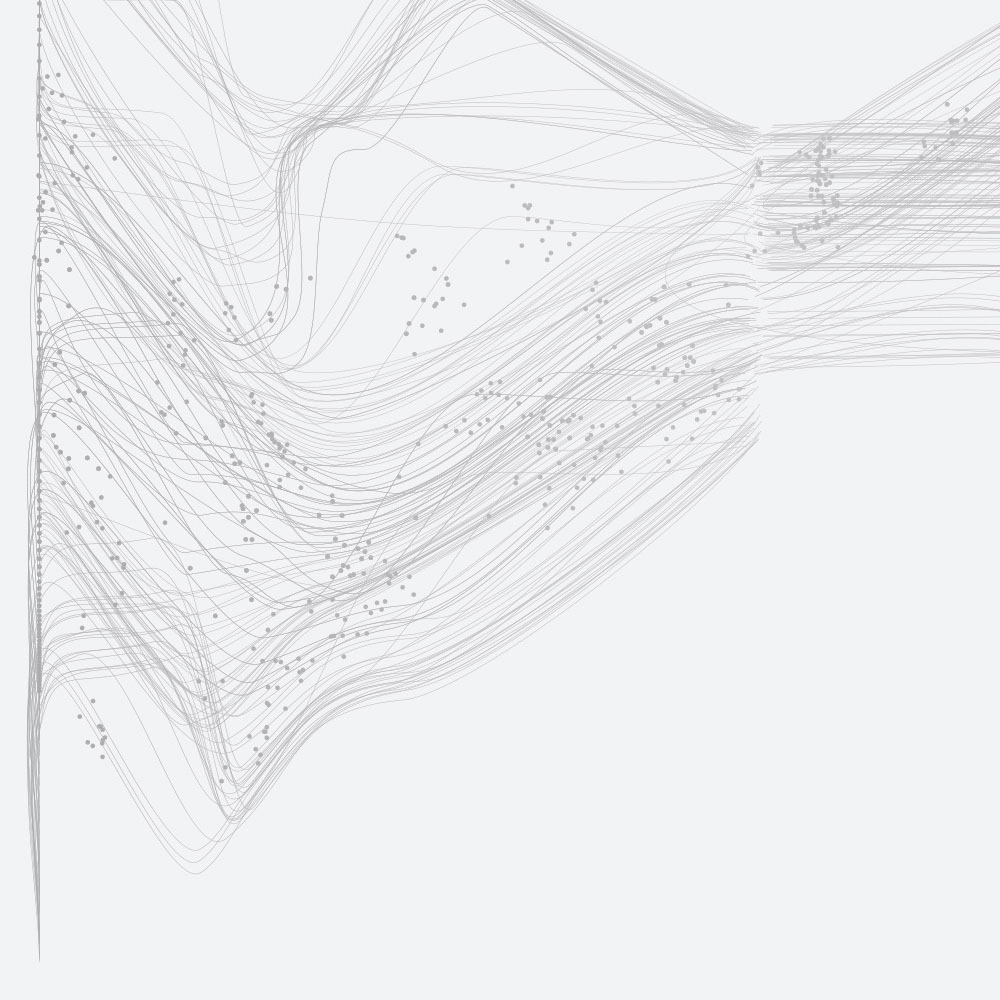

This deadlock happens because, in MPI, synchronous mode means that the sender and the receiver must synchronise before the message data is transferred. Whichever function is called first will block until the matching function is called by the other process. In our case, illustrated in Figure 1, every MPI process calls MPI_Ssend and so every MPI process blocks, waiting for a receive call that cannot happen because the process that should make it is itself blocked.
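The listings in this post use leftProc and rightProc without showing where they come from. As a minimal sketch, assuming the processes are ranks 0 to size-1 joined into a periodic ring, the neighbours could be computed like this. The helper names ring_left and ring_right are hypothetical and are not part of MPI.

```c
#include <assert.h>

/* Hypothetical helper: rank of the neighbour we send to (rightProc). */
int ring_right(int rank, int size)
{
    assert(size > 0 && rank >= 0 && rank < size);
    return (rank + 1) % size;   /* the last rank wraps round to rank 0 */
}

/* Hypothetical helper: rank of the neighbour we receive from (leftProc). */
int ring_left(int rank, int size)
{
    assert(size > 0 && rank >= 0 && rank < size);
    /* Add size before the modulo so rank 0 maps to size-1, not -1. */
    return (rank - 1 + size) % size;
}
```

With four processes, rank 3 sends to rank 0 and rank 0 receives from rank 3. In a real program, rank and size would come from MPI_Comm_rank and MPI_Comm_size.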

What is the best way to make this code correct?

MPI defines many different ways to do communication. Even within point-to-point communication (that is, without even considering collective or one-sided communication), we have a lot of things to try out.

What about different send modes?

Our first attempt uses the synchronous-mode send function, but MPI defines four send modes; we could try the other three.

    MPI_Send(&sendBuffer, ..., rightProc, ...);
    MPI_Recv(&recvBuffer, ..., leftProc, ...);

Listing 2: second attempt at ring communication - erroneous because it relies on internal buffering in MPI and will deadlock whenever that buffering is not available.

The second attempt (shown in Listing 2) will work sometimes, but it is still incorrect because it can deadlock.

The standard-mode send allows MPI to copy the message so that it can be sent later. The MPI library is allowed to allocate some memory for use as a buffer space – a temporary storage location for messages that cannot be delivered straightaway.

If copying the message into this buffer space is successful, then MPI can return control back to the program immediately and the program can then execute the receive function. By returning control from the MPI_Send function, MPI is promising that it will deliver the message eventually, even though it might not have actually sent any message data yet. In this case, the code would work.

However, if the copy into internal buffer space fails (for example, if the message data is too big to fit), then MPI will block execution in the MPI_Send function until a matching receive function is called, exactly like it would for MPI_Ssend. In this case, the program will not work: it will deadlock just like the first attempt. The MPI Standard calls a program like this unsafe, because it completes only if MPI provides enough buffering, so it should be treated as erroneous even when it happens to work.

The other two send modes are ready-mode (MPI_Rsend) and buffered-mode (MPI_Bsend). Ready-mode requires the additional guarantee that the matching receive has already been posted when the send function is called. This requires additional messages or synchronisation, which is off-topic for this discussion. Buffered-mode requires the program to provide enough buffer space to MPI so that MPI can always copy the message during the MPI_Bsend function and return control without actually sending any data yet. Using buffered-mode would make our simple example work, but it would force MPI to copy each message into buffer space, and that extra memory copy is not good for performance.

What about re-ordering the function calls?

If we discount ready-mode and buffered-mode, then the problem with calling a blocking send function first is that the program never gets a chance to call the matching receive function: it is stuck in the send function, waiting for that receive function to be called.

Calling the blocking receive function first (that is, calling MPI_Recv before the send operation) doesn’t help because then all processes are blocked in the receive function waiting for another process to execute a matching send function, which it can never reach.

Whilst re-ordering the send and receive for all processes does not work, this deadlock can be broken by selectively re-ordering the send and receive calls (shown in Listing 3).

    if (isOdd(myRank)) {
        MPI_Recv(&recvBuffer, ..., leftProc, ...);
        MPI_Send(&sendBuffer, ..., rightProc, ...);
    } else {
        MPI_Send(&sendBuffer, ..., rightProc, ...);
        MPI_Recv(&recvBuffer, ..., leftProc, ...);
    }

Listing 3: third attempt at ring communication – correct, but introduces a new dependency.

Here, even-numbered processes send first (to an odd-numbered process) and then receive, whereas odd-numbered processes receive first (from an even-numbered process) and then send. This is sometimes called ‘red-black’ ordering, possibly in reference to the colouring of some checkerboards or in reference to red-black tree structures.

We now have a correct code, but we have also introduced a new dependency – the communication is done in two phases: evens-to-odds, then odds-to-evens. For our simple 1D ring example, this probably won’t have a big impact on performance. However, for more complex examples, like a 3D exchange pattern, it will have a much larger negative impact.

How does nonblocking help?

This is where nonblocking communication comes into the picture. Sticking with our simple example, we could replace the blocking receive with a nonblocking receive and a completion function call (shown in Listing 4).

    MPI_Irecv(&recvBuffer, ..., leftProc, ..., &recvRequest);
    MPI_Ssend(&sendBuffer, ..., rightProc, ...);
    MPI_Wait(&recvRequest, MPI_STATUS_IGNORE);

Listing 4: fourth attempt at ring communication – correct, and avoids a new dependency.

In this version of our program, each process initiates a receive operation and then, without waiting for it to complete, it goes on and does a send operation. Finally, each process waits for the receive operation to complete.

The completion function (MPI_Wait in this case) is essential because it lets the program know when it is safe to use the buffer given to the nonblocking function. If the completion function call is omitted, then MPI could still be writing the incoming message data into memory when the program tries to read it. The program might get all old values, a mixture of old and new values, or some garbage values. Also, MPI uses up some resources for each nonblocking request and it must be given a chance to release those resources so they can be used again in future. Without the completion function call, each nonblocking request leaks those resources.

The nonblocking function allows all processes to initiate a receive operation – which will eventually match a send from another process (that is, from leftProc) – and then go on to issue a send that will eventually match a receive operation initiated by a third process (that is, by rightProc). In this version, illustrated in Figure 2, there are no longer any phases of communication because we are no longer introducing a dependency between the incoming and outgoing messages.

Using nonblocking communication allows us to avoid a deadlock without damaging performance by introducing a memory copy (like in the buffered-mode version) or introducing a dependency (like in the re-ordering version).

Is it really that simple?

Well, no – there are many more points to consider.

We could make the send operation nonblocking instead of the receive operation (as shown in Listing 5). Choosing between these alternatives is a much more advanced topic because it needs a deep understanding of how your particular MPI library has been implemented and what hardware support is available on your particular system.

    MPI_Isend(&sendBuffer, ..., rightProc, ..., &sendRequest);
    MPI_Recv(&recvBuffer, ..., leftProc, ...);
    MPI_Wait(&sendRequest, MPI_STATUS_IGNORE);

Listing 5: alternative fourth attempt at ring communication – correct, and avoids a new dependency.

None of the versions of our simple example in this blog post permit any overlap of communication with computation. For that we need to make all of the communication functions nonblocking. In a more complex code, where each process communicates with lots of others, nonblocking communication is an easy way to write a correct code, but we again need a deeper understanding of MPI to achieve the best possible performance. We have also not looked at the MPI_Sendrecv function or at any of the collective communication functions that could be used to achieve the same communication pattern.

Perhaps I will cover these advanced topics in future blog posts?