News

Get the edge with EIDF: subscribe, follow, and stay ahead!

Stay updated on everything related to the Edinburgh International Data Facility (EIDF) and our private research cloud infrastructure by subscribing to our newsletter!

Working towards frictionless, UK-wide data-driven research and innovation

EPCC leads the Connect4 project, which is proposing changes to UK national Trusted Research Environments that will work towards federated access to cross-sector sensitive data.

Representing HPC at the RISC-V North America Summit

RISC-V is an open Instruction Set Architecture (ISA) standard.

New Mary Somerville image adorns astronomy research cloud

EPCC's Somerville research cloud has received a new look.

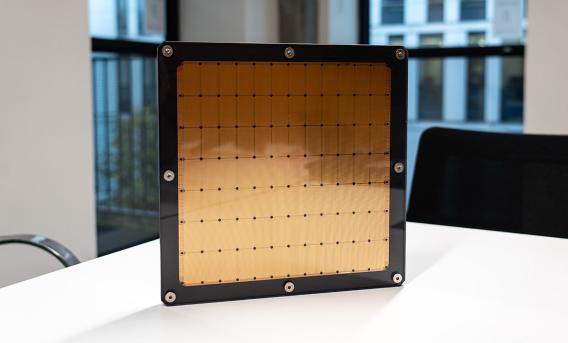

Chip and software breakthrough makes AI ten times faster

Drawing on the Cerebras CS-3 service operated by EPCC, a system has been developed that enables large language models (LLMs) to process information up to ten times faster than current AI systems.

High-Performance Computing Center Stuttgart and EPCC agree to strengthen collaboration

The partnership will build on a long history of cooperation between Stuttgart and Edinburgh to focus on supercomputing, modelling and simulation, artificial intelligence, and emerging computing technologies.

SC25 session: Building sustainable HPC outreach

As Eleanor Broadway explains, outreach is essential for growing and diversifying the HPC community by communicating the importance of our work to broader audiences.

Breaking I/O bottlenecks in scientific workflows: a new EPCC–University of Turin collaboration

Our new paper, “Overcoming Dynamic I/O Boundaries: a Double-Sided Streaming Methodology with dispel4py and CAPIO”, will be presented at WORKS 2025, part of the SC25 Workshops series.

A busy time at SC25!

Supercomputing (SC), the HPC community's largest conference, is held annually over the course of a week in a major US city; this year it is in St. Louis.

Three cinematic EPCC visualisations at SC25

Three new scientific visualisations by EPCC will be presented at the "Art of HPC" sessions at SC25.