Precision persistent programming

Targeted Performance

Blog post updated 8th November 2019 to add Figure 6 highlighting PMDK vs fsdax performance for a range of node counts.

Following on from the recent blog post on our initial performance experiences using byte-addressable persistent memory (B-APM), in the form of Intel's Optane DCPMM memory modules, for data storage and access within compute nodes, we have been exploring the performance and programming of such memory beyond simple filesystem functionality.

For our previous performance results we used an fsdax (Filesystem Direct Access) filesystem, which enables bypassing the operating system (O/S) page cache and the associated extra memory copies for I/O operations. We were using an ext4 filesystem on fsdax, although ext2 and xfs filesystems are also supported.

For fsdax to be implemented efficiently it requires memory-like storage, such as B-APM. However, fsdax does not bypass the block/page data operations associated with I/O through the operating system, which means that I/O operations on small amounts of data can still be expensive, as most filesystems currently use 4096-byte blocks or larger as their smallest unit of operation. To truly read and write data at the byte level (or at least the cache-line level) another programming approach is required. Intel, with SNIA and others, has developed the PMDK library and suite of tools to provide this approach.
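To make the block-size argument concrete, the following C sketch models how many bytes must be handled to service a single update when the smallest unit of operation is a 4096-byte filesystem block, compared with a 64-byte cache line. The 64-byte line size is an assumption (typical of current x86 processors), bytes_touched is a hypothetical helper, and the model ignores metadata and other filesystem overheads.

```c
#include <assert.h>
#include <stddef.h>

#define FS_BLOCK_SIZE   4096u  /* smallest filesystem unit, as discussed above */
#define CACHE_LINE_SIZE 64u    /* assumed cache-line size (typical on x86)    */

/* Hypothetical helper: total size of all units of `unit` bytes that overlap
 * the byte range [offset, offset + len). Requires len > 0 and unit > 0. */
static size_t bytes_touched(size_t offset, size_t len, size_t unit)
{
    assert(len > 0 && unit > 0);
    size_t first = offset / unit;              /* index of first unit touched */
    size_t last  = (offset + len - 1) / unit;  /* index of last unit touched  */
    return (last - first + 1) * unit;
}
```

Under this model a 128-byte update at offset 0 involves a full 4096-byte block, 32 times the data actually changed, but only two cache lines (128 bytes); the same update starting at byte 4000 straddles a block boundary and involves two blocks (8192 bytes).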

PMDK is based on the memory-mapped file concept, long supported in Linux and other operating systems, which allows a file on a storage device to be mapped into a system's memory space and then accessed as memory. As Optane DCPMM is just another type of memory, managed and accessed through the same memory controller as DRAM, this approach allows variables and arrays to be allocated directly on the B-APM and then accessed by an application in the same way as any other dynamic memory.
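Because PMDK builds on the memory-mapped file concept, the underlying mechanism can be sketched with plain POSIX calls. The following is not PMDK code: it uses open, ftruncate and mmap to map a file and treat it as an array of doubles, which is broadly what pmem_map_file sets up for you. map_doubles is a hypothetical name, and on a filesystem without DAX the stores go through the page cache rather than directly to B-APM.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: map a file holding n doubles (creating it or setting
 * its length as needed) and return a pointer usable like any other array.
 * Returns NULL on failure; the caller must munmap(ptr, n * sizeof(double)). */
static double *map_doubles(const char *path, size_t n)
{
    assert(n > 0);  /* mmap() rejects zero-length mappings */
    size_t len = n * sizeof(double);
    int fd = open(path, O_RDWR | O_CREAT, 0666);
    if (fd < 0)
        return NULL;
    /* Set the file length so every mapped byte is backed by the file. */
    if (ftruncate(fd, (off_t)len) != 0) {
        close(fd);
        return NULL;
    }
    /* MAP_SHARED: stores through the pointer update the file itself. */
    void *addr = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);  /* the mapping remains valid after the descriptor is closed */
    return addr == MAP_FAILED ? NULL : (double *)addr;
}
```

Data stored through the returned pointer is visible to a later mapping of the same file, but that alone does not guarantee it has reached the persistent media.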

The low-level programming approach is reasonably straightforward, requiring a memory-mapped file to be opened with a call such as this:

```c
/* From libpmem (#include <libpmem.h>); returns NULL on failure */
pmemaddr = pmem_map_file(path, array_length, PMEM_FILE_CREATE|PMEM_FILE_EXCL,
                         0666, &mapped_len, &is_pmem);
```

The memory allocated with such a call can then be used like so:

```c
double *a, *b, *c;
/* Three consecutive arrays of array_size doubles in the mapped region */
a = (double *)pmemaddr;
b = a + array_size;
c = b + array_size;
for (j = 0; j < array_size; j++) {
    a[j] = b[j] + c[j] * 5.6;
}
```
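The three arrays above are laid out back to back in a single mapping, so the mapping requested from pmem_map_file must be at least 3 * array_size * sizeof(double) bytes. A hedged sketch of a helper that carves k consecutive arrays out of one region and checks the size first; carve_arrays is a hypothetical name, and in the test an ordinary array stands in for the persistent mapping.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: split a region of region_len bytes into k consecutive
 * arrays of n doubles each, writing their start pointers to out[0..k-1].
 * Returns 0 on success, or -1 if the region is too small for all k arrays. */
static int carve_arrays(void *region, size_t region_len, size_t n, size_t k,
                        double **out)
{
    assert(region != NULL && out != NULL);
    if (n != 0 && k > region_len / (n * sizeof(double)))
        return -1;
    double *base = (double *)region;
    for (size_t i = 0; i < k; i++)
        out[i] = base + i * n;  /* arithmetic in units of double, not bytes */
    return 0;
}
```

Doing the pointer arithmetic on a double pointer, rather than adding byte offsets to the untyped address returned by the mapping call, avoids relying on compiler extensions for arithmetic on void pointers.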

The above code is sufficient to get arrays or variables allocated in B-APM, but it does not ensure that data has been persisted to the hardware, i.e. there is no guarantee yet that the data has been stored safely on the Optane DCPMM DIMM. As the B-APM sits within the memory system, data allocated on it could still be held in the caches of any of the CPUs reading or writing it. To ensure the data has actually made it back to the persistent media, and will therefore survive a power-off event, the following function (or a similar one) is required:

```c
pmem_persist(a, array_size*sizeof(double));
```
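When pmem_map_file reports via is_pmem that the mapping is not on persistent memory, the PMDK documentation recommends pmem_msync instead of pmem_persist; the plain POSIX counterpart is msync. msync operates on whole pages and requires a page-aligned start address, so flushing an arbitrary range means rounding down first. The sketch below shows that POSIX fallback only; it is not how pmem_persist works on real B-APM (which flushes individual cache lines from user space), and persist_range and store_and_persist are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: force [addr, addr+len) of a MAP_SHARED file mapping
 * back to storage. msync() requires a page-aligned start address, so round
 * down first; every page overlapping the range is written back. */
static int persist_range(void *addr, size_t len)
{
    uintptr_t page  = (uintptr_t)sysconf(_SC_PAGESIZE);
    uintptr_t start = (uintptr_t)addr & ~(page - 1);
    return msync((void *)start, len + ((uintptr_t)addr - start), MS_SYNC);
}

/* Hypothetical example: store one byte at offset off in a file-backed mapping
 * of file_len bytes and persist just that range. Returns 0 on success. */
static int store_and_persist(const char *path, size_t file_len, size_t off, char v)
{
    assert(off < file_len);
    int fd = open(path, O_RDWR | O_CREAT, 0666);
    if (fd < 0)
        return -1;
    if (ftruncate(fd, (off_t)file_len) != 0) {
        close(fd);
        return -1;
    }
    char *p = mmap(NULL, file_len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);                 /* the mapping outlives the descriptor */
    if (p == MAP_FAILED)
        return -1;
    p[off] = v;                /* an ordinary store into the mapping  */
    int rc = persist_range(p + off, 1);
    munmap(p, file_len);
    return rc;
}
```

Even a one-byte update causes at least one whole page to be written back on this path, which is the page-granularity cost that cache-line flushing avoids.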

The pmem_persist function ensures that, by the time it returns, the data specified by its arguments (a pointer and a number of bytes) is resident on the B-APM media. It effectively flushes the cache lines holding that data and then issues a memory fence, so that any updates held in the CPU caches have made it back to the Optane DIMMs. As such it can be a costly operation, so the key to good performance with this low-level PMDK functionality is to use persist operations only when necessary, and at the appropriate granularity (i.e. persisting individual bytes of data when the algorithm only requires larger persistent epochs will not be performant).
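The cost of persisting at too fine a granularity can be illustrated by counting operations under a simplified model, which is an assumption rather than a description of PMDK internals: each persist flushes every 64-byte cache line its range covers and then issues one fence. persist_once and persist_array are hypothetical helpers.

```c
#include <assert.h>
#include <stddef.h>

#define CACHE_LINE 64u  /* assumed cache-line size in bytes */

struct persist_cost {
    size_t flushes;  /* cache-line flushes issued */
    size_t fences;   /* ordering fences issued    */
};

/* Hypothetical model of one persist call covering [off, off+len), len > 0. */
static struct persist_cost persist_once(size_t off, size_t len)
{
    assert(len > 0);
    struct persist_cost c;
    c.flushes = (off + len - 1) / CACHE_LINE - off / CACHE_LINE + 1;
    c.fences  = 1;
    return c;
}

/* Hypothetical model: persist n elements of elem bytes, starting at a
 * line-aligned offset, either one element at a time or as a single batch. */
static struct persist_cost persist_array(size_t n, size_t elem, int per_element)
{
    if (!per_element)
        return persist_once(0, n * elem);
    struct persist_cost total = {0, 0};
    for (size_t i = 0; i < n; i++) {
        struct persist_cost c = persist_once(i * elem, elem);
        total.flushes += c.flushes;
        total.fences  += c.fences;
    }
    return total;
}
```

For 1024 doubles, persisting element by element costs 1024 flushes and 1024 fences under this model, while a single persist of the whole array costs 128 flushes and one fence.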

Figure 1: IOR PMDK vs fsdax performance – 16 MB transfer size.

PMDK also has a wide range of higher-level functionality – from memory pools to key-value stores – that deals with the complexities of ensuring memory is persisted correctly and data can be recovered cleanly after power-off events. For many applications and developers, therefore, going straight to the low-level pmem_ functions is probably unwise. However, for those who want to access persistent memory directly from their applications, and to control which arrays are stored where without completely re-engineering the application around a different data approach (such as a key-value store), the low-level functions are desirable.

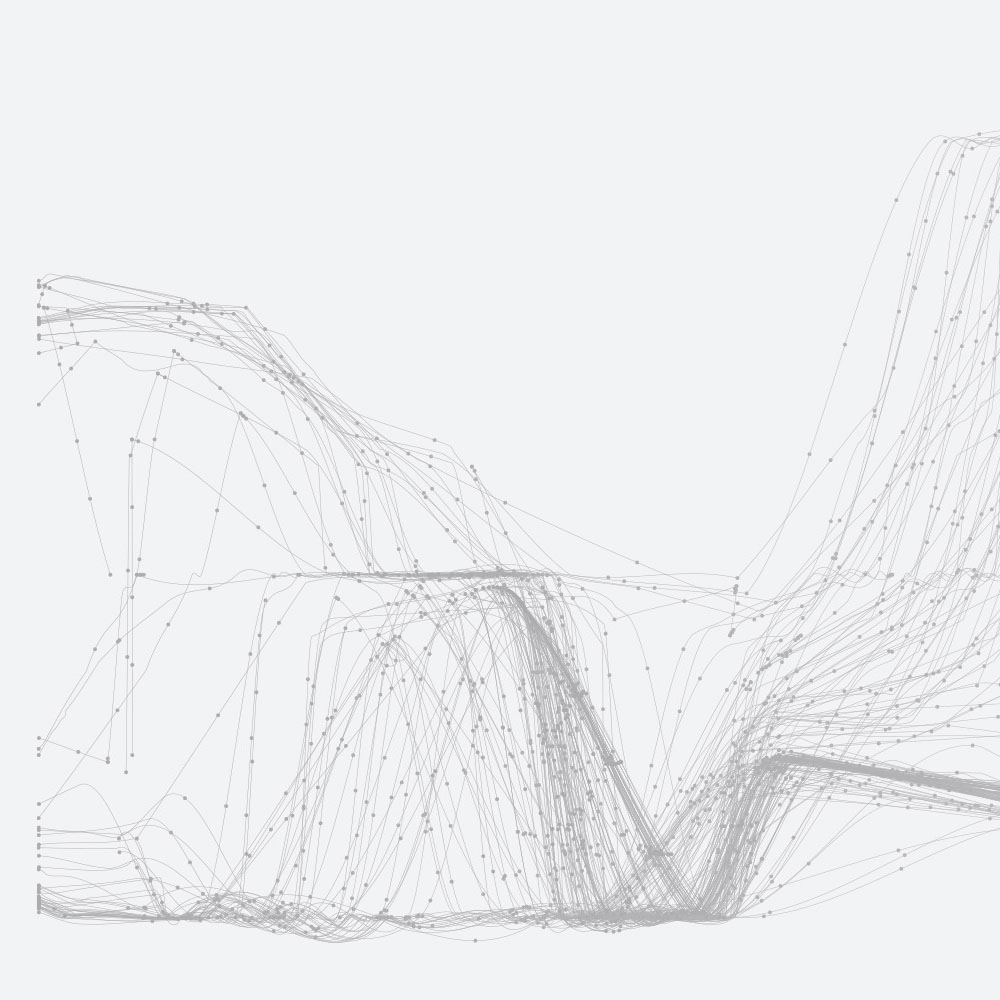

The PMDK approach bypasses both the O/S page cache and the block operations that traditional file-based I/O requires, meaning that individual pieces of data can be read or written with operations that only affect the cache line the data is mapped to. This should enable much quicker I/O than the file-based approach we used when benchmarking fsdax. However, when we extended the IOR benchmark to include a target implemented with PMDK, using pmem library operations to access and update data stored on the B-APM, we did not observe improved performance. Figure 1 shows that PMDK performance is almost identical to fsdax performance (using 48 processes per node; as discussed in the previous blog post, write performance can be improved by using fewer processes per node, at the expense of read performance).

Figure 2: Single node fsdax read performance using varying transfer sizes.

This was a little surprising to us, given the overheads associated with block I/O discussed above. On closer inspection, however, we realised we were using a 16 MB transfer size in the IOR configuration for these benchmarks, meaning that individual I/O operations were being performed in 16 MB chunks. Given that the main performance and functionality benefits of B-APM such as Optane, and of PMDK over fsdax, lie in accessing and updating small amounts of data efficiently, forcing IOR to do its I/O in such large chunks isn't sensible.

Therefore, we re-benchmarked fsdax and PMDK using a range of smaller transfer sizes, from 128 bytes up to 16 MB, to see what performance differences could be observed between traditional block-based I/O and PMDK I/O for different sizes of I/O operation. We expected PMDK to provide better performance than the standard fsdax approach at smaller transfer sizes. Figures 2 and 3 show the read and write performance of an fsdax filesystem with block I/O on a single compute node for varying transfer sizes. Figures 4 and 5 show the same measurements using PMDK functionality rather than block I/O.
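The number of separate I/O operations needed to move a fixed volume of data scales inversely with the transfer size, which is why any per-operation overhead dominates at the small end of such a sweep. A worked sketch, treating 16 MB as 16 MiB (2^24 bytes) and assuming, purely for illustration, 1 GiB of data per process; transfers_needed is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: number of transfers of transfer_size bytes needed to
 * move total bytes, rounding up so a partial final transfer is counted. */
static uint64_t transfers_needed(uint64_t total, uint64_t transfer_size)
{
    assert(transfer_size > 0);
    return (total + transfer_size - 1) / transfer_size;
}
```

At 128 bytes this gives 8,388,608 operations against 64 at 16 MiB, a factor of 131,072.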

Figure 3: Single node fsdax write performance using varying transfer sizes.

Comparing Figures 2 and 4, or Figures 3 and 5, shows that the performance of Optane DCPMM is indeed much better at low transfer sizes (i.e. small I/O operations) when using the PMDK programming approach than with standard file I/O. With the IOR and system configuration we were benchmarking, fsdax needs transfer sizes of 64 KB and above to reach maximum read or write performance (i.e. performance similar to that observed in our initial benchmarks and shown in Figure 1).

PMDK, by contrast, achieves its best performance at transfer sizes below 64 KB, with a reduction observed at larger transfer sizes. Why performance is somewhat lower at larger transfer sizes isn't yet clear; it could be due to contention on the memory bus or to saturation of the on-board buffers in each Optane DCPMM DIMM. Further benchmarking and profiling will be required to get to the bottom of these performance differences.

Figure 4: Single node PMDK read performance using varying transfer sizes.

The exciting possibility these benchmarks present, though, is that small I/O operations with PMDK and B-APM really are as performant as (or even more performant than) large I/O operations. The received wisdom for I/O performance, particularly parallel I/O performance, is that large streaming read or write operations are required to get reasonable performance. With this new hardware, and new programming approaches, it's possible to get outstanding performance for small I/O operations as well.

Indeed, comparing fsdax and PMDK on the same high-performance Optane DCPMM hardware, we can get over 200x faster reads and over 80x faster writes using PMDK compared to POSIX-based file I/O (comparing IOR results at a 128-byte transfer size).

Figure 5: Single node PMDK write performance using varying transfer sizes.

Whilst the absolute performance numbers aren't actually important (it's possible the IOR benchmark and the fsdax filesystem could be optimised by tweaking parameters in the IOR configuration or the filesystem/O/S setup), the comparison does highlight the functional difference between PMDK and standard file I/O, and opens up new possibilities for data access and storage.

Applications need to be re-written to utilise PMDK, but if that work is undertaken there's scope to operate on much smaller resident data sets (data in volatile memory such as DRAM or on-processor high bandwidth memory), and swap data in and out of volatile memory from B-APM with high efficiency. Blocking algorithms that take sub-sets of a full data domain and work on them could enable applications to both achieve high performance and reduce the number of compute nodes they require since smaller amounts of volatile memory are required. Reducing the number of compute nodes in turn can improve performance for collective MPI communications.
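A minimal sketch of the blocking pattern, assuming a large array that lives in B-APM (an ordinary array stands in for it here) and a much smaller DRAM working buffer: each block is copied into DRAM, updated there, and copied back. scale_blocked and the scaling update are hypothetical; with real persistent memory each block written back would also need to be persisted, for example with pmem_persist, before it could be relied on after a power failure.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical example: scale every element of big[0..n) by factor, touching
 * it only through a small volatile buffer buf of `block` elements.
 * big[] stands in for B-APM-resident data; buf[] for DRAM. */
static void scale_blocked(double *big, size_t n, double *buf, size_t block,
                          double factor)
{
    assert(block > 0);
    for (size_t start = 0; start < n; start += block) {
        size_t len = (n - start < block) ? n - start : block;
        memcpy(buf, big + start, len * sizeof(double));  /* B-APM -> DRAM */
        for (size_t i = 0; i < len; i++)
            buf[i] *= factor;                             /* work in DRAM  */
        memcpy(big + start, buf, len * sizeof(double));   /* DRAM -> B-APM */
    }
}
```

Only `block` elements need to be resident in volatile memory at any time, regardless of the size of the full data set.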

Figure 6: IOR PMDK vs fsdax performance – 256 bytes transfer size.

Alternatively, applications that are amenable to it could be restructured to keep large read-only data sets in B-APM and data to be updated in volatile memory, providing high-performance reads and writes for very large data volumes (think DNA-sequencing databases) without requiring the data to be accessed in large sequential patterns.
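As a toy illustration of that split, the sketch below treats a nucleotide sequence as a large read-only data set (held through a const pointer, as it might be when mapped from B-APM) and keeps the only mutable state, per-base counts, in a small array that would live in DRAM. The example, base_index and count_bases are all hypothetical.

```c
#include <assert.h>
#include <stddef.h>

enum { BASE_A, BASE_C, BASE_G, BASE_T, BASE_OTHER, BASE_COUNT };

/* Hypothetical helper: map a character to its counter index. */
static int base_index(char ch)
{
    switch (ch) {
    case 'A': return BASE_A;
    case 'C': return BASE_C;
    case 'G': return BASE_G;
    case 'T': return BASE_T;
    default:  return BASE_OTHER;
    }
}

/* Hypothetical example: read-only scan of seq[0..n) (e.g. mapped from B-APM);
 * all writes go to the small volatile counts array (e.g. in DRAM). */
static void count_bases(const char *seq, size_t n, size_t counts[BASE_COUNT])
{
    assert(seq != NULL || n == 0);
    for (int b = 0; b < BASE_COUNT; b++)
        counts[b] = 0;
    for (size_t i = 0; i < n; i++)
        counts[base_index(seq[i])]++;
}
```

Because the large data set is never written, it needs no persist operations at all; only the small volatile results would need saving if they were to be kept.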

Indeed, there are probably many more data access and algorithmic patterns that could be adapted to a two-level memory hierarchy to remove file I/O and more efficiently structure data accesses, costs, and capacity. Something to investigate in the coming years. For now, though, if you're interested in learning more about programming with PMDK, or playing with Optane DCPMM hardware, do check out our half-day tutorial on Practical Persistent Memory Programming at Supercomputing 2019 in Denver in November.

More to come soon on NUMA effects with dual-socket systems and B-APM, and on application performance experiences. Keep an eye out for future blog posts.

Source code for the IOR used in these benchmarks is at https://github.com/adrianjhpc/ior/, although the aim is to get this integrated back into the main IOR repository if possible. All benchmarking was undertaken on the NEXTGenIO prototype system. We welcome feedback on this blog post, and if you're interested in using our prototype system with Intel Optane DCPMM, do get in touch. Contact details are below.