The risk of unintended consequences

16 December 2022

By Adrian Jackson and Andrew Turner.

EPCC is involved in a number of projects looking at reducing the impact of computing infrastructure on the climate. At the moment a number of pilot projects are running under the umbrella of the CEDA Net Zero Digital Research Infrastructure project (https://www.ceda.ac.uk/projects/ukri-net-zero-dri/), looking at energy and power monitoring, user behaviour, whole infrastructure carbon impacts, and other issues.

These projects are really just the start of gathering and applying data to evaluate several questions: how much impact computing infrastructure has on the climate, especially compared to other activities researchers and users undertake; whether policy and behaviour change could lead to more efficient computing provision and usage; and how to move computing infrastructures towards limited or no impact on climate change.

Whilst this is both necessary and laudable, it also needs to be placed in the context of research where the output of computing, or of wider digital research infrastructures (DRI), has the potential to greatly reduce climate impacts by helping to discover, develop, and invent new technologies and approaches that are greener or that can address climate change.

For example, without advances from research, the modern transistors currently used for computing would not have replaced much older, less efficient, and therefore more climate-damaging technologies. Likewise, the car engines we use today are considerably more efficient than those used a hundred years ago, thanks to research over time into improving how they operate.

Therefore, whilst from one viewpoint the most carbon-efficient computer is one that is never built or operated, we should bear in mind that an efficiently operated computer can, over time, bring significant benefits to climate change research and energy efficiency.

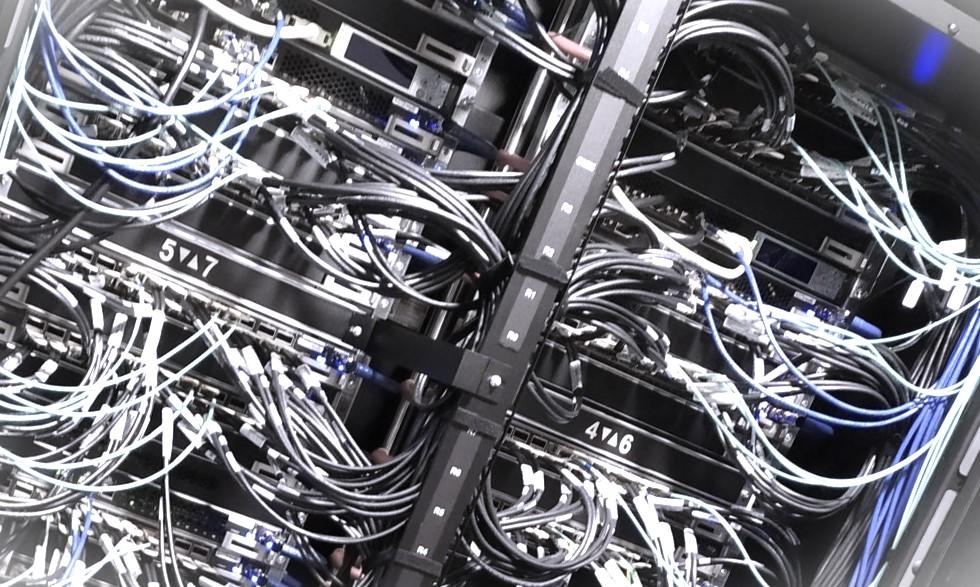

As part of the UKRI DRI Net Zero Scoping project, EPCC has been looking at different aspects of the ARCHER2 service, both the emissions associated with the service and its energy use. One topic we have been looking at recently is the energy efficiency and consumption of compute nodes when running computational simulations. On ARCHER2 we can query the energy used by a job through the batch system (Slurm) and control the frequency at which processors run when submitting a job.
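As an illustration of querying job energy through Slurm, here is a minimal Python sketch. It assumes Slurm energy accounting is enabled and that `sacct` is on the `PATH`; it requests the `JobID`, `Elapsed` and `ConsumedEnergy` fields (the last reported in joules), and `--noconvert` should stop sacct from rescaling values into units such as `K` or `M`. The helper names `parse_sacct` and `job_energy` are hypothetical, not part of any ARCHER2 tooling. The frequency itself is chosen at submission time with Slurm's `--cpu-freq` option, which accepts a value in kHz (for example `--cpu-freq=2000000` for 2.0 GHz).

```python
import subprocess
from typing import List, Optional, Tuple

# One row per job or job step: (JobID, Elapsed, ConsumedEnergy in joules, or None).
Row = Tuple[str, str, Optional[float]]


def parse_sacct(text: str) -> List[Row]:
    """Parse `sacct --parsable2 --noheader` output for JobID,Elapsed,ConsumedEnergy."""
    rows: List[Row] = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        job_id, elapsed, energy = line.split("|")
        # ConsumedEnergy may be empty if no energy data was recorded for a step.
        rows.append((job_id, elapsed, float(energy) if energy else None))
    return rows


def job_energy(job_id: str) -> List[Row]:
    """Query Slurm accounting for a single job (hypothetical helper)."""
    result = subprocess.run(
        ["sacct", "-j", job_id,
         "--format=JobID,Elapsed,ConsumedEnergy",
         "--parsable2", "--noheader", "--noconvert"],
        capture_output=True, text=True, check=True,
    )
    return parse_sacct(result.stdout)
```

Running the same job at two `--cpu-freq` settings and comparing the `Elapsed` and `ConsumedEnergy` values from `job_energy` is one way to reproduce the kind of comparison described below; dividing joules by 3.6 × 10⁶ converts them to kWh.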

This work has demonstrated that, for a wide range of applications and use cases, running nodes at processor frequencies below the maximum possible (which is the default on the system) can improve the energy efficiency of jobs by more than it slows them down.

Quoting from the work, “The default CPU frequency is currently unset on the service and so compute nodes are free to select the highest value possible within the constraints of the power configuration for the nodes - typically the base frequency of the processor: 2.25 GHz. The AMD 7742 processors on ARCHER2 support three choices of frequency: 2.25, 2.0 and 1.5 GHz. Recent benchmarking work has indicated that reducing the CPU frequency to 2.0 GHz typically has a minimal effect on application performance (1-5% reduction in performance) but can lead to larger savings in energy consumption (10-20% reduction in energy consumption). This result is consistent with other similar findings at other HPC centres. Reducing the frequency further to 1.5 GHz has a larger impact on performance and often actually increases the total energy consumption.”

Therefore, ARCHER2 is moving to a lower default frequency of 2.0 GHz for most jobs. This change aims to reduce the overall power draw and energy use of the service which, in turn, should make ARCHER2 friendlier to the UK National Grid at a time when energy shortages are a potential issue, reduce the cost of electricity for the service, and reduce cooling overheads.

You may think that reducing the energy demands of the service (the primary aim of this part of the work) would directly reduce the emissions of the service (the overall goal of Net Zero). However, this relationship is not as straightforward as it might appear at first glance. Firstly, ARCHER2's electricity is supplied under a 100% certified renewable energy contract, so, at some level, there are zero carbon emissions associated with the electricity used by the service, and any change we make to energy use has no impact on emissions.

There are also a couple of other reasons why this type of change will not reduce emissions in a straightforward way, even if the energy powering the HPC system is not 100% renewable. The first is that this approach considers only compute node energy usage: the profiling data for the energy consumption of the system comes from the compute nodes the jobs run on. However, systems like ARCHER2 use energy on more than just compute nodes. There are the login nodes and filesystems associated with the system, the high-performance network connecting compute nodes and filesystems, and the cooling and power distribution systems of the hosting data centre.

We can roughly estimate the overhead of filesystems and networks for a system like ARCHER2 at around 10% of the compute node energy usage. Power distribution and cooling energy usage varies from centre to centre and across operating conditions (including the overall load on the system, the outside temperature, and other similar factors). The metric used to estimate or assess the energy spent on power and cooling infrastructure is PUE (Power Usage Effectiveness): the ratio of the total energy used by the data centre to the energy used by the computing equipment itself.

As already mentioned, data centre PUE is variable, but suppose we assume the PUE for the data centre hosting ARCHER2 is 1.1 (not an unreasonable assumption, but not a validated figure for the ACF, the Advanced Computing Facility where ARCHER2 is hosted; it is used here only as an example). That means an extra 10% on top of the energy used by the compute nodes, filesystems, and other ancillary resources is spent providing power and cooling to the system.

Considering these extra energy costs, the overall reduction in energy for operating the entire system is no longer 10-20% but 10-20% of the roughly 81% of energy spent on compute nodes (i.e. ~81% of the energy goes to compute nodes, ~9% to networks, filesystems, etc., and ~10% to power and cooling), or 8-16% overall in this scenario. This is still a great energy saving, but not quite as large as it may first seem.
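The scaling of a compute-node saving down to a whole-system saving can be written out as a short calculation. This is a minimal sketch using the illustrative values from the text (ancillary energy at 10% of compute-node energy, PUE of 1.1), which are assumptions rather than measured ARCHER2 figures. Like the 8-16% estimate, it treats ancillary and power/cooling energy as fixed in absolute terms; if instead the PUE ratio itself stayed constant, cooling energy would fall in proportion and the saving would be somewhat larger. The function names are hypothetical.

```python
from typing import Dict


def energy_breakdown(pue: float, ancillary_fraction: float) -> Dict[str, float]:
    """Shares of total facility energy.

    pue: total facility energy / IT equipment energy (compute + ancillary).
    ancillary_fraction: filesystems, network, login nodes, etc. as a fraction
        of compute-node energy.
    """
    compute = 1.0                          # normalise to compute-node energy
    ancillary = ancillary_fraction * compute
    it_energy = compute + ancillary        # everything PUE counts as "IT"
    facility = pue * it_energy             # adds power distribution and cooling
    return {
        "compute": compute / facility,
        "ancillary": ancillary / facility,
        "power_and_cooling": (facility - it_energy) / facility,
    }


def system_saving(node_saving: float, pue: float, ancillary_fraction: float) -> float:
    """Whole-system saving when only compute-node energy falls.

    Assumes ancillary and power/cooling energy stay fixed in absolute terms.
    """
    return node_saving * energy_breakdown(pue, ancillary_fraction)["compute"]
```

Computed exactly, `energy_breakdown(1.1, 0.10)` gives shares of about 83%, 8% and 9%, close to the rounded figures above, and `system_saving` turns a 10-20% node saving into roughly 8.3-16.5% for the whole system.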

This is worth noting because we are not evaluating the success of this measure by the absolute energy saving, but by the energy saving relative to the reduction in application performance. If you reduce the power draw by 20% but the job takes 25% longer to run, the energy consumed is unchanged (0.8 × 1.25 = 1.0), so there is no energy saving overall, although the lower power draw can still be important for data centres living within strict power budgets or facing electricity supply challenges.
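Because a job's energy is its average power draw multiplied by its runtime, a cut in power only saves energy if it outweighs the slowdown. The following minimal sketch captures that trade-off; the ratios passed in are illustrative, and the function names are hypothetical.

```python
def relative_energy(power_ratio: float, runtime_ratio: float) -> float:
    """Job energy relative to baseline, since energy = average power x runtime.

    power_ratio: new average power draw / baseline power draw.
    runtime_ratio: new runtime / baseline runtime.
    """
    return power_ratio * runtime_ratio


def break_even_runtime(power_ratio: float) -> float:
    """Runtime ratio at which a lower power draw stops saving any energy."""
    return 1.0 / power_ratio
```

A 20% cut in power with a 25% longer runtime gives `relative_energy(0.8, 1.25)` of 1.0, i.e. no saving, and `break_even_runtime(0.8)` is 1.25. The same power cut with only a 5% slowdown gives about 0.84, a 16% energy saving.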

It is also possible that reducing frequencies will improve the PUE metric as well (i.e. less power loss will be incurred and less cooling required when running at lower frequencies). This has yet to be evaluated, and will require monitoring over a reasonable period to assess the overall impact.

However, even without improvements in PUE, an 8-16% energy reduction is still significantly larger than the estimated 1-5% slowdown in jobs, giving an overall energy saving in the majority of cases. How large the impact is will depend on the job mix on the system, as some applications do not benefit from this operational mode and therefore still need to run at the higher frequency to maintain their energy efficiency.

This is where unintended consequences come into the equation: the carbon produced in generating the energy used to operate the system (what I'd call active carbon) is not the only consideration if you want to optimise the overall climate impact of an HPC system on the way to Net Zero computing. Indeed, for many HPC systems there is minimal (though, it should be noted, not zero) carbon associated with the energy used to operate them. Many data centres run on clean energy, i.e. renewable energy, or energy purchased along with carbon offset certificates. In this context, the greenhouse gas emissions, and therefore the climate impacts, associated with that energy are very low. I would argue they are non-zero because of the other factor we need to consider: embodied carbon.

Embodied carbon is the carbon emitted in the manufacture, transportation, and installation of an item. As such, even renewable energy sources such as solar panels and wind turbines have some carbon (or carbon-equivalent greenhouse gas) emissions associated with them, e.g. from the energy required to mine and fabricate the materials used to produce them. Until all sources of energy are carbon neutral, many products are likely to include some embodied carbon. And even if all energy production were carbon neutral, some energy sources could still be associated with greenhouse gas emissions (for instance, decaying vegetation in reservoirs used to store water for hydroelectric power).

So, as well as the carbon-equivalent emissions associated with producing the energy that runs our HPC systems, we also need to consider the embodied carbon within the systems themselves: the carbon emitted in creating the processors, memory, motherboards, power supplies, cases, etc. used to build our compute nodes. This embodied carbon must also be added to the equation when evaluating whether reducing the active energy of an HPC system has a net positive or negative effect on its overall climate impact.

Clearly, we can measure the energy used to run systems: it is monitored and billed, so it is easy to evaluate. Applying a carbon-mix calculation to that energy gives us the emissions associated with operating the HPC system. However, the embodied carbon of an HPC system is harder to quantify. It involves aggregating the carbon emitted in manufacturing a wide range of hardware and resources, data that may not be available or even known. Estimates for various systems put embodied emissions at anywhere from 10% to 50% of the total carbon emissions over the lifetime of a compute resource.

Both of these quantities depend on the specifics of the hardware and the manufacturing processes used to create the HPC system, and on how it is operated (the amount of active energy used, the carbon associated with that active energy, and so on). Furthermore, embodied carbon is a fixed cost, incurred when the system is created (or at set times if the system is upgraded during operation), whereas active energy use is spread throughout the operation of the service.

As such, embodied carbon as a proportion of the overall climate impact of an HPC system will depend on the system's lifetime: the longer the system runs, the smaller the share of its overall emissions that embodied carbon represents. This matters for the energy reductions being applied to systems like ARCHER2 because, whilst they reduce the energy used to run the system by more than they increase application runtimes, they also reduce the amount of work the system can do within a fixed lifetime.
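The dependence of the embodied share on lifetime can be shown with a one-line model: a fixed embodied cost plus a constant yearly amount of active carbon. This is a minimal sketch; the constant yearly rate and the example inputs are assumptions for illustration, not ARCHER2 data, and `embodied_share` is a hypothetical name.

```python
def embodied_share(embodied: float, active_per_year: float, years: float) -> float:
    """Embodied carbon as a fraction of lifetime emissions.

    embodied: one-off emissions from manufacture, transport, installation.
    active_per_year: emissions from the energy used to run the system each year
        (assumed constant here).
    Both in the same units, e.g. tonnes CO2e.
    """
    lifetime_total = embodied + active_per_year * years
    return embodied / lifetime_total
```

With made-up inputs of 1000 units embodied and 200 units of active carbon per year, the embodied share falls from 0.625 after three years to 0.5 after five and about 0.385 after eight. If the active carbon is zero, the share is 1.0 however long the system runs.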

If we assume a 5% reduction in application performance, that equates to a 5% reduction in the overall work done by the system. If embodied carbon is 50% of the overall carbon associated with an HPC system and we reduce the active carbon by 8%, total emissions fall by 4%, but the amount of work accomplished falls by 5%, so the emissions per unit of work done on the system actually increase by roughly 1%.

As noted above, if the active energy used to run the system is effectively climate neutral, reducing it achieves no reduction in the system's climate impact, but we still get 5% less work for the same embodied carbon. Not a huge loss, but still an adverse impact we had not been considering: an unintended consequence.
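The arithmetic in the last two paragraphs can be written as a small model of emissions per unit of work. This is a minimal sketch under the same simplifying assumptions as the text: a fixed system lifetime, total work proportional to application performance, and embodied carbon unaffected by how the system is run. The function names are hypothetical.

```python
def emissions_per_work(embodied_fraction: float, active_reduction: float,
                       work_reduction: float) -> float:
    """Lifetime emissions per unit of work, relative to baseline (1.0 = unchanged).

    embodied_fraction: embodied share of baseline lifetime emissions (0..1).
    active_reduction: fractional cut in active carbon over the lifetime.
    work_reduction: fractional cut in total work over the same fixed lifetime.
    """
    embodied = embodied_fraction  # one-off cost, unchanged by the frequency setting
    active = (1.0 - embodied_fraction) * (1.0 - active_reduction)
    return (embodied + active) / (1.0 - work_reduction)


def break_even_embodied_fraction(active_reduction: float, work_reduction: float) -> float:
    """Embodied share above which the change increases emissions per unit of work.

    Solves f + (1 - f)(1 - a) = 1 - w for f; assumes active_reduction > 0.
    """
    return (active_reduction - work_reduction) / active_reduction
```

`emissions_per_work(0.5, 0.08, 0.05)` evaluates to 0.96 / 0.95, about 1.011 (the roughly 1% increase above), and setting the embodied fraction to 1.0 to model climate-neutral active energy gives 1 / 0.95, about 1.053. With the same 8% and 5% inputs, the break-even embodied share is 0.375: at a 10% embodied share the model instead gives about 0.977, a net improvement, which shows how sensitive the conclusion is to that ratio.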

These numbers will be smaller or larger depending on the assumptions or data used for the ratio of embodied to active carbon, the savings that reducing energy usage delivers for a given system, and the carbon mix of the energy used to run it. All of these factors require further research to establish their possible and probable ranges, and to estimate more accurately the overall climate impact of approaches like the active energy management discussed here.

As we noted earlier in this post, the aim of this change for ARCHER2 was to reduce the power draw and energy use of the system, and this has other potentially desirable outcomes. For example, the energy consumed to run an HPC system costs money, and the more it can be reduced, the more money can be spent on other research, equipment, or facilities. Furthermore, reducing the overall energy requirements of a country or region can help progress towards net zero energy systems by reducing the demands on energy generation.

All of the above means that the energy management approach may well be beneficial for those running HPC systems. However, as with much in research, it is also clear that the relationship between the energy use of HPC systems and their associated emissions may be more complicated than it initially appears, and further research is needed for a full understanding of the issue. This is exactly what is expected from pilot research projects like those EPCC is involved with in this Net Zero research programme, and we hope it will inform future research directions and research funding.

Further information

See also the EPCC article Net Zero: investigating the energy use of ARCHER2.