Met Office

Improving UK weather forecasts

The challenge

The UK weather forecast you see on TV typically resolves the atmosphere only down to scales of about 1km, yet much of the interesting weather that matters at a local level, such as clouds and turbulent flows, happens below this scale.

High-resolution modelling of local atmospheric conditions down to 1m scales provides numerous benefits, not least highly accurate forecasts for challenging local conditions.

Heathrow Airport, for example, is prone to fog, which requires a significant gap between aircraft. Being able to simulate when the fog will clear is hugely valuable, but it is also one of the major challenges of weather forecasting because of the massive amount of computational power involved.

The Met Office had an existing high resolution atmospheric model, the Large Eddy Model (LEM), which was originally developed in the 1980s and written in a mixture of Fortran 77 and 66.

The LEM had served the Met Office and UK universities well for around 30 years, but it was designed before distributed-memory High Performance Computing (HPC) machines became mainstream. Although the model had been adapted in the early 1990s for the Cray machines of that era, it struggled to take advantage of modern supercomputers.

This meant that scientists were limited in the size of the system they could model (in both domain size and resolution). Around 15 million grid points was the realistic maximum, with runs taking many hours or days on at most 500 cores.

How we helped

We worked with the Met Office and the Natural Environment Research Council (NERC) to redevelop the LEM. While there were numerous code-level activities, such as moving to Fortran 2003, the main focus was to enable a step change in capability.

The Met Office’s new atmospheric model is called MONC, and from day one we ensured that the code would run efficiently on large HPC machines. While we aimed to keep much of the scientific functionality the same in this initial version, climate and weather scientists were keen to treat the model as a toolkit for atmospheric science into which they could plug their own science.

The model is now routinely used across the UK and the wider world, and domains of up to 2.1 billion grid points have been modelled on over 32,768 cores of ARCHER (the UK’s previous national supercomputer).
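To put the step change in scale into numbers, the short sketch below compares the LEM's realistic limit quoted earlier (around 15 million grid points on at most 500 cores) with the largest MONC runs (2.1 billion grid points on 32,768 cores). It is only back-of-the-envelope arithmetic under stated assumptions: it treats "over 32,768 cores" as exactly 32,768, assumes the LEM grid and core maxima applied to the same run, and assumes grid points are spread evenly across cores. The function name `points_per_core` is hypothetical and is not part of MONC.

```python
# Back-of-the-envelope comparison of LEM and MONC run sizes.
# Assumptions: the LEM maxima (15M points, 500 cores) describe one run,
# "over 32,768 cores" is treated as exactly 32,768, and points are
# split evenly across cores (a simplification of real decompositions).
LEM_GRID_POINTS = 15_000_000
LEM_CORES = 500
MONC_GRID_POINTS = 2_100_000_000
MONC_CORES = 32_768


def points_per_core(grid_points: int, cores: int) -> float:
    """Average grid points per core, assuming an even domain decomposition."""
    return grid_points / cores


# How much larger the modelled domain is, and how many more cores were used.
domain_growth = MONC_GRID_POINTS / LEM_GRID_POINTS
core_growth = MONC_CORES / LEM_CORES

print(f"LEM:  {points_per_core(LEM_GRID_POINTS, LEM_CORES):,.0f} points per core")
print(f"MONC: {points_per_core(MONC_GRID_POINTS, MONC_CORES):,.0f} points per core")
print(f"Domain {domain_growth:.0f}x larger on {core_growth:.1f}x more cores")
```

Under these assumptions the domain grows about 140-fold while the core count grows about 65-fold, so each core handles roughly twice as many grid points. This arithmetic says nothing about run times, which depend on far more than grid size.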

These figures will increase in the coming years, and MONC has already enabled numerous scientific discoveries that would have been untenable with previous-generation models.

These include the study of low-level clouds over West Africa as part of the Dynamics Aerosol Chemistry Cloud Interactions in West Africa (DACCIWA) project, which used MONC to explore the impact of the tripling of polluting emissions expected in southern West Africa by 2030 as a result of economic and population growth.

The toolkit nature of MONC has meant that projects have taken the core MONC model and built on it in entirely new ways. One example is the MPIC model from Leeds and St Andrews, which uses a “parcel in cell” approach to atmospheric modelling.

The MONC project has undoubtedly been a great success. Since development finished in 2016, it has been hosted on the Met Office’s science repository, with significant involvement from the community.

While EPCC continues to be involved in its development, a steering board has been set up to take overall charge of the model. The National Centre for Atmospheric Science (NCAS) is responsible for supporting the model across a wide range of HPC machines, including ARCHER2.

Dr Ben Shipway, Met Office Scientist

Numerical Weather Prediction (NWP) and climate models have developed considerably over the past few decades, along with the computational power to drive them.

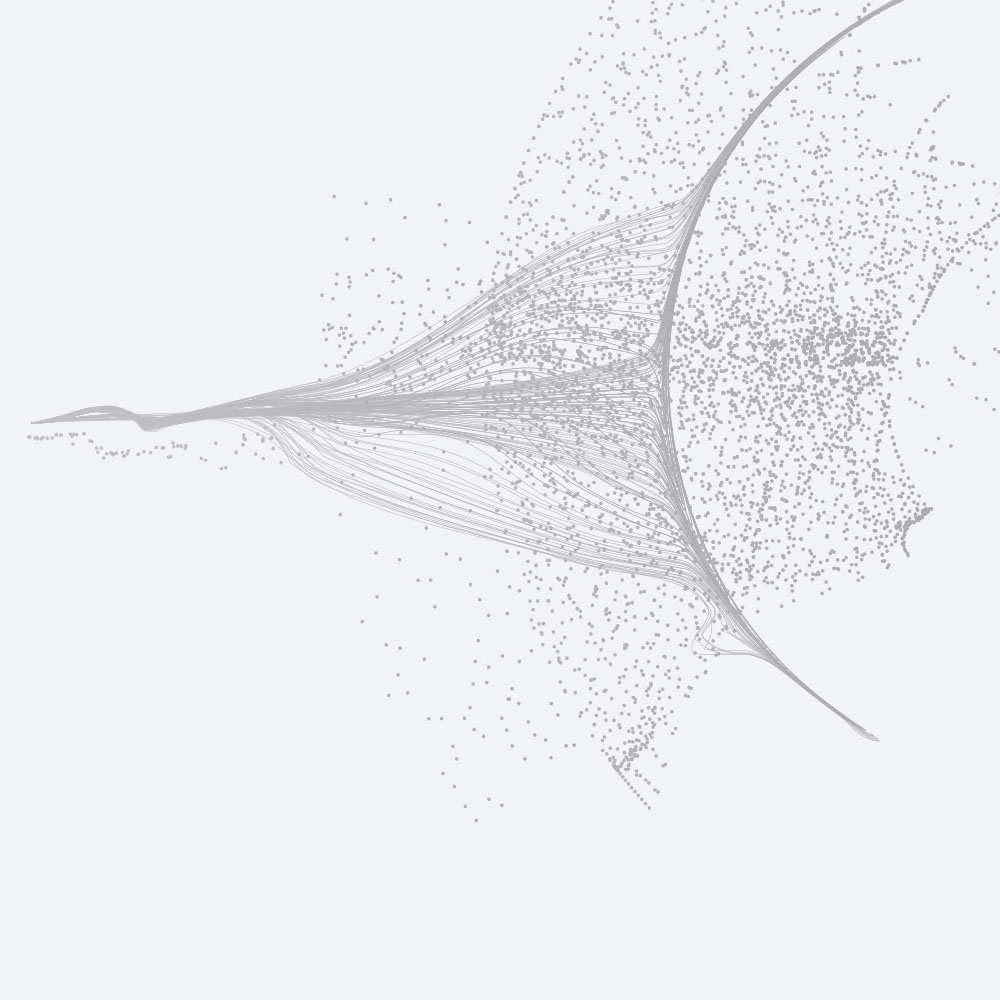

Thirty years ago, the resolvable length scales of atmospheric flows in operational models were on the order of 100km, whereas now they are on the order of 10km for global operational models and 1km for regional models. Increased resolution brings increased accuracy, but even at these higher resolutions the fundamental fluid motions of clouds and turbulent flows remain at the subgrid scale.

A key tool for understanding the fundamental physics of these flows, and thus for developing the parametrizations that represent them in coarser models, is Large Eddy Simulation (LES).