Accelerating the simulation of galactic images with OpenMP Target Offload

11 July 2023

EPCC has been involved with the Large Synoptic Survey Telescope (LSST) for over a decade. As part of LSST:UK’s contribution to the Dark Energy Science Collaboration’s work, we have recently introduced GPU acceleration to the GalSim codebase, significantly speeding up the simulation of galactic images.

The Dark Energy Science Collaboration (DESC) is the international science collaboration that will make high-accuracy measurements of fundamental cosmological parameters using data from the Rubin Observatory Legacy Survey of Space and Time (LSST).

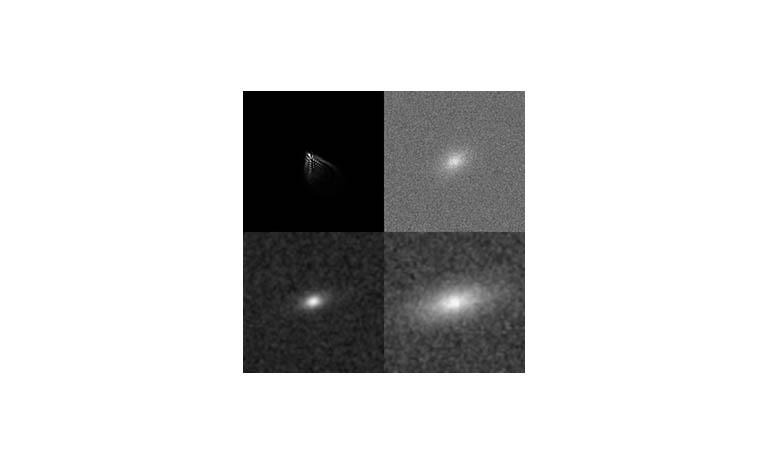

GalSim is an open-source galactic image simulation code. It forms the basis for ImSim, DESC’s image simulation code, which is used to generate fake telescope images. These images can then be used to test LSST’s image processing pipeline in preparation for the telescope being commissioned. GalSim is mostly written in Python, but some of its low-level computations are implemented in C++. The sensor model, which simulates the behaviour of the silicon CCD sensors used within the LSST, is an especially performance-critical part of the code and an obvious target for optimisation.

DESC were keen to keep GalSim cross-platform and vendor neutral, so OpenMP Target Offload, which supports multiple GPU architectures and compiler suites, was considered a better option than (for example) programming to NVIDIA’s CUDA platform directly. Another advantage of OpenMP is that it is a directives-based approach; rather than having to entirely rewrite the section of code to be accelerated and explicitly orchestrate data transfers and kernel invocations, the programmer need only annotate the performance-critical loops and the compiler will take care of offloading them to an accelerator device. At least, that’s the theory.
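To give a flavour of what "annotating a loop" means, here is a minimal sketch. It is not taken from GalSim: the function `accumulate_photons`, its parameters, and the one-dimensional row of unit-width pixels are all hypothetical, chosen only to illustrate the directive. If the code is compiled without offloading support, or no device is available, the loop would normally just run on the CPU.

```cpp
#include <cassert>
#include <vector>

// Hypothetical example, not GalSim code: deposit each photon's flux into the
// pixel it lands on, for a 1D row of n_pixels pixels of unit width.
std::vector<double> accumulate_photons(const std::vector<double>& x,
                                       const std::vector<double>& flux,
                                       int n_pixels)
{
    assert(x.size() == flux.size());
    std::vector<double> image(n_pixels, 0.0);

    // Map clauses work on raw pointers and array sections, not on
    // std::vector objects, so take pointers to the underlying storage.
    const double* xp = x.data();
    const double* fp = flux.data();
    double* img = image.data();
    const int n = static_cast<int>(x.size());

    // The directive is the only change relative to the serial loop: it asks
    // the compiler to run the iterations on the device, copying the photon
    // data in and the image in and out.
    #pragma omp target teams distribute parallel for \
        map(to: xp[0:n], fp[0:n]) map(tofrom: img[0:n_pixels])
    for (int i = 0; i < n; ++i) {
        // Ignore photons that fall outside the row of pixels.
        if (xp[i] >= 0.0 && xp[i] < n_pixels) {
            const int pix = static_cast<int>(xp[i]);
            // Several photons may hit the same pixel at the same time.
            #pragma omp atomic update
            img[pix] += fp[i];
        }
    }
    return image;
}
```

Even in this toy case the loop needed small adjustments (raw pointers, an atomic update) before it was safe to offload.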

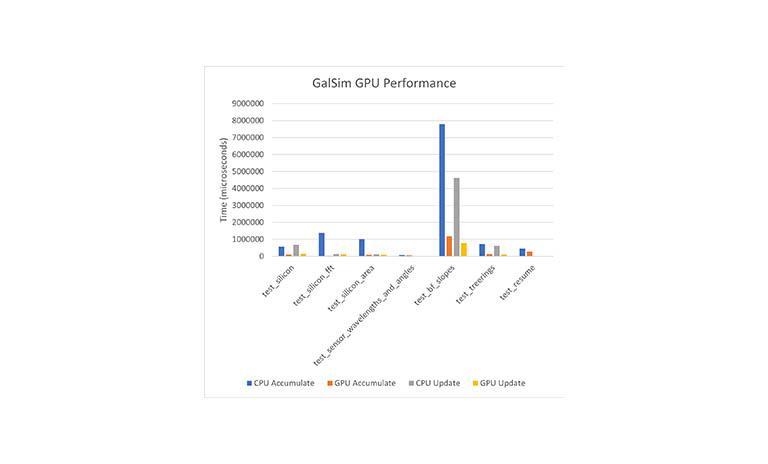

Figure 1: Performance of GalSim sensor test suite with and without GPU acceleration.

Our work involved applying OpenMP Target Offload to two key parts of the sensor model: the main photon accumulation loop, which rains photons down onto the simulated sensor, and the pixel boundary update, which deals with the subtle but important distortion of the pixel shapes as electrical charge accumulates on the sensor. Although OpenMP’s directives-based approach is significantly less intrusive than CUDA, it was still necessary to rewrite portions of the code and remove some C++ features and library calls that are not currently supported when offloading. It was also necessary in many cases to manually specify when data should be copied to and from GPU memory, in order to minimise costly transfers over the slow bus between CPU and GPU.
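The idea of controlling transfers by hand can be sketched as follows. Again this is an illustration under stated assumptions rather than GalSim's actual code: `run_steps`, `charge`, `deposit` and `gain` are hypothetical names, and the two simple loops merely stand in for kernels such as photon accumulation and the pixel boundary update. An enclosing `target data` region keeps the array resident in device memory across many kernel launches, so it is copied once in each direction instead of once per loop.

```cpp
#include <cassert>
#include <vector>

// Hypothetical example, not GalSim code: run `steps` rounds of two kernels
// over a pixel array while the array stays in device memory throughout.
std::vector<double> run_steps(std::vector<double> charge,
                              double deposit, double gain, int steps)
{
    assert(steps >= 0);
    double* q = charge.data();
    const int n = static_cast<int>(charge.size());

    // Copy the array to the device on entry to this region and back to the
    // host on exit. Nothing is transferred in between.
    #pragma omp target data map(tofrom: q[0:n])
    {
        for (int s = 0; s < steps; ++s) {
            // The data is already present on the device, so these map
            // clauses reuse the existing copy rather than transferring it.
            #pragma omp target teams distribute parallel for map(tofrom: q[0:n])
            for (int i = 0; i < n; ++i) {
                q[i] += deposit;   // stand-in for charge accumulation
            }

            #pragma omp target teams distribute parallel for map(tofrom: q[0:n])
            for (int i = 0; i < n; ++i) {
                q[i] *= gain;      // stand-in for a follow-up update
            }
        }
    }
    return charge;
}
```

Without the outer `target data` region, each of the two loops would copy the whole array to the device and back on every step.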

A significant challenge was integrating OpenMP Target Offload support into GalSim’s build system. This technology is not yet as mature as CUDA and is quite sensitive to the specific compiler versions and flags used, while GalSim uses a build system based on Python’s setuptools, which can be difficult to modify. But after some work it was possible to build GalSim’s C++ library with full offloading support, using the Clang compiler suite.

Development and testing were carried out on the Perlmutter system at NERSC. Perlmutter, which provides over 7,000 NVIDIA A100 GPUs, is the computer DESC plan to use for most of their simulation runs going forward, so it was critical that GalSim performed well there. Across the whole of the GalSim sensor test suite, the accelerated GPU versions of the loops were on average 4 times faster than the CPU versions. Even more encouragingly, when only compute time was measured and data transfer time disregarded, the accelerated loops ran 18 times faster on GPU than on CPU.

Figure 2: Some example images generated by GalSim.

The accelerated sensor model has now been merged into the main GalSim codebase, but there is still more to do. The eventual aim is to accelerate the entire photon pipeline: from sky simulation, through telescope optics simulation, and finally to sensor simulation. Ideally the photon data should remain in GPU memory throughout, minimising the inefficiency of transferring it over the bus. However, this poses some architectural challenges, and detailed design work still needs to be done. We also intend to test the accelerated code on other systems, including EPCC’s Cirrus, in the near future.

Links

Dark Energy Science Collaboration

You can read about another EPCC collaboration with LSST:UK in this article on our website: Parallelising Macauff photometric catalogue software for the LSST:UK cross-match service.