Biomedical urgent computing in the Exascale era

5 July 2022

CompBioMed is a European Commission H2020-funded Centre of Excellence focused on the use and development of computational methods for biomedical applications.

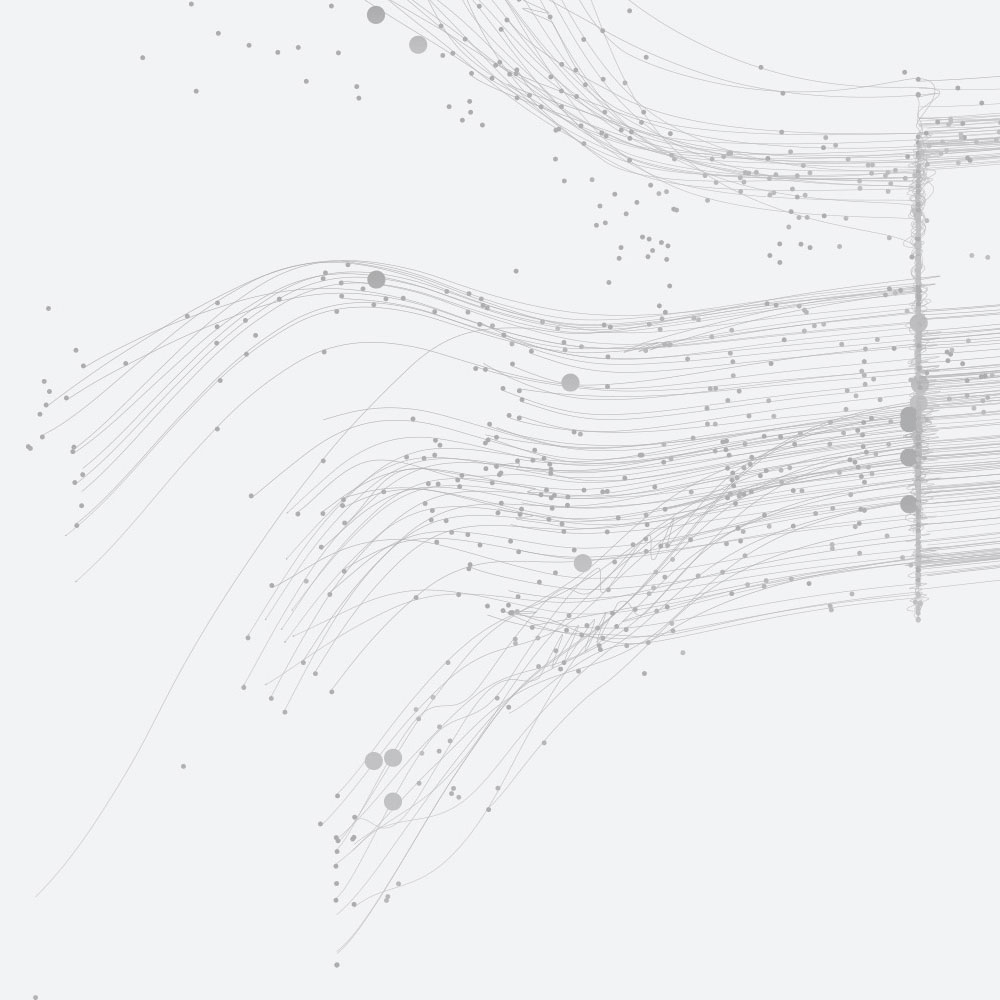

The image shows a simulation of the effect on red blood cells of a flow diverter implanted in a brain artery to reduce local pressure.

In the field of personalised medicine, one example of urgent computing is the placement of a stent (or flow diverter) in an artery in the brain: once the stent is inserted, it cannot be moved or replaced. Surgeons of the future will use large-scale simulations of stent placement, configured for the individual being treated. These simulations will use live scans to help identify the best stent, along with its location and orientation.

Part of CompBioMed’s remit is to prepare biomedical applications for future Exascale machines, which will have very high node counts. Individual nodes have a reasonable mean time to failure; however, when hundreds of thousands of nodes are collected together in a single system, the mean time to failure of the system as a whole is much lower. Moreover, given that Exascale applications will employ MPI, and that a typical MPI simulation aborts if a single MPI task fails, a single node failure will cause an entire simulation to crash.
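To see why the system-wide mean time to failure (MTTF) drops so sharply, the sketch below uses the textbook simplification that node failures are independent and exponentially distributed, so their failure rates add and the system MTTF is the node MTTF divided by the node count. The node MTTF of five years and the 100,000-node machine are hypothetical figures chosen only for illustration.

```python
import math


def system_mttf_hours(node_mttf_hours: float, n_nodes: int) -> float:
    """MTTF of a system that fails as soon as any one of n_nodes fails.

    Assumes independent, exponentially distributed node failures, so the
    per-node failure rates add: lambda_sys = n_nodes / node_mttf_hours.
    """
    return node_mttf_hours / n_nodes


def job_survival_probability(node_mttf_hours: float, n_nodes: int,
                             job_hours: float) -> float:
    """Probability that an MPI job spanning n_nodes runs for job_hours
    without a single node failure (and hence without aborting)."""
    return math.exp(-job_hours / system_mttf_hours(node_mttf_hours, n_nodes))


# Hypothetical figures: each node has an MTTF of 5 years; 100,000 nodes.
node_mttf = 5 * 365 * 24  # 43,800 hours
mttf_minutes = system_mttf_hours(node_mttf, 100_000) * 60
p_survive = job_survival_probability(node_mttf, 100_000, 1.0)
print(f"System MTTF: {mttf_minutes:.1f} minutes")
print(f"P(a 1-hour full-machine job completes): {p_survive:.3f}")
```

Under these assumptions the whole machine fails roughly every half hour, and a one-hour job spanning every node completes only about one time in ten, which is why an unprotected MPI run is a poor fit for urgent computing.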

Computational biomedical simulations running on these Exascale platforms may well be time- and safety-critical, with results required at the operating table faster than real time. Given that we intend to run these urgent computations on machines with an increased probability of node failure, one mitigation is to employ what we have called Resilient HPC Workflows.

Two classes of such workflows employ replication. The first resilient workflow replicates computation: the same simulation is launched concurrently on multiple HPC platforms. The chance of all the platforms failing is far lower than the chance of any individual platform failing; however, such replicated computation can prove expensive, especially when employing the millions of cores expected in Exascale platforms. The second resilient workflow replicates data: restart files are shared across a distributed network of data and/or HPC platforms. A simulation whose host HPC system crashes can then simply continue from where it left off, using its latest restart file on another platform.
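The trade-off in replicated computation can be made concrete with a small sketch: if each platform independently fails with probability p during the run, all k replicas fail with probability p to the power k, while the cost grows linearly with k. The independence assumption is optimistic (a software bug, for example, would hit every replica), and the figures in the usage lines are hypothetical.

```python
def p_all_fail(p_single: float, k: int) -> float:
    """Probability that all k concurrent replicas fail, assuming each
    platform fails independently with probability p_single."""
    return p_single ** k


def replicated_core_hours(cores: int, hours: float, k: int) -> float:
    """Core-hours consumed by running k identical copies of one simulation."""
    return cores * hours * k


# Hypothetical: a 10% chance that any one platform fails during the run,
# a simulation using one million cores for two hours.
for k in (1, 2, 3):
    print(f"k={k}: P(no result) = {p_all_fail(0.1, k):.4f}, "
          f"cost = {replicated_core_hours(1_000_000, 2.0, k):,.0f} core-hours")
```

Each extra replica cuts the probability of getting no result by a factor of ten in this example, but adds another two million core-hours, which is the expense the paragraph above warns about.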

In both classes, the simulation’s results will be available as if no catastrophic failure had occurred; however, the first class, where computation is replicated, will produce results more quickly, as the simulation will not have to wait in a second batch queue. On the other hand, from personal experience, the time needed to coordinate multiple HPC platforms to start a simulation concurrently increases as the cube of the number of platforms. As such, the total turnaround time of the second class, i.e., where data is replicated, is more likely to be shorter. Turnaround might be reduced further by using batch reservations.
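The turnaround argument can be expressed as a toy model. In the sketch below, the cubic coordination cost follows the personal observation above, while every constant (the coordination unit, queue wait, restart-file interval and staging time) is a hypothetical placeholder; the model also ignores failures among the replicated-computation runs themselves.

```python
from typing import Optional


def replicated_compute_turnaround(run_h: float, k: int,
                                  coord_unit_h: float) -> float:
    """Turnaround when k platforms must be coordinated to start together.

    Coordination time is modelled as coord_unit_h * k**3 (the cube-law
    observation); the run itself then takes run_h hours.
    """
    return coord_unit_h * k**3 + run_h


def replicated_data_turnaround(run_h: float, queue_h: float, restage_h: float,
                               restart_interval_h: float,
                               failed_at_h: Optional[float] = None) -> float:
    """Turnaround when one platform runs the job and restart files are replicated.

    Without a failure: one queue wait plus the run. After a failure at
    failed_at_h hours into the run, the job waits in a second queue, its
    latest restart file is staged (restage_h), and the work done since that
    restart file was written must be recomputed.
    """
    total = queue_h + run_h
    if failed_at_h is not None:
        lost_h = failed_at_h % restart_interval_h  # work since last restart file
        total += queue_h + restage_h + lost_h
    return total


# Hypothetical numbers: a 2-hour run, 3 coordinated platforms at 0.5 h per unit,
# versus a 1-hour queue, hourly restart files and a failure 1.5 hours in.
print(f"replicated computation: {replicated_compute_turnaround(2.0, 3, 0.5):.1f} h")
print(f"replicated data:        "
      f"{replicated_data_turnaround(2.0, 1.0, 0.1, 1.0, failed_at_h=1.5):.1f} h")
```

With these placeholder values, data replication finishes well ahead even though it pays for a second queue wait, because the cubic coordination term dominates as soon as more than a couple of platforms are involved.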

The LEXIS project [1] has built an advanced engineering platform at the confluence of HPC, Cloud and Big Data, which leverages large-scale geographically-distributed resources from the existing HPC infrastructure, employs Big Data analytics solutions and augments them with Cloud services.

LEXIS has the first form of resilient workflow in its arsenal. As part of CompBioMed, EPCC is working with LEXIS and the University of Amsterdam (UvA) to create the second form: resilience via data replication. The simulation employed is the HemoFlow [2] application from UvA. Under normal operating conditions, the application writes frequent snapshots and, less frequently, restart files.
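How often restart files should be written is a trade-off between the cost of writing them and the work lost after a failure. A common rule of thumb is Young's first-order approximation, T ≈ sqrt(2 δ M), where δ is the time to write one restart file and M is the system MTTF. This is offered as a generic illustration, not as the way HemoFlow chooses its restart frequency; the figures in the usage line are hypothetical.

```python
import math


def young_interval(write_time: float, system_mttf: float) -> float:
    """Young's approximation to the optimal compute time between restart files.

    Both arguments (and the result) share one time unit. The approximation
    assumes write_time is much smaller than system_mttf.
    """
    if write_time <= 0 or system_mttf <= 0:
        raise ValueError("times must be positive")
    return math.sqrt(2.0 * write_time * system_mttf)


# Hypothetical: a restart file takes 2 minutes to write; system MTTF is 26 minutes.
interval = young_interval(2.0, 26.0)
print(f"Write a restart file roughly every {interval:.1f} minutes of compute")
```

A shorter system MTTF pushes the interval down, so the less frequent restart files of normal operation may need to become more frequent on very large machines.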

For our workflow, we have created a data network and an HPC network. The data network includes three data nodes: one at the SARA [3] HPC centre in the Netherlands, one at the LRZ [4] HPC centre in Germany, and one at the IT4I [5] HPC centre in the Czech Republic. It is based on nodes of CompBioMed and of the LEXIS “Distributed Data Infrastructure”, relying on the iRODS/EUDAT-B2SAFE system. The HPC network includes five HPC systems: Cirrus [6] at EPCC in the UK, two at LRZ (including SuperMUC-NG [7]) and two at IT4I. Both networks are distributed across different countries to mitigate a centre-wide failure (e.g., a power outage) at the initial HPC centre. The application has been ported to all the HPC systems in advance. The input data resides on the LEXIS Platform and is also replicated across the data nodes. The essential LEXIS Platform components are set up across its core data centres (IT4I, LRZ, ICHEC [8] and ECMWF [9]), with redundancy built in for failover and/or load balancing. These components include an advanced workflow manager, namely the LEXIS Orchestrator System.
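The topology above can be written down as data, which makes the centre-wide failover rule easy to check. In this sketch the centres and countries come from the text, but only Cirrus and SuperMUC-NG are named there: "LRZ system B", "IT4I system A" and "IT4I system B" are hypothetical placeholders, and the two helper functions are illustrative rather than part of the LEXIS Platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    centre: str
    country: str


DATA_NODES = [
    Site("SARA data node", "SARA", "NL"),
    Site("LRZ data node", "LRZ", "DE"),
    Site("IT4I data node", "IT4I", "CZ"),
]

HPC_SYSTEMS = [
    Site("Cirrus", "EPCC", "UK"),
    Site("SuperMUC-NG", "LRZ", "DE"),
    Site("LRZ system B", "LRZ", "DE"),    # hypothetical placeholder name
    Site("IT4I system A", "IT4I", "CZ"),  # hypothetical placeholder name
    Site("IT4I system B", "IT4I", "CZ"),  # hypothetical placeholder name
]


def failover_candidates(failed: Site, systems: list[Site]) -> list[Site]:
    """HPC systems outside the failed system's centre, so that a
    centre-wide outage (e.g. loss of power) cannot take them down too."""
    return [s for s in systems if s.centre != failed.centre]


def surviving_data_nodes(failed_centre: str, nodes: list[Site]) -> list[Site]:
    """Data nodes still reachable if an entire centre goes offline."""
    return [n for n in nodes if n.centre != failed_centre]


# If the job was running on SuperMUC-NG and LRZ suffers an outage:
print([s.name for s in failover_candidates(HPC_SYSTEMS[1], HPC_SYSTEMS)])
print([n.name for n in surviving_data_nodes("LRZ", DATA_NODES)])
```

Because each data node sits in a different country, losing any one centre still leaves two copies of every replicated restart file.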

The test workflow is under construction and will progress as follows. The workflow manager submits the simulation at the initial HPC centre. As soon as the simulation begins and restart files are created, the workflow manager replicates these restart files across all the data nodes. As the simulation progresses, we will emulate a node failure: a single MPI task will abort, causing the entire simulation to fail. This failure will trigger the workflow manager to restart the simulation on one of the remote HPC platforms, using the latest pre-staged restart file. The platform will be chosen automatically, to give the fastest turnaround, by the LEXIS Platform’s broker tool, namely its Dynamic Allocation Module. Once the target HPC platform is known, the latest restart file is staged there from the closest data node.
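The recovery logic described above can be sketched in a few lines. This is not the LEXIS Orchestrator System or Dynamic Allocation Module API: `RestartCatalogue` and `plan_recovery` are hypothetical names, the per-platform turnaround estimates stand in for the broker's decision, and the latency table stands in for "closest data node".

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RestartCatalogue:
    """Tracks which restart files (by simulation step) each data node holds."""
    data_nodes: list[str]
    held: dict[str, set[int]] = field(default_factory=dict)

    def replicate(self, step: int) -> None:
        # Copy a newly written restart file to every data node.
        for node in self.data_nodes:
            self.held.setdefault(node, set()).add(step)

    def latest_step(self) -> int | None:
        # Most recent restart step available on any data node.
        steps = [s for held in self.held.values() for s in held]
        return max(steps) if steps else None


def plan_recovery(catalogue: RestartCatalogue, failed_platform: str,
                  est_turnaround_h: dict[str, float],
                  latency_ms: dict[str, dict[str, float]]) -> tuple[str, int, str]:
    """Return (target platform, restart step, source data node) after a failure.

    est_turnaround_h: hypothetical estimate per platform (queue wait + run).
    latency_ms[target][node]: hypothetical latency from each data node to target.
    """
    step = catalogue.latest_step()
    if step is None:
        raise RuntimeError("no restart file has been replicated yet")
    # Fastest estimated turnaround, excluding the platform that just failed.
    target = min((p for p in est_turnaround_h if p != failed_platform),
                 key=est_turnaround_h.__getitem__)
    # Stage the latest restart file from the closest node that holds it.
    holders = [n for n, held in catalogue.held.items() if step in held]
    source = min(holders, key=lambda n: latency_ms[target][n])
    return target, step, source


catalogue = RestartCatalogue(["SARA", "LRZ", "IT4I"])
for step in (1000, 2000, 3000):
    catalogue.replicate(step)
print(plan_recovery(catalogue, "Cirrus",
                    {"Cirrus": 0.5, "SuperMUC-NG": 3.5, "IT4I system A": 1.2},
                    {"IT4I system A": {"SARA": 20.0, "LRZ": 8.0, "IT4I": 1.0}}))
```

Separating "which restart file" from "which platform" and "which data node" mirrors the three decisions in the workflow, so each could later be replaced by a real broker query without touching the others.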

The staging of data for Exascale simulations must naturally consider both the amount of data that needs to be moved and the bandwidth required; however, given that biomedical simulations can contain patient-sensitive data, data staging must also comply with all relevant legal requirements and follow the FAIR principles [10] of research data management. The volume of data can be handled by employing the existing GEANT2 network [11], whilst the FAIR data principles and legal constraints are addressed by the LEXIS Platform itself, as its underlying mechanism for staging data employs EUDAT [12].
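A first estimate of staging time is simply data volume divided by usable bandwidth. The sketch below does that arithmetic, converting gigabytes to gigabits; the efficiency factor (for protocol overhead and shared links) and the 1 TB restart file on a 100 Gbit/s link are hypothetical values, not measurements from this workflow.

```python
def staging_time_s(size_gb: float, bandwidth_gbit_s: float,
                   efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes over a link of bandwidth_gbit_s
    gigabits per second, of which only a fraction `efficiency` is usable."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_gb * 8 / (bandwidth_gbit_s * efficiency)


# Hypothetical: a 1 TB restart file over a 100 Gbit/s link.
print(f"ideal link:      {staging_time_s(1000, 100):.0f} s")
print(f"50% efficiency:  {staging_time_s(1000, 100, 0.5):.0f} s")
```

Even at a few minutes per transfer, pre-staging restart files while the simulation is still running keeps this cost off the critical path after a failure.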

Exascale supercomputers bring new challenges, and our Resilient HPC Workflow mitigates the low-probability but high-impact risk of node failure for urgent computing. These supercomputers are on the horizon and present an exciting opportunity to realise personalised medicine via ab initio computational biomedical simulations which, in this case, will provide live, targeted guidance to surgeons during life-saving operations.