DiRAC: Tursa (GPU)

Tursa is an Extreme Scaling GPU-based DiRAC system.

EPCC hosts the Extreme Scaling component of the DiRAC facility. The Extreme Scaling GPU-based system, Tursa, is an Eviden Sequana XH2000 system housed at EPCC's Advanced Computing Facility. EPCC also provides service support for the entire DiRAC consortium.

Technology

Nodes

6 CPU nodes

64 GPU nodes with A100-80

114 GPU nodes with A100-40

CPUs

GPU Nodes: 2x AMD EPYC Rome 7272 (12-core, 2.9 GHz) per node

CPU Nodes: 2x AMD EPYC Rome 7H12 (64-core, 2.66 GHz) per node

Total CPU cores

3,504

GPUs

64 GPU nodes with A100-80

4x NVIDIA Ampere A100-80 GPU

2x AMD EPYC 7413 24-core processors

NVLink intranode GPU interconnect

4x 200 Gbps NVIDIA InfiniBand interfaces

114 GPU nodes with A100-40

4x NVIDIA Ampere A100-40 GPU

2x AMD EPYC 7302 16-core processors

NVLink intranode GPU interconnect

4x 200 Gbps NVIDIA InfiniBand interfaces

Total GPU cores

291,840 Tensor cores, 3,151,872 CUDA cores

System Memory details

GPU Nodes: 1,024 GB per node

CPU Nodes: 256 GB per node

Over 180 TB of RAM
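As a rough cross-check of the "over 180 TB" figure, the per-node memory can be multiplied by the node counts from the Nodes list. This is a minimal sketch: it assumes the 1,024 GB per-node figure applies to both the A100-80 and the A100-40 GPU nodes, and the dictionary keys are illustrative names only, not identifiers used by the system.

```python
# Node counts and per-node memory as listed on this page.
# Assumption: "1,024 GB per node" applies to both GPU node types.
NODE_INVENTORY = {
    "gpu_a100_80": {"count": 64, "mem_gb": 1024},
    "gpu_a100_40": {"count": 114, "mem_gb": 1024},
    "cpu": {"count": 6, "mem_gb": 256},
}


def total_memory_gb(inventory):
    """Sum node count x per-node memory over every node type."""
    return sum(spec["count"] * spec["mem_gb"] for spec in inventory.values())


total = total_memory_gb(NODE_INVENTORY)
print(f"{total:,} GB")  # 183,808 GB
```

Reading GB and TB as decimal units, 183,808 GB is about 184 TB, which is consistent with the stated total.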

Storage technologies and specs

Working storage is provided by a 5.1 PB DDN Lustre file system, with a 22.6 PiB tape library for backup and archive of user data.

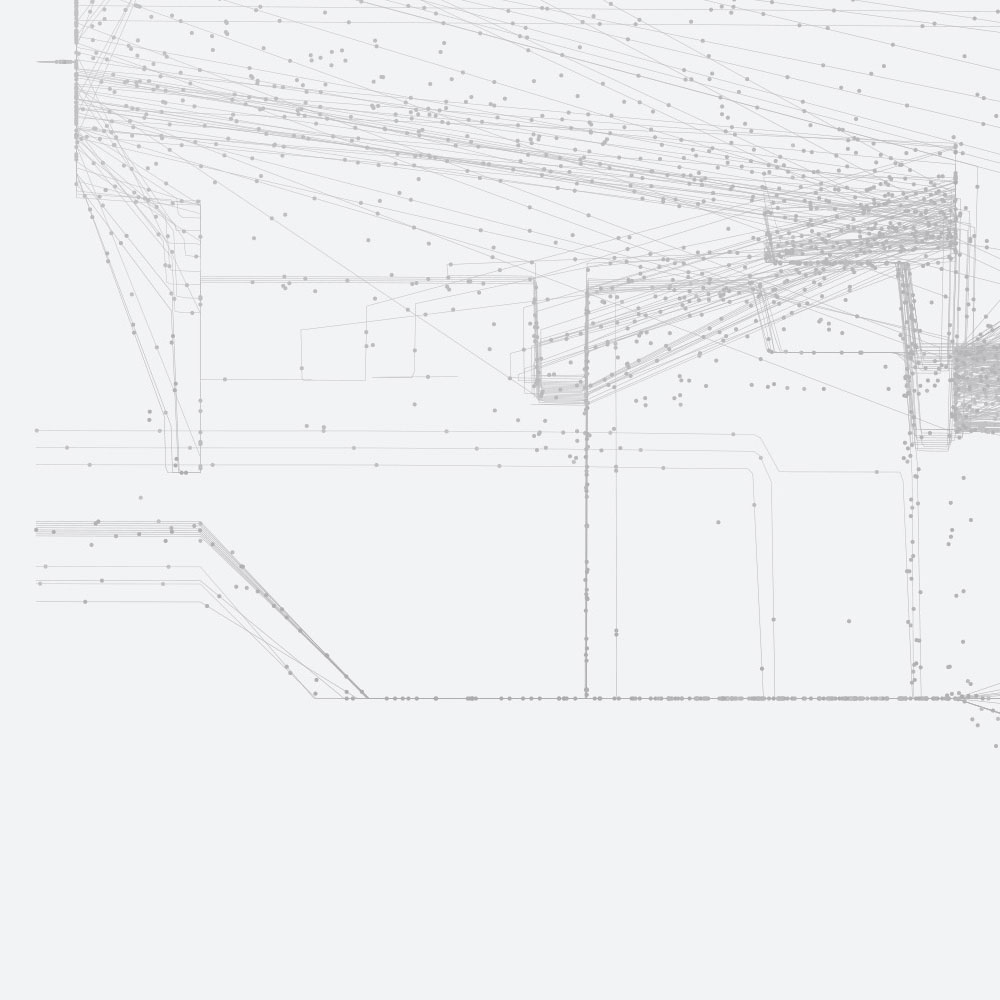

Interconnect technologies

Tursa has a high-performance interconnect with 4x 200 Gbps InfiniBand interfaces per node. It uses a two-layer fat-tree topology:

- Each node connects to 4 of the 5 L1 (leaf) switches within the same cabinet with 200 Gb/s links.

- Within an 8-node block, all nodes connect to the same 4 L1 switches.

- Each L1 switch connects to all 20 L2 switches via 200 Gb/s links, so traffic between any 2 nodes crosses at most 2 switch-to-switch hops.
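The two-hop bound can be checked on a scaled-down model of the fabric. The sketch below builds a toy two-layer fat tree and measures shortest paths with breadth-first search. The per-block, per-cabinet and L2 switch counts come from the text; the number of cabinets, the number of blocks per cabinet, and the rule for which 4 of the 5 L1 switches each block uses are hypothetical choices for illustration, not Tursa's actual cabling.

```python
from collections import deque
from itertools import combinations

# Values taken from the text above.
NODES_PER_BLOCK = 8
L1_PER_CABINET = 5
NUM_L2 = 20

# Hypothetical values chosen to keep the toy model small.
NUM_CABINETS = 2
BLOCKS_PER_CABINET = 2


def build_topology():
    """Return (adjacency sets, list of compute nodes) for a toy 2-layer fat tree."""
    adj = {}

    def link(a, b):
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    nodes = []
    for cab in range(NUM_CABINETS):
        l1_switches = [("L1", cab, s) for s in range(L1_PER_CABINET)]
        # Every L1 (leaf) switch links to every L2 switch.
        for sw in l1_switches:
            for k in range(NUM_L2):
                link(sw, ("L2", k))
        for blk in range(BLOCKS_PER_CABINET):
            # Hypothetical rule: each 8-node block skips one of the cabinet's
            # five L1 switches, so it connects to the other four.
            skipped = blk % L1_PER_CABINET
            block_switches = [sw for i, sw in enumerate(l1_switches) if i != skipped]
            for n in range(NODES_PER_BLOCK):
                node = ("node", cab, blk, n)
                nodes.append(node)
                for sw in block_switches:
                    link(node, sw)
    return adj, nodes


def shortest_path_links(adj, src, dst):
    """BFS from src to dst; compute nodes are never used as transit points."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        cur = queue.popleft()
        if cur == dst:
            return dist[cur]
        for nxt in adj[cur]:
            if nxt in dist:
                continue
            if nxt[0] == "node" and nxt != dst:
                continue  # do not route traffic through another compute node
            dist[nxt] = dist[cur] + 1
            queue.append(nxt)
    raise ValueError(f"no path from {src} to {dst}")


def max_switch_hops():
    """Worst-case switch-to-switch hops over all pairs of compute nodes."""
    adj, nodes = build_topology()
    worst = 0
    for a, b in combinations(nodes, 2):
        # Drop the node->L1 link at the start and the L1->node link at the end.
        worst = max(worst, shortest_path_links(adj, a, b) - 2)
    return worst


print(max_switch_hops())  # 2
```

In this model, nodes that share an L1 switch are zero switch-to-switch hops apart. Because each block uses 4 of the 5 switches in its cabinet, any two blocks in the same cabinet always share at least one L1 switch. Nodes in different cabinets must go up to an L2 switch and back down (L1 to L2 to L1), which gives the two-hop worst case.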

Layout/Physical system scale

Tursa is composed of 5 Eviden Sequana XH2000 racks, two management and storage racks and a tape library.

Cooling technology

Tursa compute nodes are housed in water-cooled Eviden Sequana XH2000 racks.

Scheduler details

Bull Slurm

System OS Details

Red Hat Enterprise Linux

Science and applications

DiRAC is recognised as the primary provider of HPC resources to the STFC Particle Physics, Astroparticle Physics, Astrophysics, Cosmology, Solar System and Planetary Science, and Nuclear Physics (PPAN: STFC Frontier Science) theory community. It provides the modelling, simulation, data analysis and data storage capability that underpins the STFC Science Challenges and our researchers' world-leading science outcomes.

The STFC Science Challenges are three fundamental questions in frontier physics:

- How did the Universe begin and how is it evolving?

- How do stars and planetary systems develop and how do they support the existence of life?

- What are the basic constituents of matter and how do they interact?

Access

Academic access

Access to DiRAC is coordinated by the STFC’s DiRAC Resource Allocation Committee, which issues an annual Call for Proposals for time as well as a Director’s Discretionary Call.

Details of access to DiRAC can be found on the DiRAC website.

Commercial access

DiRAC has a long track record of collaborating with industry on bleeding-edge technology, and we are recognised as a global pioneer of scientific software and computing hardware co-design.

We specialise in the design, deployment, management and utilisation of HPC for simulation and large-scale data analytics. We work closely with our industrial partners on the challenges of data intensive science, machine learning and artificial intelligence that are increasingly important in the modern world.

Details of industrial access to DiRAC can be found on the DiRAC website.

Trial access

If you are a researcher who wants to try the DiRAC resources before making a full application (for example, to get a feel for HPC, test or benchmark codes, or see what the DiRAC resources can do for you), you can apply for seedcorn time.

Details of seedcorn access to DiRAC can be found on the DiRAC website.

People

The system is managed by DiRAC and is maintained by EPCC and the hardware provider Eviden.

Support

The DiRAC helpdesk is the first point of contact for all questions relating to the DiRAC Extreme Scaling services. Support is available Monday to Friday from 08:00 until 18:00 UK time, excluding UK public holidays.

Contact us at dirac-support@epcc.ed.ac.uk if you encounter any problems running on the system or for questions about disk space, allocations or software availability.

Documentation

See the Tursa documentation for details of how to log on, compile and run jobs, access disk storage, etc.